Message boards : Graphics cards (GPUs) : Low power GPUs performance comparative


I live in the Canary Islands.

ID: 52615

ServicEnginIC, thanks for that! Good work :-)

ID: 52617

Nice work!

ID: 52620

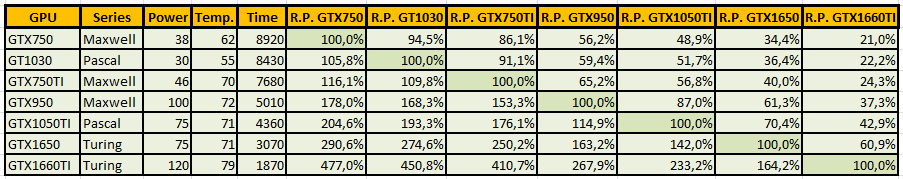

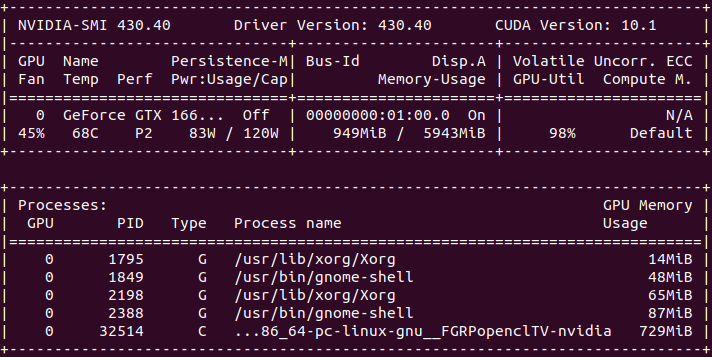

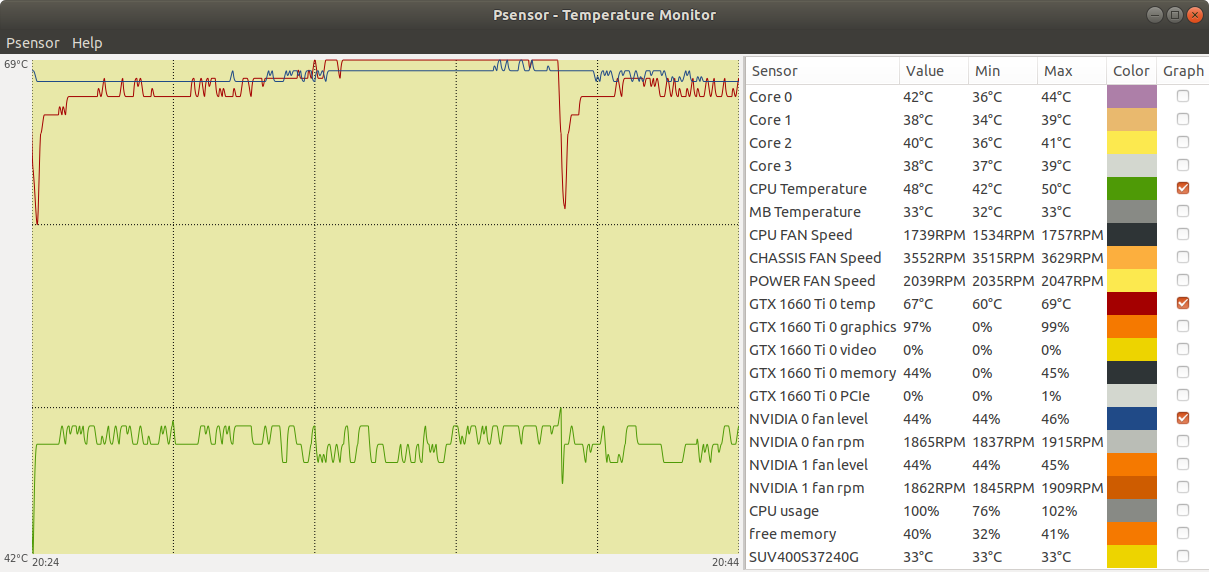

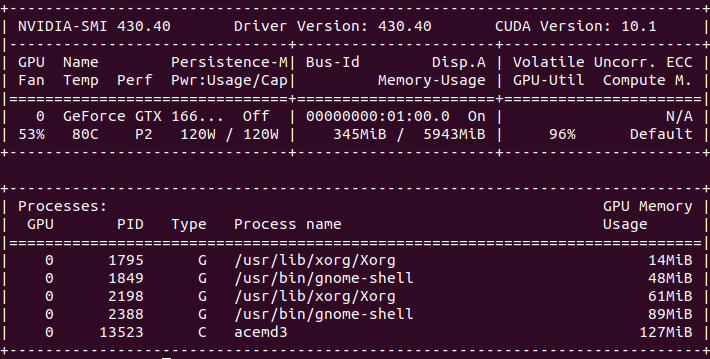

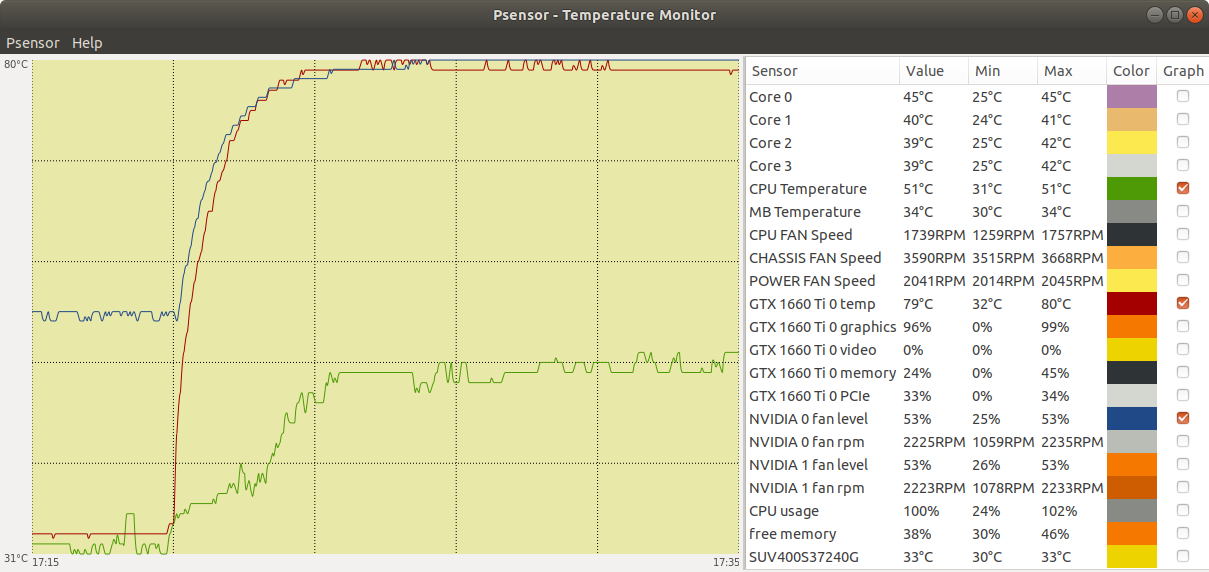

I guess you don't spend much time at the beach with all this GPUgrid testing!! +1 ;-)

These tests confirmed what I previously suspected: GPUGrid is the most power-demanding project I currently process. GPUGrid tasks really do squeeze a GPU's power to its maximum! Here is nvidia-smi information for my most powerful card while executing one TONI_TESTDHFR206b WU (120 W of 120 W used), and here are Psensor curves for the same card, from GPU idle to executing one TONI_TESTDHFR206b WU.

ID: 52633

> GPUGrid is the most power-demanding project I currently process.

Agreed.

> here are Psensor curves for the same card

Always interesting to see how other volunteers run their systems! What I found interesting in your Psensor chart:

- GPU running warm at 80 degrees.
- CPU running cool at 51 degrees (100% utilization reported).
- Chassis fan running at 3600 rpm (do you need ear protection from the noise?).

As a comparison, my GTX 1060 on Win10 processes task a89-TONI_TESTDHFR206b-23-30-RND6008_0 in 3940 seconds. Your GTX 1660 Ti is completing test tasks faster, at around the 1830-second mark. The Turing cards (on Linux) are a nice improvement in performance!

ID: 52634

Thanks for the data.

ID: 52636

Thanks Toni. Good to know.

ID: 52639

> - CPU running cool at 51 degrees (100% utilization reported)

The CPU in this system is a low-power Q9550S, with a high-performance Arctic Freezer 13 CPU cooler. And several degrees of the CPU temperature come from heat radiated by the GPU...

> - GPU running warm at 80 degrees.

I had to work very hard to keep the GPU temperature below 80 ºC at full load for this particular graphics card. But perhaps that is a matter for another thread... http://www.gpugrid.net/forum_thread.php?id=4988

ID: 52640

For comparison:

ID: 52658

E@H is the most mining-like BOINC project I've seen, in that it responds better to memory OC than to core OC. Try a math project that can easily run parallel calculations to push GPU temps.

ID: 52685

> SWAN_SYNC is ignored in acemd3.

Well, the CPU time equals the run time, so could you elaborate on this? Could someone without SWAN_SYNC check their CPU time and run time for ACEMD3 tasks, please?

ID: 52707

> > SWAN_SYNC is ignored in acemd3.
>
> Well, the CPU time equals the run time, so could you elaborate on this?

CPU time = run time with the new app. https://www.gpugrid.net/result.php?resultid=21402171

ID: 52710

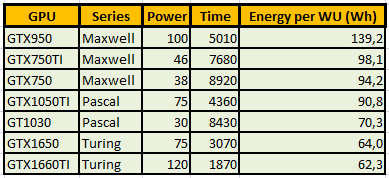

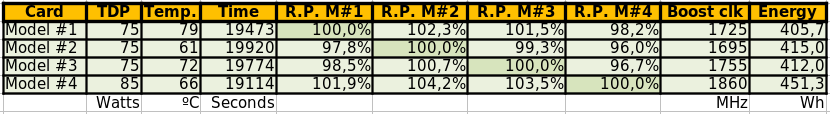

I can still squeeze a bit more out of the data from my tests:

ID: 52974

Nice stats.

ID: 52975

> (If only we could get some work for them....)

If the GPUGRID people send out any work at all in the near future... the development within the past weeks, unfortunately, was a steadily decreasing number of available tasks :-)

ID: 52976

How do we calculate the energy consumed by a GPU to process a certain work unit?

> the development within the past weeks, unfortunately, was a steadily decreasing number of available tasks :-)

This situation currently remains the same. Who knows, maybe a change of cycle is in progress????
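For the energy question above, a minimal sketch (not necessarily the method used for the tables in this thread): energy per work unit is average GPU power multiplied by run time or, if power is logged at regular intervals, the sum of the samples over time. The function names are hypothetical; the example figures (about 120 W, about 1830 seconds) are the GTX 1660 Ti numbers reported earlier in this thread. Note that nvidia-smi reports GPU board power only, so CPU load and PSU losses at the wall are not included.

```python
from typing import Sequence


def task_energy_kwh(avg_power_watts: float, run_time_seconds: float) -> float:
    """Energy (kWh) = average power (W) x run time (h) / 1000."""
    return avg_power_watts * (run_time_seconds / 3600.0) / 1000.0


def energy_from_samples_kwh(samples_watts: Sequence[float], interval_seconds: float) -> float:
    """Integrate evenly spaced power samples with the trapezoidal rule.

    Returns kWh; 1 kWh = 3.6e6 joules (watt-seconds).
    """
    if len(samples_watts) < 2:
        return 0.0
    joules = sum(
        (a + b) / 2.0 * interval_seconds
        for a, b in zip(samples_watts, samples_watts[1:])
    )
    return joules / 3.6e6


# About 120 W for a ~1830 s task (GTX 1660 Ti figures from this thread).
print(f"{task_energy_kwh(120, 1830):.3f} kWh per task")  # 0.061 kWh per task
```

The sampled version is the more honest of the two when the card's power draw varies during a task, as the Psensor curves in this thread suggest it can.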

ID: 52980

Hello ServicEnginIC,

ID: 53213

And by the way, as you mentioned that you live in the Canary Islands and prefer low-power devices, here is some more info about my GPUs:

ID: 53214

Thank you very much for your kind words, Carl.

> My preferred website to find info on GPUs is Techpowerup.

I didn't know about the Techpowerup site. They have an enormous database of GPU data with very exhaustive specifications. I like it, and I've taken note.

> But anyway, I would not recommend buying the GT710 or GT730 anymore unless you need their very low consumption.

I find both of these models perfect for office computers, especially the fanless models, which offer silent and smooth operation for office applications along with their low power consumption. But I agree that their performance is rather poor for processing at GPUGrid.

I've made a kind request for the Performance tab to be rebuilt. At the end of this tab there was a graph named "GPU performance ranking (based on long WU return time)". Currently this graph is blank. When it worked, it showed a very useful classification of GPUs according to their performance at processing GPUGrid tasks. The GT 1030 alone sometimes appeared at the far right (lowest performance) of the graph, and at other times appeared only as a legend outside the graph. The GTX 750 Ti always appeared borderline on this graph, and the GTX 750 did not appear at all. I always considered it a kind invitation not to use "out of graph" GPUs...

> The GTX745 is just a little slower than the GT1030, but it was sold to OEMs only

That was the first time I'd heard about the GTX 745 GPU. I thought: a mistake? But then I searched for "GTX 745 Techpowerup"... and it appeared as an OEM GPU! Nice :-)

ID: 53367

Thanks very much for the info.

ID: 53948

> I recently installed a couple of new Dell/Alienware GTX 1650 4GB cards and they are very productive...

I also have three GTX 1650 cards of different models currently running 24/7, and I'm very satisfied. They are very efficient given their relatively low power consumption of 75 W (max). On the other hand, all the GPU models listed in my original performance table are processing current ACEMD3 WUs in time to achieve the full bonus (result returned in <24 h) :-)

ID: 54244

> Thanks very much for the info.

There really isn't much point in overclocking the core clocks, because GPU Boost 3.0 overclocks the card on its own in firmware, based on the thermal and power limits of the card and host. You do want to overclock the memory, though, since the Nvidia drivers penalize all their consumer cards when a compute load is detected on the card and push the card down to the P2 power state, with significantly lower memory clocks than the default gaming memory clock and the stated card spec. The memory clocks can be returned to P0 power levels and the stated spec by using any of the overclocking utilities in Windows, or the Nvidia X Server Settings app in Linux after the coolbits have been set.

ID: 54247

> ... the Nvidia drivers penalize all their consumer cards when a compute load is detected on the card and push the card down to the P2 power state, with significantly lower memory clocks than the default gaming memory clock and the stated card spec.

Why so?

ID: 54251

> ... the Nvidia drivers penalize all their consumer cards when a compute load is detected on the card and push the card down to the P2 power state, with significantly lower memory clocks than the default gaming memory clock and the stated card spec.

Because Nvidia doesn't want you to purchase inexpensive consumer cards for compute when they want to sell you expensive, compute-designed Quadros and Teslas. No reason other than to maximize profit.

ID: 54253

> ... the Nvidia drivers penalize all their consumer cards when a compute load is detected on the card and push the card down to the P2 power state, with significantly lower memory clocks than the default gaming memory clock and the stated card spec.

Well, they also say they can't guarantee valid results in the higher P state. Momentary drops or errors are OK in video games, but not in scientific computations, so they say they drop down the P state to avoid those errors. But like Keith says, you can OC the memory. Just be careful, because you can overdo it and start to throw A LOT of errors before you know it, and then you are put in time-out by the server.

ID: 54254

It depends on the card and on the generation family. I can overclock a 1080 or 1080 Ti by 2000 MHz because of the GDDR5X memory, and run them at essentially 1000 MHz over the official "graphics use" spec.

ID: 54256

What happens when there is a memory error during a game? Wrong color, screen tearing, odd physics on a frame? The game moves on. So what.

ID: 54359

> What happens when there is a memory error during a game? Wrong color, screen tearing, odd physics on a frame? The game moves on. So what.

In my opinion, Nvidia does this not because of concern for valid results; it's because they don't really want consumer GPUs used for compute. They'd rather sell you a much more expensive Quadro or Tesla card for that. The performance penalty is just an excuse.

ID: 54361

> What happens when there is a memory error during a game? Wrong color, screen tearing, odd physics on a frame? The game moves on. So what.

Exactly this.

ID: 54362

> What happens when there is a memory error during a game? Wrong color, screen tearing, odd physics on a frame? The game moves on. So what.

+100

You can tell if you are overclocking beyond the bounds of your card's cooling and the compute applications you run simply by monitoring the errors reported, if any. Why would Nvidia care one whit whether the end user has compute errors on the card? They carry no liability for such actions; that is totally on the end user. They build consumer cards for graphics use in games. That is their only concern regarding whether the card produces any kind of errors: whether it drops frames. If you are using the card for secondary purposes, then that is your responsibility. They simply want to sell you a Quadro or Tesla and have you use it for its intended purpose, which is compute. Those cards are clocked significantly lower than the consumer cards so that they will not produce any compute errors when used in typical business compute applications. Distributed computing is not even on their radar for application usage; DC is such a small percentage of overall graphics card use that it is not even considered.

ID: 54363

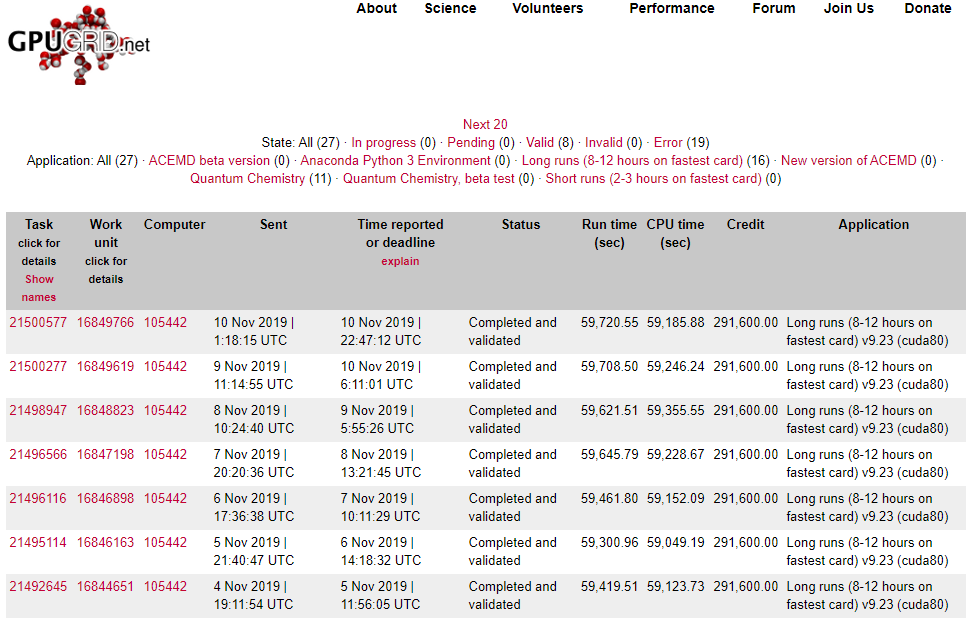

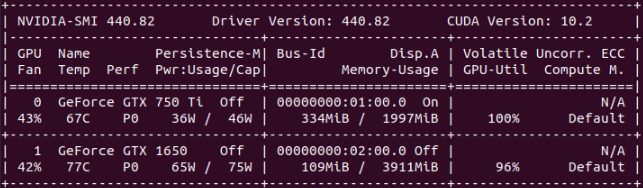

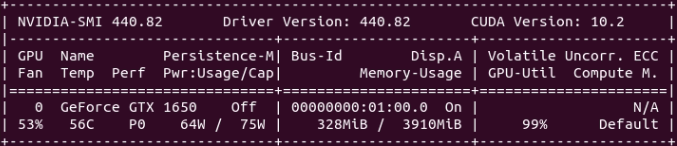

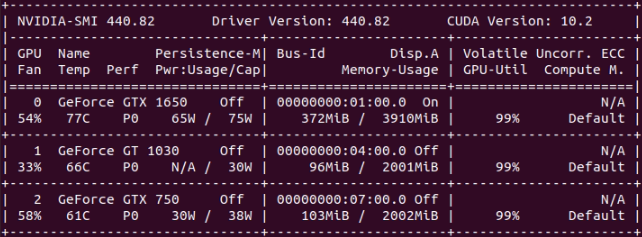

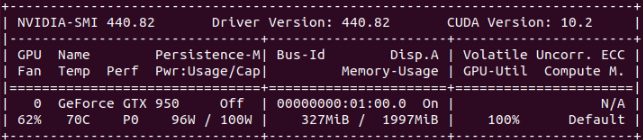

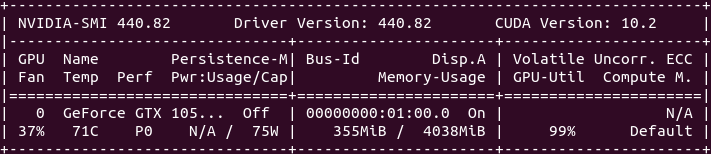

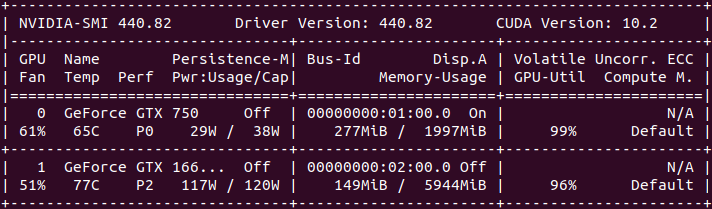

On Apr 5th 2020 | 15:35:28 UTC Keith Myers wrote:
> ...Nvidia drivers penalize all their consumer cards when a compute load is detected on the card and push the card down to the P2 power state, with significantly lower memory clocks than the default gaming memory clock and the stated card spec.

I've been investigating this. I suppose it is more likely to happen with Windows drivers (?). I'm currently running GPUGrid as the preferred GPU project on six computers. All these systems are based on Ubuntu Linux 18.04, with no overclocking on the GPUs apart from some factory-overclocked cards, and coolbits unchanged from their default. Results of my tests are as follows, as reported by the Linux driver's nvidia-smi command:

* System #325908 (Double GPU)
* System #147723
* System #480458 (Triple GPU)
* System #540272
* System #186626

And the only exception:

* System #482132 (Double GPU)

As can be seen in the "Perf" column, all cards but the noted exception are freely running at the P0 maximum performance level. On the double GPU system noted as the exception, the GTX 1660 Ti card is running at the P2 performance level. But in this situation, power consumption is 117 W of 120 W TDP and temperature is 77 ºC, while GPU utilization is 96%. I think that raising the performance level would probably mean exceeding the recommended power consumption/temperature, for a relatively small performance increase...
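To repeat this check on other Linux hosts, the performance state can also be read in a script-friendly form with `nvidia-smi --query-gpu=... --format=csv,noheader,nounits`. The Python below is a sketch that parses that CSV output and lists the cards not running at P0; the field list and function names are assumptions for illustration, the second sample row is made up, and fields a card cannot report (shown as `[N/A]`) become `None`.

```python
import csv
import io
from typing import Dict, List, Optional

# Column order must match the query, e.g.:
#   nvidia-smi --query-gpu=index,name,pstate,power.draw,power.limit,temperature.gpu,utilization.gpu --format=csv,noheader,nounits
FIELDS = ["index", "name", "pstate", "power_draw_w", "power_limit_w", "temp_c", "util_pct"]
NUMERIC_FIELDS = ("power_draw_w", "power_limit_w", "temp_c", "util_pct")


def to_number(text: str) -> Optional[float]:
    """Convert a field to float; values such as '[N/A]' become None."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_gpu_status(csv_text: str) -> List[Dict]:
    """Parse nvidia-smi CSV output (no header, no units) into one dict per GPU."""
    rows = []
    for record in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if not record:
            continue  # ignore blank lines
        row: Dict = dict(zip(FIELDS, (field.strip() for field in record)))
        for key in NUMERIC_FIELDS:
            row[key] = to_number(row[key])
        rows.append(row)
    return rows


def not_in_p0(rows: List[Dict]) -> List[Dict]:
    """Return the cards reporting any performance state other than P0 (e.g. P2)."""
    return [row for row in rows if row["pstate"] != "P0"]


# Row 0 reuses the GTX 1660 Ti figures reported above; row 1 is made up for the example.
SAMPLE = """0, GeForce GTX 1660 Ti, P2, 117.00, 120.00, 77, 96
1, GeForce GTX 1650, P0, 68.50, 75.00, 60, 97
"""

for gpu in not_in_p0(parse_gpu_status(SAMPLE)):
    print(f"GPU {gpu['index']} ({gpu['name']}): {gpu['pstate']}, "
          f"{gpu['power_draw_w']} W of {gpu['power_limit_w']} W")
```

In practice the CSV text would come from running the nvidia-smi query on the host; it is passed in as a string here so the parsing can be checked without a GPU.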

ID: 54365

Keith forgot to mention that the P2 penalty only applies to higher-end cards. Low-end cards in the x50 series or lower do not get this penalty and are allowed to run in full P0 mode for compute.

ID: 54366

To sum up the above: when NV reduces the clocks of a "cheap" high-end consumer card for the sake of correct calculations, it's just an excuse, because they want to make us buy overly expensive professional cards which are clocked even lower for the sake of correct calculations. That's a totally consistent argument. Oh wait, it's not!

> They simply want to sell you a Quadro or Tesla and have you use it for its intended purpose, which is compute. Those cards are clocked significantly lower than the consumer cards so that they will not produce any compute errors when used in typical business compute applications.

Downclocking evidently can reduce some errors, so that's what NV does.

> In my opinion, Nvidia does this not because of concern for valid results; it's because they don't really want consumer GPUs used for compute. They'd rather sell you a much more expensive Quadro or Tesla card for that. The performance penalty is just an excuse.

(I've snipped the distracting parts)

ID: 54368

Many thanks to all for your input. I just finished a PABLO WU which took a little over 12 hrs on one of my GTX 1650 GPUs.

ID: 54371

I find myself agreeing with Mr Zoltan on this. I am inclined to leave the timings at stock on these cards, as I have enough problems with errors related to running two dissimilar GPUs on my hosts. When I resolve that issue, I might try pushing the memory closer to the 5 GHz advertised GDDR5 max speed on my 1650s.

ID: 54372

> ...I just finished a PABLO WU which took a little over 12 hrs on one of my GTX 1650 GPUs.

I guess that an explanation of the purpose of these new PABLO WUs will appear in the "News" section shortly... (?)

ID: 54374

> ...I have enough problems with errors related to running two dissimilar GPUs on my hosts.

This is a known problem with the wrapper-based ACEMD3 tasks, already announced by Toni in a previous post: "Can I use it on multi-GPU systems?". Users experiencing this problem might be interested in taking a look at this post by Retvari Zoltan, with a workaround to prevent these errors.

ID: 54375

Thanks, ServicEnginIC. I always try to use that method when I reboot after updates. I need to get myself a good UPS backup as I live where the power grid is screwed up by the politicians and activists and momentary interruptions are way too frequent.

ID: 54380

> Am I correct that identical GPUs won't have the problem?

That's what I understand too, but I can't check it myself for the moment.

> I've noticed that ACEMD tasks which have not yet reached 10% can be restarted without errors when the BOINC client is closed and reopened.

Interesting. Thank you for sharing this.

ID: 54381

Yes, you can stop and start a task at any time when all your cards are the same.

ID: 54382

> Yes, you can stop and start a task at any time when all your cards are the same.

Thanks for confirming that, Keith. I've also found that when device 0 is in the lead but below 90%, it will (sometimes) pick them both back up without erroring. When dev 0 is beyond 90%, it will show a computation error even if it is in the lead. Has anyone else observed this?

An unrelated note: BOINC sees a GTX 1650 as device 0 when paired with my 1060 3GB, even though it is slightly slower than the 1060 (almost undetectable when running ACEMD tasks). I think it might be due to Turing being newer than Pascal. I don't see any GPU benchmarking done by the BOINC manager.

ID: 54384

By the way, muchas gracias to ServicEnginIC for hosting this thread! It's totally synchronous with my current experiences as a cruncher. 🥇👍🥇

ID: 54385

> An unrelated note: BOINC sees a GTX 1650 as device 0 when paired with my 1060 3GB, even though it is slightly slower than the 1060 (almost undetectable when running ACEMD tasks).

BOINC will order Nvidia cards based on CC (compute capability), and then by how much memory they have. The 1650 has a CC of 7.5, whereas the 1060 has 6.1. The 1650 also has more memory than the 3GB version of the 1060.
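The ordering rule described above can be sketched as a two-level sort key. This is a simplified model written from the description in this post, not BOINC's actual client code (which may weigh further criteria); the `Gpu` class and function name are hypothetical, and the compute capabilities and memory sizes are the ones quoted for these two cards.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Gpu:
    name: str
    compute_capability: Tuple[int, int]  # e.g. (7, 5) for CC 7.5
    memory_mb: int


def order_like_boinc(gpus: List[Gpu]) -> List[Gpu]:
    """Sort best-first: higher compute capability wins, then more memory.

    Simplified model of the rule described in this post only.
    """
    return sorted(gpus, key=lambda g: (g.compute_capability, g.memory_mb), reverse=True)


cards = [
    Gpu("GTX 1060 3GB", (6, 1), 3 * 1024),
    Gpu("GTX 1650", (7, 5), 4 * 1024),
]
for device_number, gpu in enumerate(order_like_boinc(cards)):
    print(device_number, gpu.name)  # 0 GTX 1650, then 1 GTX 1060 3GB
```

Because compute capability is compared first, the newer Turing card lands at device 0 even if the older card is slightly faster, which matches what was observed above.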

ID: 54386

Pop Piasa wrote:
> By the way, muchas gracias...

You're welcome, de nada. I enjoy learning new things and sharing knowledge.

Ian&Steve C. wrote:
> BOINC will order Nvidia cards based on CC (compute capability), and then by how much memory they have.

I had always wondered about that. Thank you very much! One more detail I've learnt...

ID: 54387

Thanks for your generous reply, Ian & Steve; you guys have a great working knowledge of BOINC's innards!

ID: 54389

> Great that you brought your talents and hardware over to this project when they stopped SETI. (Hmm... does shutting down the search mean that they gave up, or that the search is complete?)👽

Without getting too far off topic, they basically had money/staffing problems. They didn't have the resources to constantly babysit their servers while working on the backend analysis system at the same time. All of the work that has been processed over the last 20 years has not been analyzed by the scientists yet; it's just sitting in the database. They are building the analysis system that will sort through all the results, so they are shutting down the data distribution process so they can focus on that. They feel like 20 years of listening to the northern sky was enough for now. They left the option open that the project might return someday.

ID: 54390

> They feel like 20 years of listening to the northern sky was enough for now.

When SETI first started, I used a Pentium II machine at the university I retired from to crunch when it was idle, and my boss dubbed me "Mr Spock". Thanks for filling in the details and providing a factual perspective to counter my active imagination. I should have guessed it was a resource issue.

ID: 54394

SETI is written in bold letters in the history of distributed computing.

ID: 54396

On Apr 18th 2020 | 22:34:50 UTC Pop Piasa wrote:
> ...I just finished a PABLO WU which took a little over 12 hrs on one of my GTX 1650 GPUs.

I also received one of these PABLO WUs... and by chance it was assigned to my slowest GPU currently in production. It took 34.16 hours to complete on a GTX 750, still in time to get the half bonus.

ID: 54397

> I also received one of these PABLO WUs... and by chance it was assigned to my slowest GPU currently in production.

I've run 2 PABLOs so far, but only on my 1650s. They both ran approx. 12:10 h run time and 11:58 h CPU time. If I can get one on my 750 Ti (also a Dell/Alienware, clocked at 1200 MHz; 2 GB 2700 MHz DDR3), we can get a good idea of how they all compare. If anyone has run a PABLO-designed ACEMD task on a 1050 or another low-power GPU, please share your results. I would also like to see how these compare to a 2070 Super, as I am considering replacing the 750 Ti with one.

ID: 54400

> If anyone has run a PABLO-designed ACEMD task on a 1050 or another low-power GPU, please share your results.

e2s112_e1s62p0f90-PABLO_UCB_NMR_KIX_CMYB_5-2-5-RND2497_0: GTX 750 Ti, 109,532.48 seconds = 30h 25m 32.48s
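A small helper for the seconds-to-hours conversion used in these result posts, as a sketch; the function name is hypothetical and not part of BOINC or GPUGrid. It works in whole hundredths of a second so that splitting into hours and minutes stays exact.

```python
def format_run_time(seconds: float) -> str:
    """Format a run time in seconds as 'Xh Ym Z.ZZs'."""
    # Round to hundredths of a second, then split with integer arithmetic.
    total_cs = round(seconds * 100)
    hours, rest = divmod(total_cs, 360_000)   # 3600 s * 100
    minutes, rest = divmod(rest, 6_000)       # 60 s * 100
    return f"{hours}h {minutes}m {rest / 100:.2f}s"


print(format_run_time(109_532.48))  # 30h 25m 32.48s
```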

ID: 54402

> If anyone has run a PABLO-designed ACEMD task on a 1050 or another low-power GPU, please share your results. I would also like to see how these compare to a 2070 Super, as I am considering replacing the 750 Ti with one.

Not a low-power card, but here is something to expect when moving to the 2070 Super. I have 2080s and 2070s. The 2070 Super should fall somewhere between these two, maybe closer to the 2080 in performance.

- My 2080s do them in about ~13000 seconds = ~3.6 hours.
- My 2070s do them in about ~15500 seconds = ~4.3 hours.

So maybe around 4 hrs on average for a 2070 Super.
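One way to turn "somewhere between these two" into a number, as a rough sketch: interpolate throughput (tasks per second) between the two known cards and convert back to time. The function name and the `weight_fast` parameter are hypothetical (0.5 means halfway; a value above 0.5 would reflect "closer to the 2080"), and real cards will not necessarily scale this way.

```python
def interpolate_run_time(fast_s: float, slow_s: float, weight_fast: float = 0.5) -> float:
    """Estimate the run time of a card whose throughput lies between two known cards.

    Interpolates throughput (tasks per second), not time, then converts back.
    weight_fast = 1.0 -> same as the fast card; 0.0 -> same as the slow card.
    """
    if not 0.0 <= weight_fast <= 1.0:
        raise ValueError("weight_fast must be between 0 and 1")
    rate = weight_fast / fast_s + (1.0 - weight_fast) / slow_s
    return 1.0 / rate


# RTX 2080 ~13000 s and RTX 2070 ~15500 s per PABLO task (figures from this post).
estimate = interpolate_run_time(13_000, 15_500)
print(f"{estimate:.0f} s = {estimate / 3600:.1f} h")  # 14140 s = 3.9 h
```

Interpolating throughput rather than time is a design choice: it treats the 2070 Super as doing a fraction of each card's work per second, which is slightly more optimistic than averaging the two times.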

ID: 54403

> My 2080s do them in about ~13000 seconds = ~3.6 hours.

Thanks for the info; that pretty much agrees with the percentage ratios I've seen on Userbenchmark.com. I plan to replace my PSU when I can afford it, and eventually have two matching high-power GPUs on my ASUS Prime board (i7-7700K). That will leave me with a 1060 3GB and a 750 Ti to play with in some other old machine I might acquire from my friends' business throw-aways.

ID: 54404

> I also received one of these PABLO WUs... and by chance it was assigned to my slowest GPU currently in production.

I was lucky to receive two more PABLO WUs today. One of them was assigned to my currently fastest GPU, so I can compare it with the first one already mentioned, assigned to the slowest. I'll put them in image format, because they'll disappear from GPUGrid's database in a few days.

- GTX 750 - WU 19473911: 122,977 seconds = 34.16 hours
- GTX 1660 Ti - WU 19617512: 24,626 seconds = 6.84 hours

Conclusion: the GTX 1660 Ti processed its PABLO WU in about 1/5 the time of the GTX 750 (about 500% relative performance).

The second PABLO WU received today has again been assigned to another of my GTX 750 GPUs, and it will serve as a consistency check for execution time compared to the first one. At this time, this WU is 25.8% complete after an execution time of 9.5 hours.

If I were lucky enough to get PABLO WUs on each of my GPUs, I'd be able to prepare a new table similar to the one at the very beginning of this thread... Or, perhaps, Toni would be able to take this particular batch to rebuild the Performance page with data from every GPU model currently contributing to the project...😏
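The two calculations in this post, the relative speed of the two cards and how long the second GTX 750 WU might take in total, can be sketched as follows. The function names are hypothetical, and the projection assumes progress advances at a constant rate, which BOINC's reported percentage does not guarantee.

```python
def relative_speed(slow_seconds: float, fast_seconds: float) -> float:
    """How many times faster the fast card finished the same kind of task."""
    return slow_seconds / fast_seconds


def projected_total_hours(elapsed_hours: float, percent_done: float) -> float:
    """Linear extrapolation of total run time from the reported progress."""
    if not 0 < percent_done <= 100:
        raise ValueError("percent_done must be in (0, 100]")
    return elapsed_hours / (percent_done / 100)


# PABLO WUs from this post: GTX 750 took 122,977 s, GTX 1660 Ti took 24,626 s.
print(f"GTX 1660 Ti vs GTX 750: {relative_speed(122_977, 24_626):.2f}x")  # 4.99x
# Second GTX 750 WU: 25.8 % done after 9.5 h.
print(f"Projected total: {projected_total_hours(9.5, 25.8):.1f} h")  # 36.8 h
```

A projection of roughly 36.8 h against the first WU's 34.16 h would be in the same range, which is the kind of consistency check described above.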

ID: 54425

Here you go, ServicEnginIC:

ID: 54428

Here you go, ServicEnginIC, for the PABLO tasks.

ID: 54429

Ok!

ID: 54430

Well, I tried to shift a PABLO WU over to my GTX 750 Ti by suspending it at 0.23% on my GTX 1650, and when it restarted on the 750 Ti it crashed, disproving my theory that all ACEMD tasks can be restarted before 10%. Perhaps it still holds for MDAD tasks.

ID: 54438

I think the PABLO tasks cannot be restarted at all, even in a system with identical GPUs. I had a GPU crash the other day, and after rebooting, a PABLO task tried to restart on a different GPU and failed immediately, saying that it cannot be restarted on a different device. I haven't had that problem with the MDAD tasks, though. They seem to be able to be stopped and restarted pretty much whenever, even on another device if it's the same model as the one it started on, at 0-90% without issue.

ID: 54439

I am still running with the switch timers set at 360 minutes, so BOINC won't switch away from a running task for six hours. I think that might have covered my PABLO tasks, but I have never changed my settings, even after moving to an all-RTX 2080 host.

ID: 54443

Here are more PABLO results for your database, ServicEnginIC:

ID: 54446

> Here are more PABLO results for your database, ServicEnginIC:

ServicEnginIC, I botched the above info, please ignore it. Here is what's correct:

- My GTX 1650 on ASUS Prime: 44,027.23 sec = 12.23 hrs https://www.gpugrid.net/workunit.php?wuid=19495626
- My GTX 1060 3GB on ASUS Prime: 37,177.73 sec = 10.32 hrs https://www.gpugrid.net/workunit.php?wuid=19422730

New entries below:

- My GTX 750 Ti 2GB on Optiplex 980: 116,121.21 sec = 32.25 hrs https://www.gpugrid.net/workunit.php?wuid=19730833 (a tortoise)

Found more PABLOs...

- My GTX 1650 4GB on Optiplex 980: 45,518.61 sec = 12.64 hrs https://www.gpugrid.net/workunit.php?wuid=19740597
- My GTX 1060 3GB on ASUS Prime: 37,556.23 sec = 10.43 hrs https://www.gpugrid.net/workunit.php?wuid=19755484
- My GTX 1650 4GB on ASUS Prime: 44,103.76 sec = 12.25 hrs https://www.gpugrid.net/workunit.php?wuid=19436206

That's all for now.

ID: 54448

I'll try to put together a new comparison table this Sunday, April 26th... I'm waiting to collect data to better complete my original table. Today I caught a new PABLO WU, and it is now running on a GT 1030. I'm still missing data for my GTX 950 and GTX 1050 Ti... I've got data from you for the GTX 750 Ti and GTX 1650, among several other models. Thank you!

ID: 54449

> I'll try to put together a new comparison table this Sunday, April 26th...

Here's one more PABLO run on my i7-7700K ASUS Prime: GTX 1650 4GB, 44,092.20 sec = 12.25 hrs. http://www.gpugrid.net/workunit.php?wuid=19859216

I'm getting consistent results from these Alienware cards. They run pretty quietly for a single-fan cooler, at ~80% fan and a steady 59-60 °C at ~24 °C room temperature (fan control by MSI Afterburner). They run at ~97% usage and ~90% power consumption on ACEMD tasks. They are factory overclocked by +200 MHz, so I'd like to see results from a different make of card for comparison. I think this might be the most GPU compute power per watt that NVIDIA has made so far. 🚀

ID: 54495

I stumbled on this result while tracing a WU error history.

ID: 54496

> I stumbled on this result while tracing a WU error history.

Also see these results for a PABLO task on a GTX 1660S: https://www.gpugrid.net/workunit.php?wuid=19883994

GTX 1660S / i5-9600KF / Linux: 26,123.87 sec = 7.26 hrs

ID: 54497

> e8s30_e2s69p4f100-PABLO_UCB_NMR_KIX_CMYB_5-0-5-RND3175

This CPU is probably overcommitted, hindering the GPU's performance.

- e6s59_e2s143p4f450-PABLO_UCB_NMR_KIX_CMYB_5-2-5-RND0707_0: 11,969.48 sec = 3.32 hrs = 3h 19m 29.48s, RTX 2080 Ti / i3-4160
- e15s66_e5s54p0f140-PABLO_UCB_NMR_KIX_CMYB_5-0-5-RND6536_0: 10,091.61 sec = 2.80 hrs = 2h 48m 11.61s, RTX 2080 Ti / i3-4160
- e7s4_e2s94p3f30-PABLO_UCB_NMR_KIX_CMYB_5-1-5-RND1904_0: 9,501.28 sec = 2.64 hrs = 2h 38m 21.28s, RTX 2080 Ti / i5-7500 (this GPU has higher clocks than the previous one)

ID: 54498

My latest PABLO on one of my RTX 2080s

ID: 54499

For future reference,

ID: 54574

> A GTX 1660 Ti can be brought lower in power with an increased overclock, resulting in the same performance at (an estimated) 85 W. That saves you about $40 a year on electricity.

Thank you for your tip. I'll add it to my list of "things to test"...

ID: 54577

> A GTX 1660 Ti can be brought lower in power with an increased overclock, resulting in the same performance at (an estimated) 85 W. That saves you about $40 a year on electricity.

I agree with ProDigit. I have used the same principle on my GPUs and experienced similar results. I run my GPUs power-limited, and have also overclocked with a power limit on both ACEMD2 and ACEMD3 work units, with positive results.

Caveat: I have not tested power draw at the wall. I find it odd that greater performance can be derived by both overclocking and power limiting. Testing at the wall should be the next step to validate this method of increasing output.
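A back-of-the-envelope check of the "$40 a year" figure, as a sketch: the savings are the power difference times the hours run per year times the electricity price. The 120 W stock figure is the GTX 1660 Ti TDP mentioned earlier in this thread; the $0.13/kWh price and the 24/7 duty cycle are assumptions, and, as the caveat above says, GPU-reported power is not the same as power measured at the wall. On Linux the power limit itself is typically set with `nvidia-smi -pl <watts>` (root required).

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, i.e. running 24/7


def annual_savings(stock_watts: float, limited_watts: float,
                   price_per_kwh: float, duty_cycle: float = 1.0):
    """Return (kWh saved per year, money saved per year) for a power-limited card.

    Assumes the same throughput at both power levels (the claim being tested)
    and counts GPU power only, not wall power.
    """
    saved_kwh = (stock_watts - limited_watts) * HOURS_PER_YEAR * duty_cycle / 1000.0
    return saved_kwh, saved_kwh * price_per_kwh


# 120 W stock GTX 1660 Ti vs. ~85 W; the $0.13/kWh price is an assumption.
kwh, dollars = annual_savings(120, 85, 0.13)
print(f"{kwh:.1f} kWh/year, about ${dollars:.0f}/year")  # 306.6 kWh/year, about $40/year
```

At a different local electricity price the dollar figure scales linearly, so the same 35 W reduction is worth more or less depending on where the cruncher lives.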

ID: 54581

I've read your post, with exhaustive tests, in the "ProDigit's Guide: RTX (also GTX), increasing efficiency in Linux" thread.

ID: 54583

When the last ACEMD3 WU outage arrived, I thought it would last longer.

ID: 55094

Nice analysis of your GTX 1650 GPUs. I always enjoy your technical hardware posts.

ID: 55095
