Message boards : Graphics cards (GPUs) : Poor times with 780 ti


Hi guys, similar to TJ's post (GTX 770 vs GTX780Ti), I'm getting quite poor times for a 780 Ti compared to others.

ID: 34414

Sorry, wrong host. :)

ID: 34415

Hi JugNut,

ID: 34417

I have a Win XP disc that came with a used Dell computer I bought from a friend. I have no use for the disc; you can have it if you want it. It comes with a legal/valid key. I'll mail it to you on my dime.

ID: 34418

Thanks for your posts, guys.

ID: 34424

Hmm, you have the same settings I have. I tried that as well: I switched everything to maximum, but got no extra speed yet. The temperature then quickly rises to 82-83°C, but GPU load remains around 81%.

ID: 34425

Built a new machine with Win7 and a pair of 780 Tis: http://www.gpugrid.net/show_host_detail.php?hostid=165832

2/9/2014 10:47:56 PM | | Starting BOINC client version 7.2.33 for windows_x86_64

ID: 34973

Different types of work units utilize the GPU to different extents. The NOELIA_DIPEPT WUs don't use the GPU as much, and the boost clock drops when that is the case. To stop downclocking, set Power Management Mode in the NVIDIA Control Panel to Prefer Maximum Performance.

ID: 34974

Thank you for the suggestion. I have put app_config back in place and set `<cpu_usage>1.0</cpu_usage>`. I don't think this is the issue, since the CPU times are on par with the GPU time, Task Manager shows a solid 12-13%, and I have spare CPU cycles. I'll see tonight whether it makes any difference.

ID: 34975
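The `<cpu_usage>` value mentioned above lives in BOINC's per-project app_config.xml. As a minimal sketch, assuming the usual `<app>` / `<gpu_versions>` layout and a hypothetical application name `acemdlong` (check the `<name>` entries in your client_state.xml for the real one), the file can be generated like this:

```python
# Sketch: write a BOINC app_config.xml that budgets one CPU core per GPU task.
# Assumption: "acemdlong" is a hypothetical app name; use the <name> your
# client_state.xml lists for the GPUGRID application you actually run.
import xml.etree.ElementTree as ET


def build_app_config(app_name: str, cpu_usage: float = 1.0,
                     gpu_usage: float = 1.0) -> str:
    """Return app_config.xml text with a single <app> entry for app_name."""
    root = ET.Element("app_config")
    app = ET.SubElement(root, "app")
    ET.SubElement(app, "name").text = app_name
    gpu_versions = ET.SubElement(app, "gpu_versions")
    # gpu_usage 1.0 = one task per GPU; cpu_usage = CPUs budgeted per task.
    ET.SubElement(gpu_versions, "gpu_usage").text = str(gpu_usage)
    ET.SubElement(gpu_versions, "cpu_usage").text = str(cpu_usage)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Save this as app_config.xml in the GPUGRID project folder, then use
    # Options > Read config files in BOINC Manager (or restart the client).
    print(build_app_config("acemdlong"))
```

As far as I understand BOINC's scheduler, `cpu_usage` only changes how much CPU the client budgets per GPU task (and therefore how many CPU tasks it starts alongside); it does not change how much CPU the GPU application actually uses, which fits the observation that it made little difference here.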

Times do seem to be stabilizing on WUs with the change, but they are still at the same speed as a 680. Previously, it was showing 0.87 CPU per WU. It still seems odd, and I'll watch whether the daily work volume increases. GPU utilization is still low, at 50% on both GPUs, and the machine is currently pulling only 630 W.

ID: 34988

Jeremy, I'm running SANTI_MAR tasks on a 770 and 670 under W7 and seeing 80% GPU utilization while running 6 CPU tasks.

ID: 34996

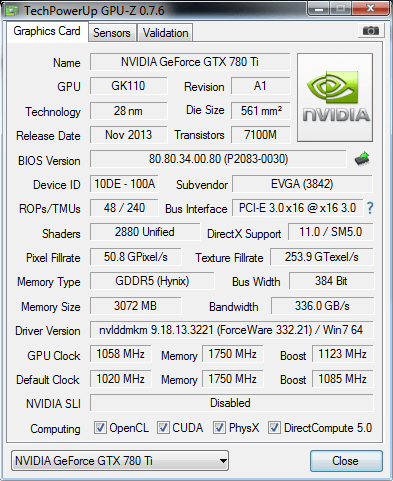

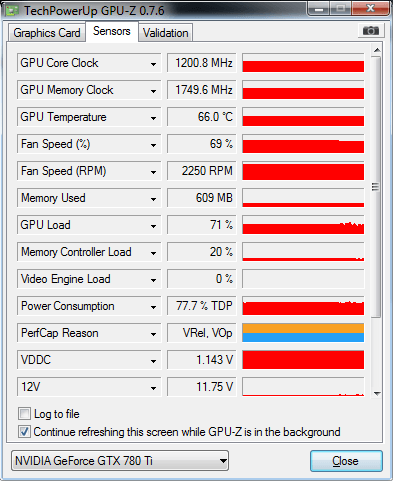

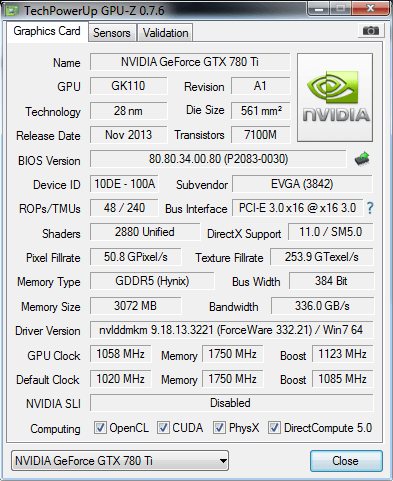

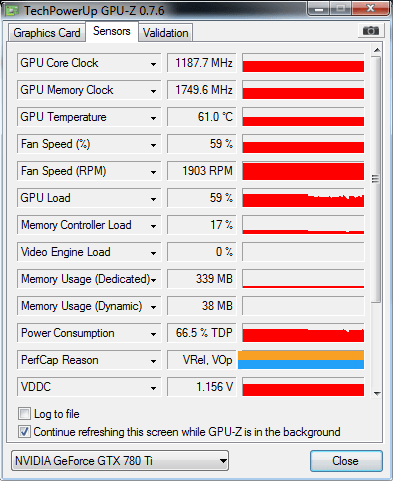

skygiven, thank you for your time and thoughts. This is just an odd situation. See my responses below.

> Jeremy, I'm running SANTI_MAR tasks on a 770 and 670 under W7 and seeing 80% GPU utilization while running 6 CPU tasks.

I am running 4 CPU tasks, 2 GPUGRID tasks and 1 Intel GPU task, and I leave a core free.

> Is SLI on?

No.

> What are the GPU clocks and what are you using to control the fan speed (MSI Afterburner for example)?

The first card is boosting to 1200 MHz at 1.161 V with 55% utilization at 63°C, and the second card is boosting to 1187 MHz at 1.174 V with 55% utilization at 59°C. I'm using EVGA Precision for fan speed control.
1st card on http://www.gpugrid.net/result.php?resultid=7769186
2nd card on http://www.gpugrid.net/result.php?resultid=7769298

> Might be worth adding a modest OC on the GPU and saving the profile; just in case the GPUs are going into a reduced power state and not recovering properly (say the GDDR5 stays low).

I have only added a 38 MHz boost with a 105% Power Target. I left the memory alone since it is at 7000 MHz.

> Did you set Prefer Maximum Performance in NVidia Control Panel? Right click on the desktop, open NVidia Control Panel, select Manage 3D settings (top left), under Global Settings (right pane) scroll down to Power Management Mode and select Prefer Maximum Performance.

Yes, it's currently at Max Performance. I have tried it both ways.

ID: 35010

Stop using the Intel GPU and compare. It's not the drivers; it's the use of the Intel GPU that's to blame, because it competes with GPUGrid's CPU requirements. The same goes for other GPU projects.

ID: 35025

skgiven, I had to check your name a little more closely. Sorry about that; you have been "skygiven" in my head the whole time I've been reading the forums. :)

ID: 35027

The times are back to normal for 780 Tis under Win7.

ID: 35046

> The times are back to normal for 780 Tis under Win7.

Hello Jeremy, those are great times you are reporting under Win7 with the 780 Ti. Can you please give some details about how you achieved this? I have been trying since last November to get run times down. Now WUs (Santi) run in around 24000 seconds, which is almost as fast as my 770. I have an Asus, not the OC version. I have downclocked per the advice here to achieve better times. The iGPU is not hampering me, as I cannot get it working at Einstein@home. What are your clock speed, memory, temperature etc.? Do you use MSI Afterburner or something else?

____________
Greetings from TJ

ID: 35077

TJ,

ID: 35085

Thank you for the information, Jeremy. It gives me something to fiddle with on my card.

ID: 35092

I have increased the GPU core clock and also the voltage a little to try to achieve the same times as Jeremy. However, once a WU has run for a few seconds the GPU clock drops to 875.7 MHz, and only very occasionally rises to 920-930 MHz for a few minutes. GPU load is 78% with Santi's. Temperature is 72°C.

ID: 35149

> I have increased the GPU core clock and also the voltage a little to try to achieve the same times as Jeremy. However, once a WU has run for a few seconds the GPU clock drops to 875.7 MHz, and only very occasionally rises to 920-930 MHz for a few minutes. GPU load is 78% with Santi's. Temperature is 72°C.

I don't think the clock drops because the voltage is too low. It drops because the temperature is too high. If you want the clock to stay at 920-930 MHz, you need to keep the temperature at or below 70°C. Increasing the voltage will increase the temperature, so if you increase the voltage and want the clocks to stay high, you have to cool the GPU better. You can decrease the voltage to help reduce the temperature, but a lower voltage might make it unstable. If you can get away with lowering the voltage then OK, but I would try to improve the cooling somehow (more fans, a lower ambient temperature, opening the case and putting a big fan there to blow lots of air in, ducting cold air into the case, whatever works).

____________
BOINC <<--- credit whores, pedants, alien hunters

ID: 35151

The temperature is currently 68°C. I cannot get it lower. This card will power itself down when it reaches 109°C, so as long as I can keep it below 80°C it should be okay. The ambient temperature will only increase as the season gradually warms.

ID: 35161

I give up.

ID: 35164

I just replaced my two 680s with two 780Tis. Only on my first run with the new cards, but so far the times seem better.

ID: 35397

> I just replaced my two 680s with two 780Tis. Only on my first run with the new cards, but so far the times seem better.

Which model, Matt?

ID: 35400

EVGA GTX 780Ti 03G-P4-2883-KR.

ID: 35401

Completed times on first WUs:

ID: 35402

> Edit: Hmm, may have jumped the gun a bit. Rebooted and now back to 1124/1137. Maybe the Nvidia preferences needed a reboot to take effect? I'll have to check again after these WUs finish.

I bet if you were to run an app that tracks and records the temperature and clock speeds over time for a few tasks, and then graphed that data, you would see that the clocks stay up until the temperature goes above a certain cutoff, and then the clock drops until the temperature falls back below that cutoff. I bet your card that is downclocking is doing so because the temperature rises above 70°C; that seems to be the temperature at which mine downclock. I've found that if I set the fan speed to, say, 60%, the temperature might sit at, say, 65°C and stay there for many minutes. If I go away for an hour and then peek at the temperature, I sometimes find it has risen by 6 degrees to 71°C, and I also find the card has downclocked. I think the temperature rises for two reasons (maybe more):

1) the temperature of the air going into the case rises for some reason (the furnace kicks in or someone closes a window, for example)
2) the simulation reaches a hard spot that works the GPU harder

The fix is to adjust the fan-speed curve, or to run software that monitors the GPU temperature, increases the fan speed when the temperature rises and decreases it when the temperature falls. The software lets you set a target temperature, which is the temperature at which you want the GPU to run. It works like a thermostat.

ID: 35409
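The "log it and look for the cutoff" idea above can be checked without drawing a graph. A minimal sketch, assuming you already have (temperature, core clock) samples logged at a fixed interval by any monitoring tool; the function names and the 20 MHz drop threshold are illustrative choices, not part of any existing tool:

```python
# Sketch: find the temperature at which a GPU starts to downclock, from
# (temperature degC, core clock MHz) samples logged by any monitoring tool.
# The 20 MHz drop threshold and the function names are illustrative choices.
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Sample = Tuple[float, float]  # (GPU temperature in degC, core clock in MHz)


def downclock_cutoff(samples: Sequence[Sample],
                     drop_mhz: float = 20.0) -> Optional[float]:
    """Lowest temperature at which the clock fell by at least drop_mhz
    since the previous sample, or None if it never fell that far."""
    drop_temps = [
        temp
        for (_, prev_clock), (temp, clock) in zip(samples, samples[1:])
        if prev_clock - clock >= drop_mhz
    ]
    return min(drop_temps) if drop_temps else None


def mean_clock_by_temp(samples: Sequence[Sample]) -> Dict[int, float]:
    """Average core clock seen at each whole-degree temperature."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for temp, clock in samples:
        buckets[int(round(temp))].append(clock)
    return {t: sum(c) / len(c) for t, c in sorted(buckets.items())}
```

If the cutoff hypothesis holds for a given card, `downclock_cutoff` should return roughly the same temperature across several tasks, and `mean_clock_by_temp` should show the clock stepping down above it.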

Temperature depends heavily on the model, the brand, and even the individual card. My primary EVGA GTX 660 runs steady at 75°C with its radial fan at maximum speed, which is 75% for this card, and it will not get any cooler. But it has been steady at 940 MHz for 6 days without a reboot. Some WUs, especially Santi's, can downclock the card a bit, but it goes up again when that WU finishes.

ID: 35422

> Temperature depends heavily on the model, the brand, and even the individual card.

I agree, and that is part of the problem with tweaking GPUs to get top performance: there are so many variables to deal with. I know I always say "We need a script to solve this", but I think if we had a script to collect temperature, clocks, % usage and various other data every second (maybe every 2 seconds) for the entire length of tasks, and then graphed that data, we would get a much better understanding of what is happening. I can do that for Linux hosts and have a possible way of doing it for Windows hosts. Storing the data and graphing it is easy, but I don't have code for reading the data from the GPU on Windows yet, just Linux.

ID: 35431
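For the logging half of such a script, one option (an assumption on my part, not the script described above) is to poll `nvidia-smi --query-gpu` once per interval and append the readings to a CSV file that gnuplot or a spreadsheet can graph. This sketch assumes an `nvidia-smi` on PATH whose query mode supports these fields for your card; older GeForce drivers may report some of them as not supported.

```python
# Sketch: log GPU temperature, SM clock and utilization to CSV via nvidia-smi.
# Assumes nvidia-smi is on PATH and supports these --query-gpu fields.
import csv
import subprocess
import time
from typing import Dict, List

FIELDS = ["index", "temperature.gpu", "clocks.sm", "utilization.gpu"]


def parse_query_output(text: str) -> List[Dict[str, str]]:
    """Turn --format=csv,noheader,nounits output into one dict per GPU."""
    rows = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        rows.append(dict(zip(FIELDS, values)))
    return rows


def sample_once() -> List[Dict[str, str]]:
    """Run nvidia-smi once and return the parsed readings for every GPU."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_query_output(out)


def log_to_csv(path: str, interval_s: float = 1.0, samples: int = 60) -> None:
    """Append `samples` timestamped readings per GPU to the CSV at `path`."""
    with open(path, "a", newline="") as fh:
        writer = csv.writer(fh)
        for _ in range(samples):
            stamp = time.strftime("%Y-%m-%d %H:%M:%S")
            for row in sample_once():
                writer.writerow([stamp] + [row.get(f, "") for f in FIELDS])
            fh.flush()  # keep the file usable even if the run is interrupted
            time.sleep(interval_s)
```

Keeping the parser separate from the subprocess call makes it easy to check against saved output, and the flat CSV can be joined with task names afterwards.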

Why not use HWiNFO and its sensor logging?

ID: 35434

> Why not use HWiNFO and its sensor logging?

I had never heard of HWiNFO for Windows until now, thanks. It looks like it might have everything one needs. If it logs the data we would want for GPUGRID tasks and is capable of composing the kind of graphs that would be useful to GPUGRID users, then it would be great. Is anybody doing it so far?

Python exposes many of the NVIDIA driver API calls, and there are graphing apps (gnuplot) that run on Windows as well as Linux. Python runs on Windows too. Using that API via Python, one could write one app that runs on Windows and Linux, logs precisely the data we want and produces exactly the graphs we want. That's easy with Python because it can also use the BOINC API to access task names and other useful data that BOINC generates and exposes via its API. That would allow you to log data and associate its graph with a task name, driver version, OS, BOINC configuration and hundreds of other types of info/data that HWiNFO might not be able to access or graph. It might or it might not; I have no idea, as I've never used it. It might be worth looking into.

ID: 35448
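To tag logged samples with the task that produced them, one route (swapped in here for illustration; it uses the `boinccmd` command-line tool rather than the BOINC API proper) is to parse `boinccmd --get_tasks`. The sketch assumes the indented `key: value` layout that tool prints (`name:`, `active_task_state: EXECUTING`, `fraction done:`); check it against your client version before relying on it.

```python
# Sketch: list running BOINC tasks so GPU log rows can be tagged by task name.
# Assumes the "N) -----------" / "key: value" layout of boinccmd --get_tasks.
import subprocess
from typing import Dict, List


def parse_tasks(text: str) -> List[Dict[str, str]]:
    """Split boinccmd --get_tasks output into one dict per task."""
    tasks: List[Dict[str, str]] = []
    for raw in text.splitlines():
        line = raw.strip()
        if line.endswith("-----------"):  # e.g. "1) -----------" starts a task
            tasks.append({})
        elif tasks and ": " in line:
            key, value = line.split(": ", 1)
            tasks[-1][key] = value
    return tasks


def running_task_names(text: str) -> List[str]:
    """Names of tasks whose active_task_state is EXECUTING."""
    return [t["name"] for t in parse_tasks(text)
            if t.get("active_task_state") == "EXECUTING" and "name" in t]


def current_running_tasks() -> List[str]:
    """Call boinccmd (assumed to be on PATH) and return running task names."""
    out = subprocess.run(["boinccmd", "--get_tasks"],
                         capture_output=True, text=True, check=True).stdout
    return running_task_names(out)
```

Calling `current_running_tasks()` alongside each GPU sample gives a task name to store in the same log row, so graphs can later be grouped per WU type (Santi, Noelia, Gianni and so on).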

I have installed and used it, but its readings differ from those of other programs that read all sorts of system information; the temperatures especially differ. I have started a thread about temperature readings, and it seems everyone has their own preferred reading program.

ID: 35449

Since your objective is to keep the GPU temperature below a limit, believe the application that reports the highest temperature. If you can keep the temperature reported by that app below the limit, then you can be quite certain it actually is below the limit.

ID: 35454

I think there are a lot of users logging data with HWiNFO, and it can draw graphs directly too. Here's one example, though it's for AMD; NV is similar:

> I have installed and used it, but its readings differ from those of other programs that read all sorts of system information; the temperatures especially differ. I have started a thread about temperature readings, and it seems everyone has their own preferred reading program.

Which exact temperatures differ? Can you please post which sensor it is and which value? Also, which other tools do you use that show different values? I believe most tools use NVAPI to read NV GPU temperatures on later families, and so does HWiNFO, so I'm really surprised that there are differences. Let me know about any issues and I'll look at them, since I'm the author of HWiNFO ;-)

BTW, I have already contacted the author of BoincTasks about an integration with HWiNFO, and he thinks it's a good idea but is currently very busy. I think this might be implemented sometime... it would definitely be interesting to see all sorts of sensor information from HWiNFO via BoincTasks.

ID: 35458

> BTW, I have already contacted the author of BoincTasks about an integration with HWiNFO, and he thinks it's a good idea but is currently very busy. I think this might be implemented sometime... it would definitely be interesting to see all sorts of sensor information from HWiNFO via BoincTasks.

You're the author, excellent :-) Integration with BoincTasks would be very handy for all BOINC volunteers. BT runs on Linux too, under Wine, so the integration would be cross-platform... perfect. I'm moving this "log data and graph it" thing to the lowest priority on my "would like to code it" list, and I intend to try HWiNFO for Linux ASAP. No, wait!! The hwinfo package for Linux is a different package! Or did you author it for Linux as well? If not, then I would be interested in collaborating with you to make a Linux version of your HWiNFO so users can have the same experience on both platforms. First I have other stuff to clear off my plate, but perhaps in a couple of months...

ID: 35462

> You're the author, excellent :-) Integration with BoincTasks would be very handy for all BOINC volunteers. BT runs on Linux too, under Wine, so the integration would be cross-platform... perfect. I'm moving this "log data and graph it" thing to the lowest priority on my "would like to code it" list, and I intend to try HWiNFO for Linux ASAP. No, wait!! The hwinfo package for Linux is a different package! Or did you author it for Linux as well? If not, then I would be interested in collaborating with you to make a Linux version of your HWiNFO so users can have the same experience on both platforms. First I have other stuff to clear off my plate, but perhaps in a couple of months...

The HWiNFO I develop is for Windows (and DOS) only. The hwinfo on Linux is a completely different thing. Porting my HWiNFO to Linux would be a really huge effort. Though I think about it sometimes, I don't believe it is going to happen in the near future.

ID: 35464

I was thinking more along the lines of me writing the Linux version and making it look and feel as much like the Windows version as possible. The collaboration part would involve very little work from you. But that's a topic for a different discussion in a different thread some time in the future. PM me if interested, or I might PM you about it in a month or so. Right now I'm just discovering the power of GKrellM for Linux. I overlooked it for a while, but today the light went on and I realized just how much it can do. It's awesome and will be included in Crunchuntu.

ID: 35469

> I was thinking more along the lines of me writing the Linux version and making it look and feel as much like the Windows version as possible. The collaboration part would involve very little work from you. But that's a topic for a different discussion in a different thread some time in the future. PM me if interested, or I might PM you about it in a month or so. Right now I'm just discovering the power of GKrellM for Linux. I overlooked it for a while, but today the light went on and I realized just how much it can do. It's awesome and will be included in Crunchuntu.

I'm not sure how you meant that, but sure, let's move this discussion out of this thread. You can PM me, or better, send a direct e-mail (you can find my address in HWiNFO)...

ID: 35472

Under Windows, NVSMI shows the GPU temps and drivers for all cards, and more info for Titans, Quadros and Teslas.

ID: 35473

> Under Windows, NVSMI shows the GPU temps and drivers for all cards, and more info for Titans, Quadros and Teslas.

There is no nvidia-smi in Linux. For Linux they ship the nvidia-settings app, which, when run from the command line with no args, starts the nvidia-settings GUI. If run with args (and there are a million possible args; just do 'man nvidia-settings' to read the manual) it exposes the driver API, which is very powerful. Or you can just click the nvidia-settings icon to open the GUI, which allows setting fan speeds if you've set Coolbits in xorg.conf. It also gives a load of info and allows other tweaks, such as the performance profile, which can be used to increase performance. What nvidia-settings does not do is let you set a target temperature, and for that reason it is not the app it could be, so I give it 4 out of 5 stars. I use calls to nvidia-settings extensively in my gpu_d script to get temperature readings and to adjust the fan speed up and down to maintain the user-specified target temperature.

ID: 35483
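A thermostat of the kind described above can be sketched as a proportional controller wrapped around two `nvidia-settings` calls. This is not the gpu_d script; the gain, the 30-100% fan limits and the function names are assumptions. `GPUCoreTemp` and `GPUFanControlState` are standard nvidia-settings attributes, but the fan-speed attribute is `GPUTargetFanSpeed` on newer drivers (older drivers used `GPUCurrentFanSpeed`), and manual fan control only works with Coolbits enabled in xorg.conf.

```python
# Sketch: one step of a thermostat-style fan controller using nvidia-settings.
# Gain, limits and names are assumptions; GPUTargetFanSpeed is the attribute
# on newer drivers (older ones used GPUCurrentFanSpeed). Needs Coolbits.
import subprocess
from typing import List


def next_fan_speed(temp_c: float, target_c: float, current_pct: int,
                   gain: float = 2.0, lo: int = 30, hi: int = 100) -> int:
    """Proportional step: more fan above target, less below, clamped."""
    proposed = current_pct + gain * (temp_c - target_c)
    return int(round(min(hi, max(lo, proposed))))


def read_temp_cmd(gpu: int = 0) -> List[str]:
    """argv that prints one GPU's core temperature as a bare number."""
    return ["nvidia-settings", "-t", "-q", f"[gpu:{gpu}]/GPUCoreTemp"]


def set_fan_cmd(pct: int, gpu: int = 0, fan: int = 0) -> List[str]:
    """argv that enables manual fan control and sets one fan's speed."""
    return ["nvidia-settings",
            "-a", f"[gpu:{gpu}]/GPUFanControlState=1",
            "-a", f"[fan:{fan}]/GPUTargetFanSpeed={pct}"]


def control_step(target_c: float, current_pct: int, gpu: int = 0) -> int:
    """Read the temperature, apply one control step, return the new fan %."""
    out = subprocess.run(read_temp_cmd(gpu), capture_output=True,
                         text=True, check=True).stdout
    new_pct = next_fan_speed(float(out.strip()), target_c, current_pct)
    subprocess.run(set_fan_cmd(new_pct, gpu), check=True)
    return new_pct
```

Calling `control_step(70, current_pct)` every few seconds from a loop nudges the fan up while the GPU is above 70°C and down while it is below; a real controller would usually add some hysteresis so the fan does not hunt.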

I have increased the voltage of my 780 Ti to 1.185 V and have not rebooted the system. At first this didn't help. The temperature is steady at 72°C with an ambient temperature of 27°C.

ID: 35532

> Which exact temperatures differ? Can you please post which sensor it is and which value? Also, which other tools do you use that show different values?

I don't know which sensors are the problem, but I get different readings everywhere when checking the CPU temperatures. Your program has a lot of information, which is great! But I have an Asus motherboard, and Asus supplies a software package with it for temperature control and readings. However, its readings are too high, according to a lot of others with the same motherboard. Then there is CPUID HWMonitor, also used by many (it gives very high readings with AMD CPUs), CoreTemp32, TThrottle and RealTemp. If I check my CPU temperature with all of these programs, the readings differ by as much as 13 degrees! So for me, as I don't have the technical knowledge, it is difficult to decide which program I can believe. "If I use the hottest I should be safe" is the main advice. That is true, but if the CPU actually runs 13 degrees colder, I could set the fan lower, which reduces noise. And I wouldn't have to shut down my rigs so often in summer when ambient temperatures reach 35°C. Therefore I need to know which program I can trust.

____________
Greetings from TJ

ID: 35533

> I don't know which sensors are the problem, but I get different readings everywhere when checking the CPU temperatures. Your program has a lot of information, which is great!

I'll explain this; maybe more users are interested in it...

I suppose you have a Core2 or similar-family CPU. These families didn't have a certain marginal temperature value programmed; it's called Tj,max. When software reads the core temperature from the CPU, the value it gets is not the final temperature but an offset from that Tj,max. So if the reading gives x, all tools compute "temperature = Tj,max - x". Now the problem is that nobody actually knows exactly what the correct Tj,max for a particular model of those families should be! Intel tried to clarify this, but caused more mess than real explanation. That is why these tools differ: each of them believes a different Tj,max is used for your CPU. But in reality, nobody knows this exactly. There have been several attempts to determine the correct Tj,max for certain models, and some folks ran large tests, but they all failed. So all of us can only guess.

The other issue with core temperatures is accuracy. If you're interested in knowing more, I wrote a post about that here: http://www.hwinfo.com/forum/Thread-CPU-Core-temperature-measuring-via-DTS-Facts-Fictions. Basically, on certain CPU families the accuracy of the temperature sensor was very bad, especially at temperatures below 50°C; so bad that you can't use it at all. That's the truth ;-) So in your case, you'd better rely on the temperature of the external CPU diode...

ID: 35534
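The relationship Mumak describes (temperature = Tj,max - x) can be written out directly. A minimal sketch in which the Tj,max candidates and the sensor reading are made-up illustration values, not data for any real CPU, and the function names are hypothetical:

```python
# Sketch: why CPU temperature tools disagree. Every tool reads the same DTS
# offset x but subtracts it from the Tj,max it *assumes* for the CPU.
# The Tj,max candidates used with this are illustration values only.
from typing import Dict, Iterable


def core_temp(tj_max_c: float, dts_offset: float) -> float:
    """Core temperature from a digital thermal sensor offset below Tj,max."""
    return tj_max_c - dts_offset


def readings_by_assumed_tjmax(dts_offset: float,
                              candidates: Iterable[float]) -> Dict[float, float]:
    """What tools assuming different Tj,max values would each display."""
    return {tj: core_temp(tj, dts_offset) for tj in candidates}


def spread(readings: Dict[float, float]) -> float:
    """Gap between the highest and lowest displayed temperature."""
    values = list(readings.values())
    return max(values) - min(values)
```

Because every tool subtracts the same reading x, the gap between any two tools equals the gap between the Tj,max values they assume, whatever the CPU is doing; a 13-degree spread like the one TJ reports could arise that way, on top of the sensor-accuracy issue.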

> The times however have increased by around 2000 seconds. So even for the crunchers with post XP there is hope :)

I hope you meant decreased :)

____________
FAQ's

HOW TO:
- Opt out of Beta Tests
- Ask for Help

ID: 35537

Thank you for the explanation, Mumak.

ID: 35552

> The times however have increased by around 2000 seconds. So even for the crunchers with post XP there is hope :)

Yes indeed, skgiven, the times are better (faster) now.

____________
Greetings from TJ

ID: 35553

TJ,

ID: 35556

Yes, thank you Matt. As I saw better times from other crunchers with the same OS, it should be possible for me too. And I like to experiment a bit, changing only a little at a time to see the results. Perhaps I'll increase the voltage by 1 or 2 mV more to see if it gets a bit better still. But so far I am happy with the results.

ID: 35560

Hi guys :)

ID: 35738

Completed this task: `<core_client_version>7.2.0</core_client_version>`

SWAN_SYNC is enabled in my environment variables... This task earned only 115,650.00 credits (I see that Windows hosts get 135,000.00 credits) :|

With the new task (long runs) I started other projects (CPU only), and now it is slow in its processing steps :( (only 3% after 30 minutes). The previous task was at ~10% (or more...) after ~20 minutes.

My system info:

Portage 2.2.8-r1 (default/linux/amd64/13.0, gcc-4.8.2, glibc-2.17, 3.13.6-gentoo x86_64)
System uname: Linux-3.13.6-gentoo-x86_64-Intel-R-_Core-TM-_i7-4770_CPU_@_3.40GHz-with-gentoo-2.2
KiB Mem: 16314020 total, 14649060 free
KiB Swap: 0 total, 0 free
Timestamp of tree: Sun, 16 Mar 2014 11:15:01 +0000
ld GNU ld (GNU Binutils) 2.23.2
distcc 3.1 x86_64-pc-linux-gnu [disabled]
ccache version 3.1.9 [enabled]
app-shells/bash: 4.2_p45
dev-lang/python: 2.7.5-r3, 3.3.3
dev-util/ccache: 3.1.9-r3
dev-util/cmake: 2.8.11.2
dev-util/pkgconfig: 0.28
sys-apps/baselayout: 2.2
sys-apps/openrc: 0.12.4
sys-apps/sandbox: 2.6-r1
sys-devel/autoconf: 2.13, 2.69
sys-devel/automake: 1.12.6, 1.13.4
sys-devel/binutils: 2.23.2
sys-devel/gcc: 4.7.3-r1, 4.8.2
sys-devel/gcc-config: 1.7.3
sys-devel/libtool: 2.4.2
sys-devel/make: 3.82-r4
sys-kernel/linux-headers: 3.13 (virtual/os-headers)
sys-libs/glibc: 2.17
Repositories: gentoo
ACCEPT_KEYWORDS="amd64"
ACCEPT_LICENSE="*"
CBUILD="x86_64-pc-linux-gnu"
CFLAGS="-O2 -march=native -pipe"
CHOST="x86_64-pc-linux-gnu"
nvidia-drivers: 331.49

[edit] After 1 h of computation, progress is at 4.8% :(

ID: 35748
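The slowdown is easier to judge as a projected run time. A minimal sketch, assuming progress grows roughly linearly with elapsed time (it only approximately does, and the rate changed when the extra CPU projects were started); the function names are hypothetical:

```python
# Sketch: extrapolate a task's total and remaining run time from its progress,
# assuming progress grows roughly linearly with elapsed time.


def projected_total_hours(elapsed_hours: float, fraction_done: float) -> float:
    """Total run time implied by the progress rate so far."""
    if not 0.0 < fraction_done <= 1.0:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_hours / fraction_done


def remaining_hours(elapsed_hours: float, fraction_done: float) -> float:
    """Time still to go if the task keeps the same rate."""
    return projected_total_hours(elapsed_hours, fraction_done) - elapsed_hours
```

With the figures in the post, 4.8% after 1 h projects to about 20.8 h in total and 3% after 30 minutes to about 16.7 h, against about 3.3 h for the previous task that reached ~10% in ~20 minutes.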

I finally got a Noelia task on my GTX 780 Ti, and it runs smoothly with a steady GPU use of 90%, which is way better than the 74% of Santi's and the 66-72% of Gianni's.

ID: 35957

> So not only is WDDM hampering the performance of the 780 Ti, but also the way a GPUGRID WU is programmed.

Your statement above makes it seem that these are separate factors, but actually they aren't. I would say that for workunits which (have to) do more CPU-GPU interaction, the performance hit from WDDM is larger.

ID: 35988
