Building a Desktop for GPUGrid

I would like to have a go at replacing my four-year-old+ Dell XPS 435 with a home-built (i.e. me-built!) system. The Dell has but one PCIe x16 socket and I have a spare GPU that's sitting in its box doing nothing.

ID: 34074

I just built a new system based on an AMD FX-8350 8-core CPU. It uses an ASRock 990FX Extreme3 with 3 PCIe slots (two @ x16 and one @ x8). I use my older GTX 560 and GT 440 cards, but only the GTX 560 crunches for GPUGRID, as the 440 is somewhat underpowered, though it crunches Milkyway and Einstein. I live in Belgium and paid 100€ for the mobo, while the CPU cost me 188€. The PSU, HDD, case and DVD drive come from my old system, so I just reused them.

ID: 34076

Here is a lot of information, if you like some reading.

ID: 34082

We can give better advice if we know what you intend to use your new system for. So far you have told us you will be using it to crunch mostly GPUgrid. What else do you intend to use it for? Do you do video/photo editing? Gaming? Develop software? Compose music (MIDI stuff or whatever they do these days)?

ID: 34085

Humble apologies! I subscribed to this thread but have yet to get any email notification of your postings! Sorry.

ID: 34199

Just checked out Amazon Germany prices.

ID: 34200

Hi Tom,

ID: 34204

You may consider the ASRock 990FX Extreme3. It costs 99.95 euros (in Belgium) and has 3 PCIe slots. It's a very decent mobo in my experience, despite its "cheaper" price. Its UEFI BIOS is also nicely laid out. That mobo unofficially supports up to 64GB RAM. I say unofficially because ASRock says it needs to bring out a new BIOS version to support that much memory, but it's only willing to do so when 16GB modules become available so it can verify they are compatible. Without a new BIOS, the mobo officially supports 32GB.

ID: 34205

Tomba, the spec says "3 x PCIe 2.0 x16 (dual x16 or x16/x8/x8)". The "dual x16" means that if you install only two x16 cards, those two slots will be assigned 16 lanes each. The "x16/x8/x8" means that if you install three x16 cards, one slot will be assigned 16 lanes but the other two will be assigned only 8 lanes each. A PCIe lane is similar to a lane on a roadway: a road with one lane can carry a certain amount of traffic, a road with four lanes can carry (theoretically) 4X more traffic, and sixteen lanes means ~16X more traffic. More PCIe lanes means more data can flow between the GPU and RAM/CPU/disk in the same amount of time. The point is this... though that mobo can accept three cards that can use sixteen lanes each, if you install three such cards only one will be able to move data at x16 speed; the other two will be reduced to half speed (x8). Pray the gurus correct me if I am wrong about that. You said you eventually want to put high-power GPUs on the mobo. The question is... how "big" or "powerful" could you go with that mobo? The future is hard to predict, but with the demands of current GPUgrid tasks, IMHO, you could install 3 X GTX 660 Ti or even 3 X GTX 680 cards and get excellent results. If you eventually want to install 3 X GTX 690, you might need all slots to run at x16 speed to prevent a bottleneck on the PCIe "bus". Probably someone with more experience should comment on the feasibility of 3 X 690 on that mobo, both now and in the future. There are mobos with four double-width x16 slots that will all run at x16 speed, but they are bloody expensive: IIRC, over $400 CDN for such a mobo. On top of that you need a CPU that has, IIUC, 4 X 16 = 64 PCIe lanes, else some slots will run at less than x16 speed. As you can imagine, the more lanes a CPU has, the more it costs. Intel CPUs with lots of lanes are bloody expensive and limited only to Haswell (?); not sure about equivalent AMD models.
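
A minimal Python sketch of the lane arithmetic above, assuming PCIe 2.0 (5 GT/s per lane with 8b/10b encoding, so about 500 MB/s per lane in each direction). It ignores protocol overhead beyond the line encoding and any PCIe switch chips, so treat the numbers as upper bounds rather than measured throughput.

```python
# PCIe 2.0 signals at 5 GT/s per lane and uses 8b/10b encoding,
# so each lane carries 5000 * 8/10 = 4000 Mbit/s = 500 MB/s per direction.
PCIE2_MB_S_PER_LANE = 5000 * 8 / 10 / 8


def slot_bandwidth_gb_s(lanes: int) -> float:
    """One-direction bandwidth of a PCIe 2.0 link with `lanes` lanes, in GB/s."""
    return lanes * PCIE2_MB_S_PER_LANE / 1000


def lanes_needed(slots: int, lanes_per_slot: int = 16) -> int:
    """Lanes required to run every slot at full width (ignores PCIe switch chips)."""
    return slots * lanes_per_slot


# Slot layouts from the board spec quoted above.
layouts = {"two cards": [16, 16], "three cards": [16, 8, 8]}
for name, widths in layouts.items():
    per_slot = ", ".join(f"x{w} = {slot_bandwidth_gb_s(w):.1f} GB/s" for w in widths)
    print(f"{name}: {per_slot}")

print("four full x16 slots need", lanes_needed(4), "lanes")
```

So in the three-card layout the two x8 cards each get half the link bandwidth of the x16 card; whether that matters depends on how much data the GPUgrid app actually pushes over the bus.
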
CPU: IMHO, a four-core CPU running at a reasonable clock speed is more than sufficient for 3 X GTX 680 running GPUgrid tasks. Again, not sure about 3 X GTX 690.

RAM on the mobo: I also doubt you need 16 GB, but RAM is relatively cheap so maybe 16 is not a bad idea. But remember the mobo has four RAM slots. You could start with 8 GB and wait for a really good deal on another 8, if you think you need it.

Cooling (rant continued): Liquid cooling isn't worth the price or hassle, IMHO. Pumps wear out, hoses leak, and if you use the wrong fluid or combination of metals the radiator corrodes and leaks... who needs that? Also, a little over a year ago there was a cruncher here who thought he had it all wrapped up with expensive liquid cooling, but he put the machine under his desk as you plan to do, Tomba. Bad move. He fried the CPU (possibly the GPU too, I can't remember) because the hot exhaust built up under his desk and the whole works overheated. Apparently the thermal protection in the GPU/CPU didn't function correctly or something like that. The good news is it kept his toes warm, for a while. Anyway, forget all the hype in the ads: a shiny new "game changing" liquid cooling system with "jaw dropping" cooling specs won't work worth a damn if the ambient temperature is too high. In places that get fairly warm in summer, the smart way to cool rigs that generate a lot of heat is to expel the hot exhaust outdoors, or at least into a different room in the house. Or, if you can afford it, crank up the air conditioner. If I were to put liquid cooling on 3 GPUs I would run the coolant lines out of the house and situate the radiator outside; otherwise it's a bad waste of good money. I would also devise a way to move the radiator indoors for the winter so the rig can help heat the house. That additional flexibility requires only two additional hose couplings.
Under no circumstances would I situate the radiator under my desk without an additional large, mains-powered fan (about 25 cm?) to move the hot air out from under the desk.

PSU: Google 'psu calculator'. I've heard the "eXtreme" site is very good, YMMV. Enter your system specs and get the answer.

Case: Think outside the box, literally. Three cards on a mobo that is oriented vertically is not a good idea, IMHO, unless you're willing to install several additional case fans. If you orient the mobo horizontally and leave the top open, you get the benefit of convection (hot air rises all on its own), which means fewer additional fans and less noise. Also, with three GPUs on one mobo I would go for cards with the single blower-type fan, which are louder but move more air than blade fans. The main benefit of blower fans is that they push the air out the back of the card and away from the other cards, rather than out to the edges of the card and onto adjacent cards as is the case with dual bladed fans. Others may disagree, but I think for a three-card setup that is very important.
____________ BOINC <<--- credit whores, pedants, alien hunters
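
The sum a PSU calculator does can be sketched in a few lines. The wattages below are assumptions taken from published TDPs (FX-8350 about 125 W, GTX 680 about 195 W each) plus a hypothetical 75 W allowance for board, RAM, drives and fans, and the 30% headroom is also an assumption. A real calculator models more factors, so let it have the final say.

```python
import math

# Illustrative loads in watts: assumptions based on published TDPs, not measurements.
EXAMPLE_LOADS_W = {
    "cpu_fx8350": 125,
    "gpu_gtx680_x3": 3 * 195,
    "rest_of_system": 75,  # hypothetical allowance for board, RAM, drives, fans
}


def recommended_psu_watts(loads_w: dict, headroom: float = 0.3, step_w: int = 50) -> int:
    """Sum the loads, add a safety margin, and round up to the next PSU size step."""
    target = sum(loads_w.values()) * (1.0 + headroom)
    return math.ceil(target / step_w) * step_w


print(recommended_psu_watts(EXAMPLE_LOADS_W), "W")
```

With these assumed figures three GTX 680s push the estimate past 1 kW, which is one reason a three-card build needs a much stronger PSU than a one- or two-card build.
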

ID: 34207

A good summary, Dagorath, but I have some comments.

ID: 34221

Good points TJ :)

ID: 34224

Many many thanks for all the insights you guys have provided. I'm grateful.

ID: 34227

An air duct: that was the word I was thinking of but could not bring to mind. So thanks, Dagorath. The current Alienware from Dell has such an air duct over the GPUs. Only two will fit, and a front fan blows directly into the duct while another fan cools the rest of the case.

ID: 34232

One more thing Tom,

ID: 34233

One more thing Tom,

Hi TJ, thank you for that. Good idea to check other suppliers in France, and I will, though there are pretty good reasons to give Amazon France the business. Except for the CPU cooler, which will come from an Amazon trader, I can put all the bits on one order. I can specify on that order to ship only when everything is available. That means they won't take my money till then, and my 30-day "Return to Amazon" guarantee starts at the same time for all components. Tom

ID: 34234

A GTX 690 has 2 processors, so it is in fact a dual card. With Windows you can only run 2 of these (4 GPUs in total). For more you need to go to Linux or something else. You should ask Firehawk how to put 3 GTX 690s in a single PC with Win7. Finally, there is a lot written about single and dual (or triple) GPU fans. I have a 770 which has two fans, so one throws heat out and the other puts it into the system. This card, however, runs GPUGRID at 63°C (or lower).

Blower-type (single radial fan) GPUs are better when there is more than 1 GPU in a closed case, as the blower-type fan blows the hot air directly out of the back of the case. The exception is the GTX 690, which has the fan in the middle of the card, so half of the heat stays in the case (unless you have some special front fans). Multi-fan (axial fan) coolers always blow the hot air inside the case (only a small amount goes out through the rear grille), so if you put several such cards in a closed case they will heat each other (the upper ones especially get hotter). I recommend not putting GPUs right next to each other: at least 1 slot should be left empty between the cards to allow adequate airflow. So you should buy a MB with 2 slots between the PCIe x16 slots (the Asus Sabertooth 990FX R2.0 is such a MB). I'm using motherboards with 4 (equally spaced) PCIe x16 slots, but I'm using only 2 of them, so I have 3 slots between the used PCIe slots, leaving 2 slots' worth of room for airflow between the cards.

ID: 34237

@TJ:

ID: 34253

@TJ: Here I read that the 660 Ti is but 4 percentage points better than the 660. Given that Amazon France's 660 is €165 and the Ti version is €255, for a 4% improvement I'll stick with the non-Ti version (methinks...). It's hardly a big performance increase, and it's not a little more money. Tom

ID: 34261

Here I read that the 660TI is but 4% points better than the 660:

I think that was quite accurate about a year ago. But since then, the work units have gotten harder. My own tests on the GTX 660 Ti indicate that it is maybe 15% faster than the 660 now. And the power usage is slightly less, so it has close to a 20% improvement in points per day per watt. I don't think that is necessarily enough to overcome the big price advantage that the 660 enjoys (about $100 in the U.S. at the moment, maybe more), but it is worth mentioning.
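The per-watt versus per-euro trade-off can be made concrete with a small sketch. Card A is the GTX 660 and card B the GTX 660 Ti; the 15% speed-up is from the test results above and the €165 / €255 prices are the Amazon France figures quoted earlier in the thread. Reading "slightly less" power as 4% less is an assumption.

```python
def gain_percent(ratio: float) -> float:
    """Turn a ratio such as 1.15 into a percentage change such as +15.0."""
    return (ratio - 1.0) * 100.0


def per_watt_ratio(speed_ratio: float, power_ratio: float) -> float:
    """Points/day per watt of card B relative to card A."""
    return speed_ratio / power_ratio


def per_euro_ratio(speed_ratio: float, price_a: float, price_b: float) -> float:
    """Points/day per euro of purchase price of card B relative to card A."""
    return speed_ratio * price_a / price_b


speed = 1.15  # GTX 660 Ti vs GTX 660, from the test results quoted above
power = 0.96  # ASSUMPTION: "slightly less" power taken as 4% less
print(f"per watt: {gain_percent(per_watt_ratio(speed, power)):+.1f}%")
print(f"per euro: {gain_percent(per_euro_ratio(speed, 165, 255)):+.1f}%")
```

Under these assumptions the Ti comes out roughly 20% ahead on running efficiency but roughly a quarter behind on purchase price per point, which is the trade-off described in the post.
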

ID: 34262

I often go looking for bargains - earlier this year I acquired 2 Crosshair IV Formula motherboards for less than the price of one more recent Crosshair V.

ID: 34267

Nearly there! Funding approved by she who must be obeyed...

ID: 34270

@tomba: Thanks for that, Dagorath. I like the idea of shorter run times but, this being my first build, I think it unwise to implement a new opsys now. And I do have a "free" copy of Win7 available, i.e. paid for long ago and written off. Perhaps you could point me at a rundown on the differences between your preferred Linux and Win7?

ID: 34271

Lovely machine, Tomba.

ID: 34273

The only changes I would make would be using Ubuntu and installing an FX-8350 CPU.

Linux is not on, yet... If I went for the FX-8350, what difference would I see for the extra €40? Tom

ID: 34275

Thanks for that, Dagorath. I like the idea of shorter run times but, this being my first build, I think it unwise to implement a new opsys now. And I do have a "free" copy of Win7 available, i.e. paid for long ago and written off.

Oh, well if you already have Windows and that's the OS you know best, then that is definitely the way to go to get it all working. You can experiment with Linux later when the time is right. And since you have a legal Windows disk, you have a lot of nice options for implementing a dual-opsys system. You can go dual-boot, which allows booting either Linux or Windows. Or you can install Linux, then install VirtualBox (free) on Linux and use your Win7 disk to create a virtual Win7 machine that runs on the host Linux opsys. That way you can have both opsys running simultaneously. You can also do the opposite: install VirtualBox on Win7 and create a virtual Linux machine running on the host Win7 opsys. This latter scenario is a very convenient way to explore Linux without having to boot back and forth between Win and Lin. People who have never experienced a virtual machine are usually surprised at how well they work and how robust they are. I use them frequently. My favorite Linux is Ubuntu, and one of the reasons (not necessarily the main reason) it is my favorite is that its founder, Mark Shuttleworth, is wealthy and doesn't mind spending the money required for development. Probably the biggest reason it is my favorite is that Shuttleworth has abandoned many of the ideas that have prevented more people from adopting Linux. He has made Ubuntu very easy to use, easier than Windows IMHO, while retaining all of Linux's traditional stability and open source concept. I could point you at various rundowns of the differences between Ubuntu and Windows, but I think you would view those the same way I do: they hype either Linux or Windows and resort to niggling little details about both.
For me there are only three important differences: Linux is free, more stable and more user friendly. All other differences grow out of those three top-level differences, again IMHO. Others present differences that are correct but so irrelevant that in the end those differences are always overridden by which opsys you are most familiar with, so what's the point of worrying about those. I like Linux because it's free and there is nobody saying... I agree Bob, your team's code would improve our opsys, good job. To keep revenues up we cannot offer that as a free update/upgrade so we'll hold it back for now and include it in the next version along with other things our loyal suckers... ooops! I mean loyal users... have been brainwashed to want, and we'll charge them ummm... errrr... hmmmm... call in the marketing people and lawyers and ask them what they think our loyal suckers... ooops! I mean loyal users... will be able to afford next time then add 25% to that. Huh? The new code hasn't been fully debugged and tested? What does that have to do with anything? If we hear any complaints about that we'll send McAfee, Norton and their crew on a free Mediterranean cruise and lay out how they will tell the world all the trouble is due to... ummmm... hmmmmm.... who is most unpopular these days... Al Qaida? that nutbar in North Korea? those holier than thou Canucks? bahh it doesn't make any difference and yes I know it's Canadonians not Canucks, the point is we'll pull a name out of a hat and blame it all on the cyber-terrorists they sponsor. It works every time, even Jobs gets away with it and everybody knows what a poofter he is. Which reminds me... how is our campaign to associate turtlenecks with homosexuality and AIDS progressing? Are our loyal suckers... ooops! I mean loyal users...
buying that nonsense yet or do we have to give a truckload of cheap turtlenecks away at the upcoming Gay Pride parade and snap pictures of them with the turtlenecks on with Gay Pride banners clearly visible in the background and plant the pics right beside ads about AIDS? You know all the above is true, extrapolate from there. You don't need my help with that.
____________ BOINC <<--- credit whores, pedants, alien hunters

ID: 34277

Hi Tom,

ID: 34283

My friend installed an SSD in his computer. Now that the initial Wow! factor has worn off he says he wishes he had bought a UPS instead. The main benefit he sees is that the machine boots extremely fast but if the machine is a dedicated cruncher it doesn't shutdown and reboot often. He claims the SSD makes little difference to his normal computer activities like web surfing, listening to music, word processing, and CAD (he's a draftsman). Sure, those files load and save a little faster but there's not much practical difference between 1 second and 5 seconds. If you save 4 seconds 50 times a day that's 200 seconds or less than 4 minutes which is nothing I would worry about.

ID: 34285

My friend installed an SSD in his computer. Now that the initial Wow! factor has worn off he says he wishes he had bought a UPS instead. The main benefit he sees is that the machine boots extremely fast but if the machine is a dedicated cruncher it doesn't shutdown and reboot often. He claims the SSD makes little difference to his normal computer activities like web surfing, listening to music, word processing, and CAD (he's a draftsman). Sure, those files load and save a little faster but there's not much practical difference between 1 second and 5 seconds. If you save 4 seconds 50 times a day that's 200 seconds or less than 4 minutes which is nothing I would worry about.

In my experience it is a very important factor that the user interface should immediately respond to the user's actions with something visible (not just a change in the shape of the mouse pointer), or the user will click again and again and again. Having an SSD and a lot of RAM (so you can turn off virtual memory) can enhance the user experience so much that the wow factor could be permanent. In this area mobile devices have always had the benefit of an SSD built in right from the start. (I had an old "tablet" PC with Win98 on a 2.5" HDD; it was a completely pointless device.)

EDIT: I have a Core i7-980X (6 cores, 2 threads on each core) and I used to crunch on it for rosetta@home. Their workunits read and write so much at startup that they kept failing (with a "no heartbeat from client" error) when the BOINC manager tried to start 8, 9 or 10 of them at once. This error could be avoided either by suspending all rosetta@home workunits before shutdown and resuming them one by one after startup, or by having an SSD.

A UPS can save you hours of messing around with restores and redoing work that got destroyed by a power failure before it got saved or backed up.

Sure it can. A UPS is highly recommended for workstations and servers. But if you don't have one, you can turn off write caching on your drive(s) in Device Manager; this will reduce the chance of data loss in case of a power failure, and it won't degrade the performance of an SSD much. If you also turn off virtual memory, it will reduce the chance of data loss even more.
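The "resume them one by one after startup" workaround can be scripted. This is a minimal sketch, not a tested tool: it assumes `boinccmd` (shipped with the BOINC client) is on the PATH and uses its `--task URL task_name resume` operation; the project URL and task names are hypothetical placeholders, and the 60-second gap is an arbitrary assumption. Suspending before shutdown would use the same command with `suspend`.

```python
import subprocess
import time
from typing import Callable, Iterable, List, Sequence, Tuple


def _run_boinccmd(cmd: Sequence[str]) -> None:
    subprocess.run(list(cmd), check=True)


def resume_staggered(
    tasks: Iterable[Tuple[str, str]],
    delay_s: float = 60.0,
    run: Callable[[Sequence[str]], None] = _run_boinccmd,
    sleep: Callable[[float], None] = time.sleep,
) -> List[List[str]]:
    """Resume suspended BOINC tasks one at a time, waiting delay_s between them."""
    issued = []
    for i, (project_url, task_name) in enumerate(tasks):
        if i > 0:
            sleep(delay_s)  # give the previous task time to finish its disk-heavy startup
        cmd = ["boinccmd", "--task", project_url, task_name, "resume"]
        run(cmd)
        issued.append(cmd)
    return issued


if __name__ == "__main__":
    # Dry run with hypothetical names: print the commands instead of executing them.
    demo = [("PROJECT_URL", "task_1"), ("PROJECT_URL", "task_2")]
    resume_staggered(demo, delay_s=0, run=print, sleep=lambda s: None)
```

Passing `run` and `sleep` in as parameters keeps the sketch easy to dry-run before letting it touch a live client.
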

ID: 34286

During the last week I tested Linux vs W7, and Linux was 12.5% faster on a GTX 770 (exact same system). XP is about as fast as Linux.

ID: 34287

@TJ: That is a 4% delta when comparing cards against what was the top GPU for GPUGrid, the Titan (now overtaken by the GTX 780 Ti), and ((55/51) × 100%) - 100% ≈ 8%; the reference 660 Ti at reference clocks is 8% faster than a reference 660. Most GTX 660 Tis boost up to ~1200 MHz and here are around 20% faster than a reference 660, as suggested. However, the theoretical performance difference between the GTX 660 and 660 Ti is 40%. The 660 Ti is somewhat bandwidth constrained compared to the GTX 660; both have the same bandwidth, but the 660 has fewer CUDA cores to feed. Obviously I can't make a performance table that includes each and every manufactured version of every card, so I just went by reference models. A few people did chip in with their cards' boost capabilities.
NVidia GPU Card comparisons in GFLOPS peak
____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help
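The two percentages in the post can be reproduced with a small helper. The 55 and 51 are the table scores quoted above (Titan = 100). The core counts (960 for the GTX 660, 1344 for the GTX 660 Ti) and the shared 144.2 GB/s memory bandwidth are the reference specifications; reading the "40% theoretical" figure as the core-count ratio is my assumption about how it was derived, and it ignores the two cards' different clocks.

```python
def percent_faster(a: float, b: float) -> float:
    """How much faster a is than b, in percent: (a / b - 1) * 100."""
    return (a / b - 1.0) * 100.0


# Relative scores from the table (Titan = 100), as quoted above.
print(f"660 Ti vs 660 (table): {percent_faster(55, 51):.1f}%")

# Reference CUDA core counts; both cards share 144.2 GB/s of memory bandwidth.
cores_660, cores_660ti, bandwidth_gb_s = 960, 1344, 144.2
print(f"660 Ti vs 660 (cores): {percent_faster(cores_660ti, cores_660):.1f}%")
print(f"cores per GB/s: {cores_660 / bandwidth_gb_s:.2f} vs {cores_660ti / bandwidth_gb_s:.2f}")
```

The last line shows the bandwidth argument: with identical memory bandwidth, the 660 Ti has 40% more cores competing for each GB/s.
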

ID: 34288

The 660Ti is somewhat bandwidth constrained compared to the GTX660; both have the same bandwidth, but the 660 has less CUDA cores to feed.

I believe you're referring to GPU memory bandwidth, correct? And I believe that restriction either decreases or disappears on the GTX 670, yes?

Regarding comments extolling SSDs... don't take this as mindless Windows bashing or a Linux plug, but I had no idea it takes 30 minutes and several reboots to install or upgrade an NVIDIA driver on Windows. On Linux an NVIDIA driver installs in about 7 minutes for me. The way I do it requires a reboot, but only because that's the only way I can find to shut down and restart X. If the preferred method worked for me I wouldn't have to reboot at all, and installs and updates would take about 5 minutes. OK, that's a compelling reason for a Windows user to have an SSD. So I'll expand on that a little, because tomba asked for a list of differences between Lin and Win. I'm not sure of the technical reason, but driver installation in general (not just the NVIDIA driver) as well as opsys updates are generally much faster and easier on Lin than on Win. Anybody who has used both opsys can verify that. Devoted Win proponents counter with the argument that Win has drivers for more devices than Lin, and that is true, but not nearly as true as it was 10 years ago. As more and more device manufacturers have come to realize that complying with open standards has a positive influence on their revenue, Linux devs have followed up with drivers for those devices, and frequently you bring home a new camera, printer or whatever, just connect it to the USB port, and 10 seconds later it's working; no need to put a disc in the DVD drive, download something or even reboot. Now that is the way Bill Gates intended Plug 'n Play to work, I think. And that's one of the reasons why I feel my claim that Linux is more user friendly is not just fanboy talk; it's real.
____________ BOINC <<--- credit whores, pedants, alien hunters

ID: 34289

I like to read all these Linux things, as I still have issues with my Linux, or better said, between Linux and me.

ID: 34291

If we had a nice Linux forum area, all things Linux could be shifted there... Alas, we don't, so all things Linux are strewn across all threads in all forums...

ID: 34293

Again, thanks everyone for your input.

ID: 34295

I like to read all these Linux things as I still have issues with my Linux or even better Linux and I.

You are correct, and I have tried to restrain myself from going too far off-topic. My apologies if I have done so, but I got the feeling tomba wanted to hear a little about Linux. Again, sorry if I've gone too far.

@skgiven An area for Linux would be nice. As for an app to control fan speed on Linux, try my gpu_d script. If there is sufficient interest I'll improve it to handle all the GPUs in a single PC and, if the backend nvidia-settings binary supports LAN, I'll make the script support that too. Or if you would be more interested in re-flashing and fixing the self-serving temperature vs. fan speed curve some manufacturers seem to be building into their cards, I can give links to what I've read about that. The only reason I haven't posted those links so far is that they're scattered all through my browser's bookmarks and it would take some time to round them all up and put them in a post here. I'll do it if anybody indicates they would find those links useful; otherwise I won't bother wasting the time. Also, I thought most everybody here already knew about re-flashing their GPU BIOS.
____________ BOINC <<--- credit whores, pedants, alien hunters
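For anyone wondering what "fixing the temperature vs. fan speed curve" means in practice, here is a hypothetical sketch of the core of such a tool: map a GPU temperature to a fan duty by linear interpolation between a few points. This is not gpu_d's actual code, and the curve points are made-up examples. On Linux the temperature can be read with `nvidia-smi --query-gpu=temperature.gpu --format=csv,noheader`; actually setting the fan goes through nvidia-settings attributes that depend on the driver version, so that part is left out.

```python
from bisect import bisect_right

# Hypothetical curve: (temperature in °C, fan duty in %), ascending by temperature.
# Illustrative values only, not taken from gpu_d or any vendor BIOS.
DEFAULT_CURVE = [(40, 30), (60, 50), (70, 70), (80, 100)]


def fan_percent(temp_c: float, curve=DEFAULT_CURVE) -> float:
    """Linearly interpolate a fan duty (%) for a GPU temperature; clamp at both ends."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return float(curve[0][1])
    if temp_c >= temps[-1]:
        return float(curve[-1][1])
    i = bisect_right(temps, temp_c)  # now curve[i-1][0] <= temp_c < curve[i][0]
    (t0, f0), (t1, f1) = curve[i - 1], curve[i]
    return f0 + (f1 - f0) * (temp_c - t0) / (t1 - t0)


for t in (35, 60, 65, 85):
    print(t, "°C ->", fan_percent(t), "%")
```

A steeper curve like this trades noise for lower temperatures, which is the opposite of the quiet-first curves the post complains about.
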

ID: 34296

You are correct and I have tried to restrain myself from going too far off-topic. My apologies if I have done so but I got the feeling tomba wanted to hear a little about Linux. Again, sorry if I've gone too far.

No, no, you are not going too far. But after a while it will be difficult to find all the bits and pieces of information. With more "dedicated" threads that will be easier.
____________ Greetings from TJ

ID: 34298

Hi Tom,

ID: 34299

Hi TJ,

The AMD CPU will come with a stock cooler. It is very easy to install and cools great. My system does 4 Rosettas and 2 GPUGRID on two 660s and runs at 48-54°C with the stock cooler. It can make a lot of noise, though, if the room temperature becomes high. During summer in France it gets even hotter than in the Netherlands. But you can try it first. The thermal paste is already applied on that cooler.

I think I'm committed to the already-purchased cooler but, if there's a decent cooler in the mobo box, I might try installing it in my i7 Dell, which makes too much CPU fan noise for me to run any Rosettas.

I would install an SSD, though, of 100 or 120 GB. Insight (a European company) now has 40% discounts on a Kingston SSD. If you can find that company in France too, you could have it for around 50 euros.

I'm convinced. I looked at Insight France but there was no deal like the one you found locally. So I went for a 120GB SSD, and cradle, from Amazon France. Tom

ID: 34306

I got the feeling tomba wanted to hear a little about Linux.

Absolutely right, Dagorath! In fact, I decided to have a go at Linux on my Win7 PC while waiting for my PC build pieces to come together. I installed and ran VirtualBox, which soon wanted an Ubuntu CD. I downloaded the 12.04.3 ISO and inserted a new 700MB RW CD for the burn. "Not enough space on the CD"; the ISO is 724,992KB. This is no place to solve my problem, but can you point me at a list I can cry on? Thanks. Tom
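The "Not enough space" message checks out arithmetically. A sketch, assuming the 724,992 KB figure came from Windows Explorer (which counts 1 KB as 1024 bytes) and that the disc is a standard 80-minute "700 MB" CD, which holds 360,000 data sectors of 2048 bytes each. Real discs can vary slightly, so this is an estimate.

```python
SECTOR_BYTES = 2048       # user data per sector on a data CD (Mode 1)
SECTORS_PER_SECOND = 75   # CD addressing: 75 sectors per second of "playing time"


def cd_capacity_bytes(minutes: int = 80) -> int:
    """Data capacity of a CD-R of the given nominal length (80 min is sold as "700 MB")."""
    return minutes * 60 * SECTORS_PER_SECOND * SECTOR_BYTES


def explorer_kb_to_bytes(kb: int) -> int:
    """Windows Explorer reports sizes in KB of 1024 bytes."""
    return kb * 1024


iso_bytes = explorer_kb_to_bytes(724_992)
capacity = cd_capacity_bytes()
print(f"ISO {iso_bytes:,} B vs CD {capacity:,} B; fits: {iso_bytes <= capacity}")
print(f"over by {(iso_bytes - capacity) / 2**20:.1f} MiB")
```

The image overshoots the disc by only a few MiB, which is why a USB stick or a DVD is the usual way around it.
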

ID: 34308

I downloaded the 12.04.3 ISO and inserted a new 700MB RW CD for the burn. "Not enough space on the CD"; the ISO is 724,992KB.

I had that problem too; I downloaded an older version and updated. Another option is to put it on a USB stick. There are plenty of forums talking about this (I find it very silly that they did this). Search "ubuntu size 700MB" and you'll find more info.

ID: 34310

Tomba,

ID: 34311

When you create a new machine in VirtualBox, it will step you through the parameters to create a new virtual machine. When it gets to the step where it asks for the install *.iso file, you should be able to point it to the file location on your hard drive.

Thanks! Did that on my 64-bit Windows 7 PC. Got this: Not easy!!

ID: 34312

I didn't pay much attention to this, but I would advise you to look into issues with GPUs on VirtualBox. I'm not very sure it will go smoothly.

ID: 34313

Hi TJ,

I'm afraid that is not going to work. The cooler delivered with the AMD CPU is made especially for AMD, and a mounting bracket with backplate is already fitted on the mobo for it. You only push a lever and it is locked tight. You can try it first, and if you like its performance you can put your new cooler on the i7.
____________ Greetings from TJ

ID: 34315

Tomba,

ID: 34318

The only thing I know to tell you is to check and make sure that virtualization is enabled in your BIOS.

No mention of virtualisation in my BIOS. Did a BIOS update. Nothing changed...

ID: 34320

I downloaded the 12.04.3 ISO and inserted a new 700MB RW CD for the burn. "Not enough space on the CD"; the ISO is 724,992KB.

They didn't do anything silly. It just looks silly because so many newbies are unfamiliar with the terms they use and get confused. I will attempt to explain. You can download 2 different kinds of ISO files from which to install Ubuntu:

ID: 34325

Tomba,

ID: 34329

Regarding the need for hardware virtualization, see "Hardware vs. software virtualization" in the official VirtualBox documentation. To summarize: you don't need hardware virtualization if the guest OS is 32-bit. If the guest OS is 64-bit, your CPU must support hardware virtualization.
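On a Linux machine, the rule above can be checked with a short sketch that looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flags in /proc/cpuinfo. Assumptions to keep in mind: the flag only shows that the CPU supports hardware virtualization, not necessarily that the BIOS has it enabled; /proc/cpuinfo does not exist on Windows; and the 32-bit exception reflects the VirtualBox versions discussed here (newer releases, 6.1 onward as far as I know, dropped software virtualization altogether).

```python
def cpu_has_hw_virt(cpuinfo_text: str) -> bool:
    """True if any 'flags' line lists vmx (Intel VT-x) or svm (AMD-V)."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags" and {"vmx", "svm"} & set(value.split()):
            return True
    return False


def can_run_guest(guest_bits: int, cpuinfo_text: str) -> bool:
    # Per the VirtualBox docs summary above (older releases):
    # 32-bit guests do not need hardware virtualization, 64-bit guests do.
    return guest_bits == 32 or cpu_has_hw_virt(cpuinfo_text)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            info = f.read()
    except OSError:
        info = ""  # not Linux, so no /proc/cpuinfo to inspect
    print("64-bit guest possible:", can_run_guest(64, info))
```
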

ID: 34332

Wow, this thread surely developed quickly! I hardly managed to catch up with new posts over the week, let alone post myself. I suppose you haven't ordered yet, Tomba? Anyway, here are my few cents:

ID: 34333

Thanks for chipping in MrS, and a special thanks for bringing this thread back on topic!

I suppose you haven't ordered yet, Tomba?

Yes I have! Here 'tis:

GPU: back when the GTX660Ti wasn't much more expensive than a GTX660 it was the obvious choice for crunchers. But now the markup on the Ti cards is far too large. You should go straight for a GTX770 (280€) if you want more than a GTX660; anything in between is too expensive for the performance.

Now there's a thought... I just emailed this link to my son with a gentle hint that Xmas is just around the corner :). If that works, I have the potential of a 770 and 2x660 for the new rig, with my spare 460 going into the current one. Perhaps that's OTT?

What's the clock speed and timing of your kit?

I have no idea :(

DVD: you don't need this if it's going to be a dedicated cruncher (install from USB and later on use another drive over the network).

No. It is not going to be dedicated. It replaces the old one, which goes to my daughter.

SSD: I recommend the Samsung 840 Evo 120 GB at 85€.

That's the one coming!

Number of GPUs: I won't put more than 1 high-performance card into my main rig, since I couldn't cool that heat silently enough with air. Two cards is still a manageable setup: you can have enough space between the cards and the PCIe connections are not really bottlenecking yet (I'm not only considering the current GPU-Grid app; it's better to err on the safe side here). At 3 cards things become challenging: you need a significantly stronger PSU and you're almost forced into blower-style coolers, which are powerful but inherently loud. Also the PCIe configurations and, on AMD, the link between Northbridge and CPU become important to consider.

Hmmm. Food for thought there, especially if my 'cunning plan', aka Baldrick, for a 770 comes off...

100€/year higher running costs for the AMD system (unless your electricity is significantly cheaper)!

The electricity tariff I'm on gives me 22 days a year when the price is 10x the normal tariff. No crunching on those days! However, for the remaining 343 days I pay half the normal tariff, so electricity usage is not a problem. Many thanks again for a fascinating rundown on crunching considerations. I'm grateful. Tom
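
Tom's tariff arithmetic can be checked quickly. Assuming the rig draws the same power on every crunching day, and that the 100€/year estimate means crunching all 365 days at the normal tariff (both assumptions), crunching only on the 343 half-price days costs a bit under half of a normal-tariff year.

```python
def relative_annual_cost(crunch_days: int, price_factor: float, baseline_days: int = 365) -> float:
    """Yearly energy cost relative to crunching every day at the normal tariff."""
    return crunch_days * price_factor / baseline_days


def scaled_extra_cost(extra_eur_per_year: float, crunch_days: int, price_factor: float) -> float:
    """Rescale a cost quoted for a normal-tariff year to this tariff."""
    return extra_eur_per_year * relative_annual_cost(crunch_days, price_factor)


# Tariff from the post: 343 days at half price; the 22 peak (10x) days are skipped.
print(f"{relative_annual_cost(343, 0.5):.1%} of a normal-tariff year")
print(f"~{scaled_extra_cost(100, 343, 0.5):.0f} EUR/year instead of 100 EUR/year")
```
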

ID: 34343

Re my plan to use the third of the three WIN 7 licences I bought ages ago, I just noticed it's the "Update" version.

ID: 34344

Re my plan to use the third of the three WIN 7 licences I bought ages ago, I just noticed it's the "Update" version.

Call off the dogs! Just ordered WIN7 Professional SP1 with COA on eBay UK for £37. Excellent feedback. Tom

ID: 34345

Hi Tomba, have fun with your new system :)

ID: 34351

Re my plan to use the third of the three WIN 7 licences I bought ages ago, I just noticed it's the "Update" version.

I also recently bought Win7 Pro on eBay and got it for £35 with COA. When it was delivered it turned out to be a Dell disc that came with an unused COA. I didn't use the disc, but the COA worked, although I did have to use phone activation to verify it.

ID: 34359

| |

| ID: 34373 | Rating: 0 | rate:

| |

|

I don't have a cc_config.xml file, which I shall need for multiple GPUs. | |

| ID: 34390 | Rating: 0 | rate:

| |

|

The cc_config.xml file goes into the BOINC data folder. | |

| ID: 34392 | Rating: 0 | rate:

| |

|

The case arrived this morning so I made a start. | |

| ID: 34396 | Rating: 0 | rate:

| |

The cc_config.xml file goes into the BOINC data folder. Thanks TJ. Noted. Tom | |

| ID: 34398 | Rating: 0 | rate:

| |

|

The connectors on the ends of the cables usually have names such as USB, HDD led, PWR, RESET, etc. stenciled on them. Your mobo's manual will tell you where each connector goes. The connectors usually are keyed, which means they can fit only one way. Where there is any doubt about polarity, the mobo manual will probably show + and - on a diagram. Red is +, black is -, white can also be + and green is sometimes -. | |

| ID: 34399 | Rating: 0 | rate:

| |

The connectors on the ends of the cables usually have names such as USB, HDD led, PWR, RESET, etc. stenciled on them. Your mobo's manual will tell you where each connector goes. The connectors usually are keyed, which means they can fit only one way. Where there is any doubt about polarity, the mobo manual will probably show + and - on a diagram. Red is +, black is -, white can also be + and green is sometimes -. Thanks for that, Dagorath. You were right. The mobo manual told me exactly what to do with all those pretty cables! The earth strap arrived 11:00 this morning so I continued the build. Google was a great help in sorting out information missing from some of the Mickey Mouse instructions. Only the mobo instructions were comprehensive. Thanks, ASUS! I chickened out on the Hyper 212 Evo CPU cooler and installed the stock cooler that came with the CPU. Time will tell if that was a bad decision. The build is done. Five hours, allowing for a quick lunch and my standard two-hour nap! I decided to leave the christening till the morning, after a thorough check that everything's in the right place. Fingers crossed... Tom | |

| ID: 34421 | Rating: 0 | rate:

| |

|

You're welcome. | |

| ID: 34422 | Rating: 0 | rate:

| |

I would watch the GPU temperature very carefully. Another good tip. Thanks! Currently copying my GPU temperature monitoring software onto a USB stick. | |

| ID: 34423 | Rating: 0 | rate:

| |

I decided to leave the christening till the morning, after a thorough check that everything's in the right place. Fingers crossed... With some trepidation I powered up this morning. Post screen, followed by BIOS screen. Checked first boot was the CD, popped in the Win 7 CD and it booted. Win 7 installed and now installing a humongous number of Windows updates. I'm amazed! It works!! :) Tom | |

| ID: 34427 | Rating: 0 | rate:

| |

I would watch the GPU temperature very carefully. Both PCs are running Santi WUs on GTX 660s:

| PC | Temp (°C) | Fan (RPM) |
|---|---|---|
| Old | 65 | 2130 |
| New | 60 | 1470 |

Looking good for New, methinks... | |

| ID: 34428 | Rating: 0 | rate:

| |

|

It looks very good for New. Congratulations! Your first build appears to be a success :-) | |

| ID: 34431 | Rating: 0 | rate:

| |

|

And congratulations from me, too: I may even try such a project in 2014.... | |

| ID: 34432 | Rating: 0 | rate:

| |

|

The new beast is now running GPUGrid with both GTX 660s, so I retired the old PC with a GTX 460. I had planned to include the 460 in the new rig, but I had forgotten it too is double width. There's no room in this case for three double-width GPUs.

1) Stepping into the unknown is always intimidating, so I took my time, double-checking as I went along and using Web searches for clarification when in doubt. No, it was not a difficult process, even for this old guy!
2) Oh yes. Up to now I had always bought Dell, and my opening bid was to check their top-of-the-line desktop: €2000. I spent a tad over half that for better function and expandability. She who holds the purse strings is delighted :)
3) Definite 'Yes' on both counts. If I can do it, anyone can. Tom | |

| ID: 34434 | Rating: 0 | rate:

| |

|

Congratulations Tom! | |

| ID: 34436 | Rating: 0 | rate:

| |

|

Had a fright last night. Got home to find the new PC off! | |

| ID: 34439 | Rating: 0 | rate:

| |

|

BOINC Notices tells me: | |

| ID: 34444 | Rating: 0 | rate:

| |

|

Always check with the documentation - in this case, client configuration. | |

| ID: 34446 | Rating: 0 | rate:

| |

I think your tag would be valid if you put it inside an <options> block. That fixed it. Thank you! Tom | |
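For reference, a minimal Python sketch of the layout that fixed it, built with the standard library's xml.etree.ElementTree: the tag only takes effect as a child of <options> inside the <cc_config> root. The file goes in the BOINC data folder, as TJ said; on a default Windows 7 install that is usually C:\ProgramData\BOINC, but treat that path as an assumption and check your own install. BOINC reads the file at startup, or when you use the Manager's "Read config files" command.

```python
# Build and check a minimal cc_config.xml in which <use_all_gpus> sits inside
# <options>, the placement that fixed the BOINC notice in this thread.
import xml.etree.ElementTree as ET


def build_cc_config(use_all_gpus: bool = True) -> ET.ElementTree:
    """Return <cc_config><options><use_all_gpus>1|0</use_all_gpus></options></cc_config>."""
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    ET.SubElement(options, "use_all_gpus").text = "1" if use_all_gpus else "0"
    return ET.ElementTree(root)


def use_all_gpus_enabled(xml_text: str) -> bool:
    """True only when <use_all_gpus>1</use_all_gpus> is a child of <options>."""
    root = ET.fromstring(xml_text)
    node = root.find("options/use_all_gpus")
    return node is not None and (node.text or "").strip() == "1"


if __name__ == "__main__":
    tree = build_cc_config()
    print(ET.tostring(tree.getroot(), encoding="unicode"))
    # To save it, copy the output into cc_config.xml in the BOINC data folder,
    # or call tree.write(path) with that folder's path (not set here).
```

The checker also catches the original mistake: a file with the tag directly under <cc_config> reports False.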

| ID: 34449 | Rating: 0 | rate:

| |

By default, BOINC will use the 'better' of two dissimilar GPUs. The BOINC manual merely says: <use_all_gpus>0|1</use_all_gpus> If 1, use all GPUs (otherwise only the most capable ones are used).

| |

| ID: 34462 | Rating: 0 | rate:

| |

|

@skgiven, | |

| ID: 34463 | Rating: 0 | rate:

| |

|

Excellent! | |

| ID: 34464 | Rating: 0 | rate:

| |

|

Well, my new rig is busy making a bigger contribution to GPUGrid. I even have the old rig upstairs running with a GTX 460… | |

| ID: 34468 | Rating: 0 | rate:

| |

By default, BOINC will use the 'better' of two dissimilar GPUs. Sorry, I tend to vary my answers - both style of writing, and depth of technical detail included - according to my perception of the needs of the questioner (and how energetic I'm feeling at the time...). Here are a couple of previous attempts at the same subject - feel free to grab either of them. SETI message 1085712 (technical, quotes source) BOINC message 42194 (interpretation) | |

| ID: 34470 | Rating: 0 | rate:

| |

|

Try ducting cool air from outside the case directly to the CPU fan. Here are a bunch of pictures of ducts in use. Notice the pics of a pop bottle with both ends cut off. Now that's my kind of modding... cheap and recycling stuff. I'll give you a link to a tool you can use to cut holes easily in cases, the tool is called a nibbler. | |

| ID: 34473 | Rating: 0 | rate:

| |

|

Nibblers: | |

| ID: 34474 | Rating: 0 | rate:

| |

Well, my new rig is busy making a bigger contribution to GPUGrid. I even have the old rig upstairs running with a GTX 460… You can experiment a bit with the fan settings in Thermal Radar or choose another preset cooling mode. Or turn the "turbo" mode off in the BIOS. As you can see, the AMD is running at a higher speed; if that is lower, it is cooler and uses less power, so the little fan doesn't have to spin as fast. You can put on another cooler if you want; the new CPU coolers from be quiet! are very good. ASUS doesn't care what you use to cool it with; it's the CPU's manual that says you need the stock cooler to keep the warranty. But if you keep the stock cooler, then there's no problem: you can send that in with the CPU if needed. But the chance that you ever need to do that is very, very small. ____________ Greetings from TJ | |

| ID: 34479 | Rating: 0 | rate:

| |

|

I shall be back here soon with a plea for help on the BIOS of this beast I've bought... | |

| ID: 34487 | Rating: 0 | rate:

| |

Has anyone had experience of squeezing three wide PSUs Make that GPUs... ____________ | |

| ID: 34488 | Rating: 0 | rate:

| |

|

620W is absolutely enough for one GTX770. | |
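A rough way to check that, and to see what the three-GPU plan would need, is to add up nominal TDPs. A minimal sketch, assuming NVIDIA's rated board power (GTX 770: 230 W, GTX 660: 140 W), a 125 W FX-8350-class CPU, a hypothetical 75 W allowance for everything else, and a rule of thumb (not a specification) that sustained load should stay at or below 80% of the PSU rating:

```python
# Rough PSU sizing by summing nominal TDPs plus a fixed allowance.
# The 75 W allowance and the 80% load ceiling are assumptions, not specs.
TDP_WATTS = {"GTX 770": 230, "GTX 660": 140, "FX-8350": 125}
OTHER_WATTS = 75         # hypothetical: board, RAM, drives, fans
MAX_LOAD_FRACTION = 0.8  # rule of thumb for sustained 24/7 load


def estimated_load(parts: list[str]) -> int:
    """Sum of nominal TDPs plus the fixed allowance for the rest of the system."""
    return sum(TDP_WATTS[p] for p in parts) + OTHER_WATTS


def psu_ok(parts: list[str], psu_watts: int) -> bool:
    """True if the estimated load fits within the chosen fraction of the PSU rating."""
    return estimated_load(parts) <= psu_watts * MAX_LOAD_FRACTION


if __name__ == "__main__":
    one_770 = ["GTX 770", "FX-8350"]
    three_gpus = ["GTX 770", "GTX 660", "GTX 660", "FX-8350"]
    for build in (one_770, three_gpus):
        print(build, estimated_load(build), "W, fits 620 W:", psu_ok(build, 620))
```

Under these assumptions one GTX 770 comes to 430 W, comfortably under 80% of 620 W (496 W), while a 770 plus two 660s comes to 710 W, which points to a PSU of roughly 900 W.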

| ID: 34489 | Rating: 0 | rate:

| |

|

Tomba, if you need help optimizing your BIOS settings on the Sabertooth MB, just let me know. I'm running 4 990FX chipset motherboards (2 Asus M5A990FX Pro R2.0 and 2 Asus Crosshair V Formula-Z). I'm going to be upgrading the other 2 to Formula-Z MBs soon because they let me run 32GB of 1866 RAM with lower settings, and they are much more stable because the memory slots have their own 4 pin power connection straight from the PSU. The PCIe slots also have their own 4 pin Molex connection too; my TDP hardly moves on my GPUs. If you're interested, just let me know; I have a tremendous amount of experience with that chipset. | |

| ID: 34490 | Rating: 0 | rate:

| |

|

TJ is right... with 3 cards in the case you're going to have a cooling problem and/or a noise problem. That's why Retvari recommended cards with radial fans if you intended to put 3 on 1 mobo. Cards with radial fans blow the heat out the back of the case. IIRC, your cards have axial fans, which blow the hot air at the card beside them or the CPU and leave the hot air inside the case.

| |

| ID: 34491 | Rating: 0 | rate:

| |

Tomba, if you need help optimizing your BIOS settings on the Sabertooth MB, just let me know. Thanks for that, flashawk. I think I need your help! Found some ASUS Windows apps, one of which gives me this opening bid: Looks like the stock cooler fan revs are high. I was wondering if I could down-clock the CPU to reduce the heat, but I have no idea how to do that. Note that fan 4 (the top fan) is not running: I managed to break a blade with my fingers while it was running (ouch!). A replacement is on its way. I was a bit surprised to see the temp difference between my two GTX 660s: 57C vs. 43C. One is brand new and short, the other 2+ years old and long. I think the old one is in PCIE-1. Do you see anything here I should worry about? Thanks, Tom | |

| ID: 34494 | Rating: 0 | rate:

| |

TJ is right... with 3 cards in the case you're going to have cooling problem and/or a noise problem. That's why Retvari recommended cards with radial fans if you intended to put 3 on 1 mobo. Please tell me about cards with radial fans. Who makes them? I did reduce the fan noise by installing the case-supplied cover that funnels air from the front fan, through the hard disks and onto the GPUs. And I reckon that if I put the PSU at the top of the case I may just have room for a third GPU. We shall see, and hear!! Tom | |

| ID: 34495 | Rating: 0 | rate:

| |

|

In the following pictures, the axial fans are those with propeller type blades, radial fans are those with squirrel cage impellers. | |

| ID: 34498 | Rating: 0 | rate:

| |

|

Hi Tomba, most of us AMD guys who have experience with the Asus software don't use it: it reads the motherboard thermistor built in to the AM3+ socket, which is not accurate and reads 10°C to 12°C too high. Programs like AMD Overdrive and HWiNFO64 are both great programs for accurate readings. In fact, the guy who wrote HWiNFO32/64 crunches here at GPUGRID. | |

| ID: 34500 | Rating: 0 | rate:

| |

|

I use the Asus software. AI Suite 3.0 for Intel-based CPUs is quite handy, with predefined fan control settings, or you can change them manually, per fan. | |

| ID: 34503 | Rating: 0 | rate:

| |

|

Hey TJ, the AMD software I linked in my previous post is made by the manufacturer of the CPU, I don't see how you could go wrong. HWiNFO32/64 gives me identical temps to AMD Overdrive; they are both reading the "on die" thermistor that is built into the CPU, and you can't get more accurate than that. The Asus software doesn't read the on-die temperatures for each core; that's one way of telling what's being read, when you see 8 different temps alongside 8 different speeds, multipliers and voltages. | |

| ID: 34504 | Rating: 0 | rate:

| |

As for the video cards, you might want to look at the "blower" type fans, they do a great job blowing all the hot air out the back of the case. This is an example of a blower card OK. I got it now!! Thanks !!! Today I ordered from Amazon France the PNY "blower" version of the GTX 770, and I persuaded them to take back my ASUS 660 and replace it with the PNY "blower" version. They even sent a no-cost-to-me mailing label for the ASUS return! What service!! I have to say that I've been very happy with ASUS GPUs over the years and I'm sorry to now be switching to another vendor. I hope it works out... ____________ | |

| ID: 34507 | Rating: 0 | rate:

| |

|

I believe you, flashawk, and I understand your ideas about the BIOS. | |

| ID: 34509 | Rating: 0 | rate:

| |

|

I don't think you understood me TJ, maybe or maybe not. The Asus AI Suite reads the temperature from a probe in the "socket" below the CPU. It's attached to the motherboard outside the CPU chip, so it will never give you an accurate CPU temperature, unless you're interested in the temperature of that dead air space under the CPU. If you remove the CPU, it's under the sliding bed that you drop the processor in to. Overdrive reads the 8 thermal probes built in to the silicon of each processor die. | |

| ID: 34511 | Rating: 0 | rate:

| |

|

Yes, I thought I understood you, flashawk, but with your latest technical explanation it is totally clear to me now. Thanks for that. | |

| ID: 34512 | Rating: 0 | rate:

| |

|

I can't remember who it was that said that knowledge is power; the more accurate information you have, the smoother the decision process is and you won't second-guess yourself. Software like AI Suite (which isn't all bad) is 90% responsible for giving OEM air coolers a bad name (AMD and Intel); people think they're junk when in reality they are adequate in a positively ventilated, well engineered case. | |

| ID: 34513 | Rating: 0 | rate:

| |

|

My - this has been a busy thread! Since I started it, here's where I'm up to... | |

| ID: 34515 | Rating: 0 | rate:

| |

My - this has been a busy thread! Because we've covered many of the things one needs to consider when building a computer for crunching. There is no single recipe to follow when building your own. There are lots of options, lots of things to consider. In exchange for doing that extra work you end up with a high performance rig at a very attractive price. And thanks to input from flashawk we will now avoid the "bad" temperature reading software and not become worried over what are basically inaccurate temperature readings. That's essential stuff when building your own. Thanks flashawk :) BTW, the expression "knowledge is power" probably grew from Diderot's, "To ask who is to be educated is to ask who is to rule." Plan then is to move the PSU to the top of the case and see if I can fit three wide GPUs in there. After all, the mobo has four PCIe slots. We shall see what transpires.... It's not a matter of luck, it's a matter of skill and knowledge, but good luck to you anyway. ____________ BOINC <<--- credit whores, pedants, alien hunters | |

| ID: 34517 | Rating: 0 | rate:

| |

And thanks to input from flashawk we will now avoid the "bad" temperature reading software and not become worried over what are basically inaccurate temperature readings. That's essential stuff when building your own. Thanks flashawk :) BTW, the expression "knowledge is power" probably grew from Diderot's, "To ask who is to be educated is to ask who is to rule." Ya, the deal with those temp readings really throws a wrench in people's plans and pushes them to throw money at something when it could be better spent on other components. It's like teaching yourself to let off the brakes when you're doing 360s in the snow so you can regain control; some people just can't let go of it (some won't either). "Diderot", that's it! Or him. It sucks getting old, I'm starting to forget stuff. I missed you last summer Dagorath, where did you disappear to? We missed you stirring things up around here, our discussions about cheaters on points and which BOINC application gives the most points. I'd better not get too far off topic; the mods do a good job giving out tech advice too. This is actually one of the better GPU forums and we don't do gaming (or at least talk about it much). Edit: I like your new quote, it's deep. | |

| ID: 34519 | Rating: 0 | rate:

| |

|

I'm basically retired but I have mineral claims. To make a long story short, some claim jumpers tried to move in. Things got ugly. The leader went to jail but kept giving me grief. Then someone burned his property to the ground, they arrested me for it and refused bail. In jail I had a "talk" with the claim jumper and convinced him to never even think of setting foot on one of my claims again. See what happens in jail pretty much stays in jail as long as you make a reasonable effort to not put the guards in a position where they have to do their job and report stuff. It couldn't have worked out better vis a vis stopping the claim jumpers if I had planned it that way. | |

| ID: 34523 | Rating: 0 | rate:

| |

|

You're blowing my mind over here. I grew up on a mining claim in the Central Sierras and the foothills (49er country). My family were all hard rock and hydraulic miners on my father's side. We had a 1944 Dodge Power Wagon we drove 32 miles in to where we had a tarpaper shack, tack room, feed shed, lunging stable and stock pens with 2 quarter horses, 1 thoroughbred, 2 appaloosas, 1 dun and a Surrey plus 8 mules and 2 burros. All 6 of us kids (me and 5 sisters) would sandbag the creek, and he had a 4" floating dredge, and the slurry got pumped in to a 6 stage sluice box with carpeting in it. | |

| ID: 34525 | Rating: 0 | rate:

| |

|

The TV clicker? There wasn't one because it would have batteries in it and batteries can be rigged to light cigarettes, joints or start fires. | |

| ID: 34526 | Rating: 0 | rate:

| |

|

Well - the PNY GTX 770 idea was a pig's breakfast. Should have been with me two days ago but... | |

| ID: 34552 | Rating: 0 | rate:

| |

|

Try turning the fan speed up! | |

| ID: 34555 | Rating: 0 | rate:

| |

Try turning the fan speed up! I think I might need help on how to do that...:) | |

| ID: 34556 | Rating: 0 | rate:

| |

|

In the big controller box that says "Precision", at the bottom is the fan speed controller. Click on "Auto" to switch it over to manual and slide it to 100%, or however high it will allow it to go. Let the temperature settle down, then start working it back down in increments until you find a balance between cooling and noise that suits you. | |

| ID: 34557 | Rating: 0 | rate:

| |

In the big controller box that says "Precision", at the bottom is the fan speed controller. Click on "Auto" to switch it over to manual and slide it to 100%, or however high it will allow it to go. Let the temperature settle down, then start working it back down in increments until you find a balance between cooling and noise that suits you. That "Apply at Windows start up" fixed it! After the boot I could drag the slider. Great! Many thanks for the heads-up. | |

| ID: 34559 | Rating: 0 | rate:

| |

|

It was a pig's breakfast but don't deal with it by creating another pig's breakfast. | |

| ID: 34560 | Rating: 0 | rate:

| |

|

For reference about high GPU temps... | |

| ID: 34573 | Rating: 0 | rate:

| |

|

Thread starter here... | |

| ID: 34621 | Rating: 0 | rate:

| |

|

Yikes! I thought the space between the slots was discussed upthread before you bought the board. Yes, that definitely is an issue. My advice at this point would be to search for a board with adequate spacing and if the price is acceptable return yours. | |
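Spacing can be checked on paper before swapping boards. A minimal sketch, assuming positions are the case's expansion-slot indices counted from the top (0 = first slot), that each card is two slots wide with its cooler hanging into the slot below, and that the case has the seven expansion slots of a standard ATX case. The slot positions in the example are hypothetical; read yours from the motherboard manual.

```python
# Check whether double-width GPUs fit in a given set of slot positions.
# Positions are expansion-slot indices from the top of the case (0-based);
# a card at position p is assumed to occupy p and the slot below it.


def occupied(position: int, width: int = 2) -> set[int]:
    """Slots covered by a card's bracket and cooler."""
    return set(range(position, position + width))


def layout_fits(positions: list[int], case_slots: int = 7, width: int = 2) -> bool:
    used: set[int] = set()
    for p in positions:
        cells = occupied(p, width)
        if max(cells) >= case_slots:  # cooler would hang past the last case slot
            return False
        if used & cells:              # overlaps a neighbouring card's cooler
            return False
        used |= cells
    return True
```

Cards at positions 0, 2 and 4 fit; adding a fourth at position 6 needs an eighth case slot for its cooler, so `layout_fits([0, 2, 4, 6])` is False unless you pass `case_slots=8`.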

| ID: 34622 | Rating: 0 | rate:

| |

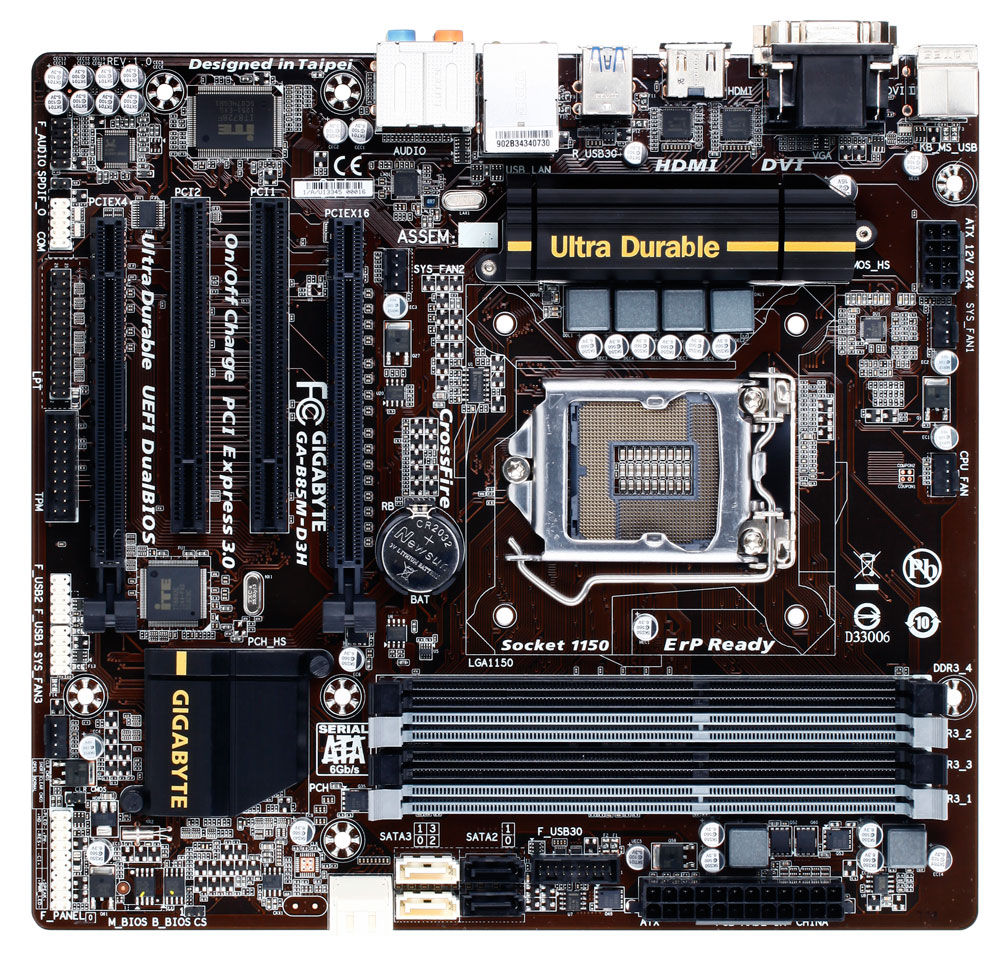

Thread starter here...

You have three options:
1. Install water blocks on your GPUs, and they will fit in single slots.
2. Use two top-end GPUs (at the moment: GTX 780Ti).
3. Use a different motherboard. I've checked the ASUS website, and I didn't find any AMD motherboards which could accommodate four double-slot GPUs. But I've found a Gigabyte MB which does: GA-990FXA-UD7

2. The case (Cooler Master HAF932 Advanced) does not help with the PCIe problem. It has but seven PCI slots:

The ATX standard provides only 7 expansion slots. 8-slot-wide ATX cases are very rare, mostly custom-made (for example, the one mentioned in this post). If I were running a host with four air-cooled GPUs, I wouldn't put it in a case at all. It is very hard (loud) to dissipate 1kW from a PC case while sustaining low component temperatures with air cooling. Higher temperatures shorten the lifetime of the components. I do not recommend four GPUs on a single MB.

3. GTX 770s were recommended here. I had one for a few days. Its GPUGrid performance was 1.4x the 660s. At twice the price I passed.

The price of the cards is *not* in direct ratio to their performance. You always have to pay extra for the top-end cards. The 770 is 68% faster than a 660, in theory. The faster the GPU, the harder its performance will be hit by the WDDM overhead of Windows 7 (Vista, 8, 8.1) (depending on the workunit batch). To fully utilize the performance of fast GPUs, you should run them on an OS which does not have the WDDM overhead (Windows XP, Linux), and you have to leave one CPU core free of CPU tasks for each GPU. Besides, in the long term it is much better to have two 770s in a single PC than four 660s. The cooling of these cards is *not* made for four-way crunching 24/7. As time goes by, new (faster) GPUs will be released and workunits will get longer, so the 770 is more future-proof than a 660.
BTW I think that the new Maxwell series GPUs (to be released later this year) won't have that WDDM overhead, or not that much. | |
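The trade-off Retvari describes shows up once price and slot count are both taken into account. A minimal sketch using only the thread's figures, with a GTX 660 as the 1.0 baseline: Tom's measured 1.4x for a 770 at about twice the price, and the 68% theoretical figure. Treating both cards as double-slot is an assumption.

```python
# Compare cards on throughput per unit price and per expansion slot,
# relative to a GTX 660 = 1.0. Figures come from this thread; the
# double-slot width for both cards is an assumption.
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    perf: float   # throughput relative to a GTX 660
    price: float  # price relative to a GTX 660
    slots: int = 2

    def perf_per_price(self) -> float:
        return self.perf / self.price

    def perf_per_slot(self) -> float:
        return self.perf / self.slots


GTX_660 = Card("GTX 660", perf=1.0, price=1.0)
GTX_770_MEASURED = Card("GTX 770 (measured)", perf=1.4, price=2.0)
GTX_770_THEORY = Card("GTX 770 (theoretical)", perf=1.68, price=2.0)

if __name__ == "__main__":
    for card in (GTX_660, GTX_770_MEASURED, GTX_770_THEORY):
        print(f"{card.name:24} per price {card.perf_per_price():.2f}"
              f"  per slot {card.perf_per_slot():.2f}")
```

On price alone the 660 wins (1.00 against 0.70 to 0.84). When slots, and therefore cooling, are the limit, the 770 delivers 0.70 to 0.84 per slot against 0.50.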

| ID: 34623 | Rating: 0 | rate:

| |

|

Yes we told you that 4 GPU's would be a problem. Not only for fitting on the MOBO but also for heat. | |

| ID: 34624 | Rating: 0 | rate:

| |

It is not possible to build an "ideal" system cheap, but others have other ideas about that. I think you can build an "ideal" and well cooled system cheap and cool it very well with air alone. You might have to think outside the box and build some of the components yourself but it's doable. ____________ BOINC <<--- credit whores, pedants, alien hunters | |

| ID: 34625 | Rating: 0 | rate:

| |

|

I had my hand slapped on another thread for not using my spare CPU threads on another worthwhile BOINC project. So I'm now running six Rosettas. With the two GPUGrids, the eight CPU cores are committed. | |
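Six CPU tasks plus two GPU tasks on eight cores is the same split you get from BOINC's "use at most N% of the processors" preference when one core is kept free per GPU, as Retvari advised above. A minimal sketch of picking N, assuming BOINC truncates cpus × N / 100 to a whole number; how the GPU tasks' own CPU use is counted depends on what the app declares, so check the running task count in BOINC Manager afterwards.

```python
# Pick a "use at most N% of the processors" value that leaves one logical
# CPU per GPU free to feed it. Assumption: BOINC truncates cpus * N / 100.
import math


def usable_cpus(logical_cpus: int, percent: int) -> int:
    """CPUs available to CPU tasks under the truncation assumption."""
    return max((logical_cpus * percent) // 100, 1)


def percent_leaving_gpu_cores(logical_cpus: int, gpus: int, cores_per_gpu: int = 1) -> int:
    """Smallest whole percentage that still allows every non-reserved CPU."""
    cpu_tasks = max(logical_cpus - gpus * cores_per_gpu, 1)
    return math.ceil(cpu_tasks * 100 / logical_cpus)
```

For eight cores and two GPUs this gives 75%, which leaves six CPUs for tasks such as Rosetta.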

| ID: 34682 | Rating: 0 | rate:

| |

|

I didn't mean to slap your hand :-p. | |

| ID: 34683 | Rating: 0 | rate:

| |

|

Hi Tom, | |

| ID: 34687 | Rating: 0 | rate:

| |

|

If your CPU's max temp is 100*C then 64*C is a pretty decent CPU temperature. If you aren't bothered by fan noise, and your GPUs stay below 70*C and you can maintain those temperatures in the summer then I don't see much point in trying to improve anything. | |

| ID: 34688 | Rating: 0 | rate:

| |

|

Thanks for the response, TJ. I have the back fan connected to CHA_FAN4 as Asus recommend. I missed that. Tomorrow morning I'll check that the rear fan is on CHA_FAN4. Have you made any settings for the fans in thermal Radar? No! I had thought I had to get into the BIOS to do that. Nice to know I can do it in Windows!! For each connector on the MOBO and thus fan, you can set a scheme. Not so in my version of Thermal Radar; 1.01.29. #4 I can set individually, but the other three are in one group. I set the standard scheme for all three options; fan4, fans1-3 and the CPU fan. When I get in there tomorrow I shall also try Dagorath's suggestion (thanks, Dagorath!) to disable the side and top fans so I have an uninterrupted cool air path from front to back. That makes sense since the case came with a funnel that directs air to the GPUs, both of which vent out the back. Then I shall play with Standard vs. Turbo on the CPU and front and rear fans and see if I can optimize CPU core usage (yesterday I went to bed with all eight cores active and this morning I found an ASUS warning message: CPU temperature 65C) | |

| ID: 34690 | Rating: 0 | rate:

| |

|

That warning from Asus might not be cause for concern. Probably there is a setting in the Asus software that allows you to specify the temperature at which it will issue the warning, and probably it's set to an arbitrary default of 65*C by Asus (assuming you haven't adjusted it yourself). 65 may or may not be appropriate for your CPU. What you need to do, since you are now officially a certified system builder :-) is find the specifications for your CPU and see what the official maximum operating temperature is. You don't want to set the Asus alarm at that temperature because that is the temp where serious damage can start to occur. I like to keep my gear running no higher than 30*C below the official max, while others say 10*C is good enough for them. The decision is yours, but if I were you I would set the Asus alarm at 20*C below max and strive to keep the temp 30*C below max. The point is you don't want the alarm going off when the CPU is not really in danger, but you don't want it to wait until it's at the very brink of meltdown either; somewhere in between. Also, as flashawk pointed out a while ago, it depends on which sensor(s) the Asus software is reading. Complicated? Yes, but that's the life of a system builder :-) | |
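Dagorath's rule of thumb translates directly into two thresholds. A minimal sketch, assuming you have looked up your CPU's official maximum (the 100°C used below is only the illustrative figure from the earlier post, not your CPU's spec) and using his suggested margins: alarm at 20°C below max, target at 30°C below max.

```python
# Alarm and target thresholds derived from a CPU's official maximum
# temperature, using the 20 C / 30 C margins suggested in this thread.


def thermal_plan(max_temp_c: float, alarm_margin: float = 20.0,
                 target_margin: float = 30.0) -> dict[str, float]:
    if target_margin < alarm_margin:
        raise ValueError("the target must sit below the alarm threshold")
    return {
        "max": max_temp_c,
        "alarm": max_temp_c - alarm_margin,
        "target": max_temp_c - target_margin,
    }


def status(temp_c: float, plan: dict[str, float]) -> str:
    """Classify a reading against the plan."""
    if temp_c >= plan["max"]:
        return "critical"
    if temp_c >= plan["alarm"]:
        return "alarm"
    if temp_c > plan["target"]:
        return "above target"
    return "ok"
```

With a 100°C maximum the alarm lands at 80°C and the target at 70°C, so the 64°C reading mentioned earlier is "ok".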

| ID: 34692 | Rating: 0 | rate:

| |

|

More reference: | |

| ID: 34693 | Rating: 0 | rate:

| |

The 660 Ti was literally at 99*C, and still chugging, despite a ridiculous 40% fan value. I think I got lucky that it survived. Ouch! Mine have spiked up above 80°C on occasion but never that high. My gpu-d script monitors GPU temps and controls the fan to maintain a target temperature. I just added a feature that suspends crunching if the temp somehow gets out of control and goes above 80°C. It runs only on Linux at the moment, but yesterday I found Python bindings to NVML (NVIDIA Management Library) which will allow the script to work on Windows too. It could solve some of the problems with the SANTI tasks if I added a feature to downclock the GPU when it sees a SANTI task come in and restore the clock to normal for tasks that don't need downclocking. It could tweak voltage too. ____________ BOINC <<--- credit whores, pedants, alien hunters | |
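The gpu-d script itself isn't posted in this thread, so here is a hypothetical sketch of the control logic only. Each reading nudges the fan speed in proportion to the error from the target (integral-style control), and GPU work is flagged for suspension above 80°C until the card cools back to the target. The gain and thresholds are illustrative values. On real hardware the reading could come from NVML (pynvml's nvmlDeviceGetTemperature) and suspension could be done with boinccmd --set_gpu_mode never / auto; neither is called here.

```python
# Hypothetical control logic for a gpu-d-style monitor (not the real script).
# Fan speed is nudged by gain * (temp - target) on every reading, clamped to
# [min_fan, max_fan]; GPU work is suspended above suspend_c and resumed once
# the temperature falls to resume_c (hysteresis avoids rapid toggling).
from dataclasses import dataclass


@dataclass
class FanController:
    target_c: float = 70.0
    suspend_c: float = 80.0
    resume_c: float = 70.0
    gain: float = 5.0        # fan % added per degree above target, per step
    min_fan: float = 30.0
    max_fan: float = 100.0
    fan: float = 40.0        # current fan speed in %
    suspended: bool = False

    def step(self, temp_c: float) -> tuple[float, bool]:
        """Feed one temperature reading; return (fan %, suspend GPU work?)."""
        if temp_c > self.suspend_c:
            self.suspended = True
        elif self.suspended and temp_c <= self.resume_c:
            self.suspended = False
        error = temp_c - self.target_c
        self.fan = min(self.max_fan, max(self.min_fan, self.fan + self.gain * error))
        return self.fan, self.suspended


if __name__ == "__main__":
    ctl = FanController()
    for reading in (70, 74, 82, 75, 68, 60):
        print(reading, ctl.step(reading))
```

For the readings 70, 74, 82, 75, 68 and 60 the fan goes 40, 60, 100, 100, 90, 40%. Work is suspended at 82°C and stays suspended at 75°C, and it resumes at 68°C.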

| ID: 34694 | Rating: 0 | rate:

| |

If some of your fans don't seem to be affected by settings in the software or don't have RPM readings then they might be fans that don't have a tachometer in them and don't allow speed control (they run at one speed). You can tell by counting the wires leading to the fan. Every fan has at least 2 wires for power, black is usually -, red is usually +. If it has only 2 wires then it has no tachometer and no speed control. If it has a third wire (usually white or yellow but could be other colors too) then that wire is usually the PWM speed control wire. If it has a 4th wire (usually blue) that wire is usually the tach wire. If it doesn't have a tach or PWM wire then any numbers you see in the software for that fan are bogus, ignore them.

Actually the 3rd wire is the signaling wire for the tachometer, and in that case RPM control is done by applying PWM to the + wire, or by lowering the voltage on the + wire. The 4th wire is the PWM control wire. You can connect more than one (4-pin) fan to such a fan connector, provided that only one of them is connected to the 3rd (signaling) wire: the system can then monitor only that fan's RPM, but it can control the RPM of all the fans connected to that single connector through the 4th wire. | |
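Retvari's correction matches the usual 4-pin fan convention. A minimal sketch of the pinout and of turning tach pulses into RPM, assuming the common two pulses per revolution (check your fan's datasheet if readings look doubled or halved):

```python
# Standard PC fan header pinout and tach arithmetic (4-wire PWM convention):
# pin 3 carries the tach signal, pin 4 is the PWM control input on 4-pin fans.
FAN_PINOUT = {
    1: "GND",
    2: "+12 V",
    3: "tach (sense) output",
    4: "PWM control input",  # present on 4-pin fans only
}
PULSES_PER_REV = 2  # assumption: the usual two tach pulses per revolution


def rpm_from_pulses(pulses: int, seconds: float) -> float:
    """Convert tach pulses counted over `seconds` into revolutions per minute."""
    return pulses / PULSES_PER_REV / seconds * 60


def fan_capabilities(wires: int) -> dict[str, bool]:
    """What the board can do with a fan that has this many wires."""
    return {
        "rpm_reading": wires >= 3,  # needs the tach wire on pin 3
        "pwm_input": wires >= 4,    # 3-wire fans are slowed via the +12 V line instead
    }
```

For example, 49 pulses in one second works out to 1470 RPM. When several 4-pin fans share one header, only the fan whose tach wire is connected is measured, while all of them follow the PWM signal.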

| ID: 34695 | Rating: 0 | rate:

| |

|

I got in there this morning. Moved the rear fan cable to CHA_FAN4 (it was on #2), and disconnected the top and side fans. | |

| ID: 34698 | Rating: 0 | rate:

| |

|

About 30 minutes ago I put the tower back in its hole. The thermal radar CPU temp has dropped from 61C to 59C and the AMD thermal margins are up to 20C. | |

| ID: 34699 | Rating: 0 | rate:

| |

|

Tomba, your "ASUS warning message: CPU temperature 65C" may be a Bios setting. If so, you can probably set it to a higher level or disable the warning in the Bios. | |

| ID: 34700 | Rating: 0 | rate:

| |

Note in my last post that the two thermal radar PCIe temps are 11C apart. They still are. The two GPUs are identical. Any thoughts? What exactly are "PCIe temps"? Are those the temps reported by sensors situated on the mobo close to the PCIe slots? Or are they temps reported by sensors on the GPUs in the slots? If the latter then I would say... If the target temperature is the same on both cards (and I would not just assume that it is) then the hotter one is either starving for cool air or its fan is defective and isn't spinning as fast as it should. By "starving for cool air" I mean either the air it's receiving is too hot to allow the card to maintain the target temp or the airflow is somehow restricted. ____________ BOINC <<--- credit whores, pedants, alien hunters | |

| ID: 34701 | Rating: 0 | rate:

| |

Note in my last post that the two thermal radar PCIe temps are 11C apart. They still are. The two GPUs are identical. Any thoughts? I think they are mobo sensors, thus:  Tomorrow I'll get in there and check the airflow and perhaps switch the GPUs round to see if there's any difference. | |

| ID: 34703 | Rating: 0 | rate:

| |

About 30 minutes ago I put the tower back in its hole. The thermal radar CPU temp has dropped from 61C to 59C and the AMD thermal margins are up to 20C. One GPU is always hotter; I have seen that in all my rigs with two GPUs. It's the primary one that is hotter, whether the cards are the same type/brand or different. I have two top fans blowing air out and 1 side fan pulling air in, and have a CPU at 55°C and PCIe at 48 and 43°C. Same MOBO and CPU. And the case closed. ____________ Greetings from TJ | |

| ID: 34704 | Rating: 0 | rate:

| |

Use a different motherboard. I've checked the ASUS website, and I didn't find any AMD motherboards which could accommodate four double-slot GPUs. But I've found a Gigabyte MB which does: GA-990FXA-UD7 Now there's a thought! Well spotted!!! That would let me add the GTX 660 that's upstairs, running in my old rig, to my new rig, and would give me the expansion capability I was looking for originally. I'm still within the Amazon 30-day return window. I'm tempted... :) | |

| ID: 34705 | Rating: 0 | rate:

| |

Tomorrow I'll get in there and check the airflow and perhaps switch the GPUs round to see if there's any difference. You might notice a difference but my bet is that you won't. I think the difference is more likely due to some warm running chip/component on the mobo close to the sensor near the hotter slot. Switch the cards if you want to verify, but I wouldn't worry about the one temp being higher than the other. As for TJ's report that one GPU is always hotter than the other... I have noticed the same thing and, like he says, it's always the one in the primary slot. That's most likely due to heat from the card in the slot below it being sucked into the primary card's fan. Rear exhaust fans reduce that problem significantly but don't eliminate it completely, because there is still radiant heat emanating from the secondary card. I have the same problem with 2 of my cards, and when I have spare time I am going to build a custom intake duct that fits between the 2 cards and pushes lots of cool air between them as well as into the intake fans. But first I have to replace the mobo those cards are on, because my cat murdered it yesterday by dragging its little catnip-scented toy into the custom cabinet I am building and dropping it on the mobo. I checked it with my ohmmeter and the ribbon on the toy is very conductive! He went in through a temporary opening I had not blocked because I thought he would never be able to reach that opening. I think he must have leapt off the TV, which is amazing because the TV is about 1 meter away and the hole is pretty small. I found him there crying because he couldn't figure a way to get down, lol. ____________ BOINC <<--- credit whores, pedants, alien hunters | |

| ID: 34706 | Rating: 0 | rate:

| |

Use a different motherboard. I've checked the ASUS website, and I didn't find any AMD motherboards which could accommodate four double-slot GPUs. But I've found a Gigabyte MB which does: GA-990FXA-UD7 I wouldn't plan on putting 4 GPUs on that mobo. They'll fit but I've been reading reports and info that indicate those mobos can't supply the current required by some video cards through the PCIe slot. The fact that the online stores I use here in Canada seem to be not re-stocking those boards kind of corroborates the notion they can't handle a big load through the PCIe slots. It might not even be able to handle 3 cards. Retvari and I were discussing it in my "What to build in 2014?" thread. ____________ BOINC <<--- credit whores, pedants, alien hunters | |

| ID: 34707 | Rating: 0 | rate:

| |

I wouldn't plan on putting 4 GPUs on that mobo. They'll fit but I've been reading reports and info that indicate those mobos can't supply the current required by some video cards through the PCIe slot. Is it not the case that today's GPUs are powered from the PSU, not the mobo? | |

| ID: 34708 | Rating: 0 | rate:

| |

|

I have a problem. | |

| ID: 34709 | Rating: 0 | rate:

| |

I have a problem. It can be clearly seen from the GPU clock reading on the lower EVGA Precision display that your GPU is downclocked to 324 MHz. This is a safety feature of the GPU (driver), and it can be reset back to normal only by a system restart. BTW, in that case you don't have to abort the workunit; a system restart will restore its original processing speed. To avoid such a situation in the future, you need to lower your GPU clock slightly (or increase the GPU voltage slightly). | |
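To catch this kind of stuck downclock without watching the Precision display, one option is to poll the clocks from a script. This is only a sketch, not something posted in this thread. It assumes NVIDIA's `nvidia-smi` tool is installed and on the PATH, and that your driver/card combination reports the `clocks.sm` field (some GeForce drivers answer "[Not Supported]" for certain fields, which the parser below skips). The 500 MHz threshold is a hypothetical value chosen to sit well above the 324 MHz reading mentioned here and well below a 660's normal clocks. Only check while a workunit is running, since an idle GPU also drops to low clocks.

```python
import subprocess

# Hypothetical threshold: well above the 324 MHz "stuck" clock reported in this
# thread and well below a GTX 660's normal ~1000+ MHz under load.
DOWNCLOCK_THRESHOLD_MHZ = 500

# Assumes nvidia-smi is installed and on the PATH.
QUERY = ["nvidia-smi", "--query-gpu=index,clocks.sm",
         "--format=csv,noheader,nounits"]


def parse_sm_clocks(csv_text: str) -> dict[int, int]:
    """Turn lines like '0, 324' into {gpu_index: sm_clock_mhz}.

    Lines whose clock is not a plain number (e.g. '[Not Supported]')
    are skipped rather than guessed at.
    """
    clocks: dict[int, int] = {}
    for line in csv_text.strip().splitlines():
        if "," not in line:
            continue
        index_str, clock_str = (field.strip() for field in line.split(",", 1))
        if index_str.isdigit() and clock_str.isdigit():
            clocks[int(index_str)] = int(clock_str)
    return clocks


def downclocked_gpus(clocks: dict[int, int],
                     threshold: int = DOWNCLOCK_THRESHOLD_MHZ) -> list[int]:
    """Return the indices of GPUs whose SM clock is below the threshold."""
    return sorted(i for i, mhz in clocks.items() if mhz < threshold)


def check_once() -> list[int]:
    """Run nvidia-smi once and return the GPUs that look downclocked.

    Only meaningful while crunching: an idle GPU also drops to low clocks.
    """
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return downclocked_gpus(parse_sm_clocks(result.stdout))
```

For example, `parse_sm_clocks("0, 324\n1, 1110")` gives `{0: 324, 1: 1110}`, and `downclocked_gpus` on that result returns `[0]`. Calling `check_once()` from a scheduled task and treating a non-empty result as the cue to restart the system is one way to use it.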

| ID: 34710 | Rating: 0 | rate:

| |

I have a problem. Thanks for responding, Retvari. I rebooted. No change. I reinstalled the Nvidia driver. No change. you need to lower your GPU clock slightly (or increase the GPU voltage slightly). Help me out on how to do that please... | |

| ID: 34711 | Rating: 0 | rate:

| |

|

Are you sure the 41°C GPU is actually loaded? It looks like it isn't. | |

| ID: 34712 | Rating: 0 | rate:

| |

Are you sure the 41°C GPU is actually loaded? It looks like it isn't. Thanks for responding, Jacob. After working out how EVGA Precision X logging works, I constructed this snapshot. So GPU 1 is loaded, but not very heavily!! It would be nice if there's a way to clone GPU2's settings to GPU1... | |

| ID: 34713 | Rating: 0 | rate:

| |

|

I'm beginning to think my GPU1 has lost it and needs to be replaced. It's still running at 10% of its potential. | |

| ID: 34716 | Rating: 0 | rate:

| |

I wouldn't plan on putting 4 GPUs on that mobo. They'll fit but I've been reading reports and info that indicate those mobos can't supply the current required by some video cards through the PCIe slot. Check out the thread titled "What to build in 2014?", where Retvari explains. The PCIe bus itself (separate from the GPU) requires power too. I'm not sure exactly why it requires that many amps, but I know from other reading that it does, and I am confident Retvari has done the calculations in that other thread correctly. The pins on the 24-pin connector can supply that much amperage for a little while, but they will run hot and eventually deteriorate due to the high temperature, to the point that they melt and/or catch fire. Remember, the connector is only a mechanical connection, not a soldered one, so it cannot carry as much current as the wires leading to and from it. Hmmm. That makes me think a good upgrade would be to cut the connector off and solder the wires directly to the mobo/pins, but that marries the PSU to the mobo, which is not convenient. | |
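Retvari's actual figures are in the other thread and aren't reproduced here. As a rough, independent illustration of why slot power matters, the sketch below does worst-case arithmetic under stated assumptions: each x16 card pulls the PCIe slot maximum of 5.5 A on +12 V (66 W of the 75 W slot budget; the 3.3 V rail is ignored), all of that current arrives through the two +12 V pins of the 24-pin ATX connector and splits evenly between them, and nothing else on the board shares those pins. The per-pin rating is a hypothetical placeholder, so check the datasheet for the terminals your PSU actually uses. Real cards may draw less from the slot and more from their 6/8-pin plugs.

```python
"""Worst-case +12 V current through the ATX 24-pin connector when several
GPUs draw power from their PCIe x16 slots.

Assumptions (not measurements):
  * each card pulls the PCIe x16 graphics slot maximum of 5.5 A on +12 V;
  * all slot current enters the board via the 24-pin's +12 V pins,
    split evenly, with nothing else sharing those pins;
  * ASSUMED_PIN_RATING_AMPS is a hypothetical placeholder, not a datasheet value.
"""

SLOT_12V_MAX_AMPS = 5.5        # PCIe limit for an x16 graphics slot, +12 V rail
ATX24_12V_PINS = 2             # +12 V pins on a 24-pin ATX main connector
ASSUMED_PIN_RATING_AMPS = 6.0  # hypothetical per-pin rating; check your terminals


def total_slot_amps(num_gpus: int, amps_per_slot: float = SLOT_12V_MAX_AMPS) -> float:
    """Total +12 V current drawn through the slots by num_gpus cards."""
    if num_gpus < 0:
        raise ValueError("num_gpus must be non-negative")
    return num_gpus * amps_per_slot


def amps_per_12v_pin(num_gpus: int, amps_per_slot: float = SLOT_12V_MAX_AMPS) -> float:
    """Current per +12 V pin, assuming an even split across the pins."""
    return total_slot_amps(num_gpus, amps_per_slot) / ATX24_12V_PINS


if __name__ == "__main__":
    for n in range(1, 5):
        per_pin = amps_per_12v_pin(n)
        verdict = "OVER" if per_pin > ASSUMED_PIN_RATING_AMPS else "within"
        print(f"{n} GPU(s): {total_slot_amps(n):.1f} A total, "
              f"{per_pin:.2f} A per pin ({verdict} the assumed "
              f"{ASSUMED_PIN_RATING_AMPS:.1f} A rating)")
```

With these placeholder numbers, two cards already put 5.5 A on each pin, and three or four cards (8.25 A and 11 A per pin) exceed the assumed rating. Swap in real figures for your board and PSU before drawing any conclusions.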

| ID: 34718 | Rating: 0 | rate:

| |

I'm beginning to think my GPU1 has lost it and needs to be replaced. It's still running at 10% of its potential. That's a good idea. If the card is OK in your old rig, I suggest you try this card in the original PC, but remove the second card for a day. | |

| ID: 34723 | Rating: 0 | rate:

| |

|

Tomba, NVIDIA Control Panel: set Power management mode to "Prefer maximum performance". If that doesn't work, tweak the clocks slightly (to try to force them to stick), suspend CPU work (in case the CPU is saturated and is preventing the GPU from using enough power, which causes it to run in low-power mode), then shut down, check the power connectors and do a cold restart. If it does start running normally, work out what caused the downclock and make sure the GPU fans are working properly... | |

| ID: 34724 | Rating: 0 | rate:

| |

I'm beginning to think my GPU1 has lost it and needs to be replaced. It's still running at 10% of its potential. Removed the offending PNY GPU and replaced it with the ASUS GPU from my old rig. Both are running full speed ahead. Put the offender into my old rig. The Nvidia driver kept stalling and the rig died. Tried a few times. Nada. Printed the Amazon returns doc and parcelled it up. Bye-bye tomorrow... | |

| ID: 34729 | Rating: 0 | rate:

| |

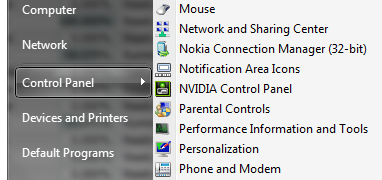

Tomba, NVidia control panel - Prefer maximum performance. Thanks for responding, skgiven. There was a time when I had the Nvidia control panel on the task bar, but those days are gone. And I could not find it anywhere else:  | |

| ID: 34730 | Rating: 0 | rate:

| |

|

It's a control panel: | |

| ID: 34731 | Rating: 0 | rate:

| |

|

If you right-click on your desktop screen... | |

| ID: 34745 | Rating: 0 | rate:

| |

|

Thread starter here again! | |

| ID: 34855 | Rating: 0 | rate:

| |

|

I have the same MOBO, same CPU and two EVGA 660s, but 5 fans and the case closed, despite what others are saying, and all my temperatures are lower than yours. Nothing is over 56°C. Running 24/7, ambient temperature 23.4°C. | |

| ID: 34856 | Rating: 0 | rate:

| |

I have the same MOBO, same CPU and two EVGA 660s, but 5 fans and the case closed, despite what others are saying, and all my temperatures are lower than yours. Nothing is over 56°C. Running 24/7, ambient temperature 23.4°C. Thanks for responding TJ :) I connected the two missing fans and closed the case, as always (I broke a top fan blade with my finger some time ago with one side off...). That got rid of the PCIe warning. This morning I found an ASUS warning "CPU at 65C". In fact it was at 67C, with its fan on turbo. Stopping two Rosetta threads brought the CPU temp down to 63C. Looks like I cannot keep all my CPU cores busy... What case are you using? | |

| ID: 34861 | Rating: 0 | rate:

| |

|

On 18th January I reported that one of my two PNY 660s appeared to have gone on the blink, running very slowly. I replaced it. | |

| ID: 34862 | Rating: 0 | rate:

| |

|

Tomba: | |

| ID: 34864 | Rating: 0 | rate:

| |

Tomba: Thanks for responding Jacob :) 1) I've been running 334.67 for four days. 2) Did that previously. Just checked that it stuck. It did. 3) No luck!! | |

| ID: 34865 | Rating: 0 | rate:

| |

|

Hmph. You might consider opening a ticket with NVIDIA support, while you continue researching it. Could very well be a driver bug. | |

| ID: 34866 | Rating: 0 | rate:

| |

Hmph. You might consider opening a ticket with NVIDIA support, while you continue researching it. Could very well be a driver bug. Could be, but almost once a day a Santi WU downclocks my 660. After half a year of experimenting I came to the conclusion that Santi WUs and 660s don't work well together, so I have to reboot that system once a day. @Tomba: mine is an older and smaller case than yours. I'd have to check exactly what type it is, but it is roughly similar to a Cooler Master 6xx series. I don't have the CPU fan in turbo mode, and it is running 7 cores continuously at 54-55°C. ____________ Greetings from TJ | |

| ID: 34867 | Rating: 0 | rate:

| |

|

Here's another view of my problem, courtesy of ASUS GPU Tweak. I show the two GPUs side by side. | |

| ID: 34868 | Rating: 0 | rate:

| |

|

I just submitted a problem report to PNY. Let's see what happens... | |

| ID: 34869 | Rating: 0 | rate:

| |

|

It's more likely to be an NVIDIA driver problem. You should contact NVIDIA. | |

| ID: 34870 | Rating: 0 | rate:

| |

It's more likely to be an NVIDIA driver problem. You should contact NVIDIA. OK! Did that... | |

| ID: 34871 | Rating: 0 | rate:

| |

On 18th January I reported that one of my two PNY 660s appeared to have gone on the blink, running very slowly. I replaced it. Try increasing the "Power Target" to something like 110%. That has fixed it for me for two GTX 660s on one PC running Win7 64-bit (331.65 drivers) and for another PC running one GTX 660 on WinXP (332.21 drivers). Otherwise, the card hits its power limit on difficult work units and downclocks to protect itself. In hard cases you might have to increase the core voltage a little and downclock somewhat (reduce the GPU core frequency by 50 MHz or so). As long as the temperature does not get too high, you should not have a problem. | |

| ID: 34872 | Rating: 0 | rate:

| |

|

Jim, | |

| ID: 34873 | Rating: 0 | rate:

| |

Jim, OK, thanks. Maybe his card is bad as he suspects. I was wondering how long they last on average; Maxwell may be delayed a little, and we are running them harder than the average gamer would. | |

| ID: 34874 | Rating: 0 | rate:

| |

Jim, Tomba needs to reboot his system to get the cards back up to full clock speed; they are running in downclocked mode now! | |

| ID: 34876 | Rating: 0 | rate:

| |

|

I removed the offending PNY 660. Switched on and the second PNY ran full belt. | |

| ID: 34877 | Rating: 0 | rate:

| |

So - I'm back in full production but I've no idea how!! Simply by rebooting the system. That is what you should do at once when you have a downclocked GPU. All other work is unnecessary. | |

| ID: 34878 | Rating: 0 | rate:

| |

|

Rebooting the system is a workaround, not a fix. | |

| ID: 34879 | Rating: 0 | rate:

| |

Rebooting the system is a workaround, not a fix. Yes, the problem will recur when he hits another difficult portion of the work unit. But I don't see that he has reduced the GPU clock yet. The clock is set to 1110 MHz, and running at 1123 MHz as I read it; in my experience most 660s won't run stably when the base is set much above 1000 MHz (the boost will take it higher than that, but I would let the on-chip circuitry handle that). And the voltage might have to go up a little; 1175 mV should be about right. | |

| ID: 34880 | Rating: 0 | rate:

| |

So - I'm back in full production but I've no idea how!! TJ - in the couple of days since the problem arose I must have rebooted six times... | |

| ID: 34881 | Rating: 0 | rate:

| |

But I don't see that he has reduced the GPU clock yet. A couple of times in this thread I've asked what app to use for doing just that... | |

| ID: 34882 | Rating: 0 | rate:

| |

|

You can use Precision-X to change the "GPU Clock Offset" to not only raise it... but lower it. | |

| ID: 34883 | Rating: 0 | rate:

| |

So - I'm back in full production but I've no idea how!! What do you mean by reboot? Do you mean a shutdown->poweroff->restart, or simply shutting down the OS and restarting without a poweroff? Those are 2 different things. Your last reboot, when everything started working, must have involved a poweroff, IIUC, as it sounds like you switched some cards around. If previous reboots were not from a powered-off condition, then that's probably why they did not make any difference. Also, powering off and letting it sit for 15 minutes sometimes makes a difference. Some components fail when warm but work again after they are powered off and allowed to cool. They fail again when they run for a while and warm up again, but that pattern is a clue to the solution. Also, certain settings and/or conditions in some hardware components don't reinitialize unless you leave the power off long enough for the capacitors to drain down (lose their charge). That can take a few minutes. So if all else fails, do an OS shutdown and power off everything for 30 minutes, even the monitor and modem. | |

| ID: 34884 | Rating: 0 | rate:

| |

But I don't see that he has reduced the GPU clock yet. As Jacob said, you should be able to use Precision-X, though I have never used it. But MSI Afterburner also works on any card, with a little learning curve on how to save your settings and how to automatically re-enable them after a reboot (it is slightly obscure). My favorite for Nvidia cards, since that is all we are talking about here anyway, is Nvidia Inspector http://www.guru3d.com/files_details/nvidia_inspector_download.html Just select "Show Overclocking", and change the Base Clock, Voltage Offset and Power Target as you wish. Then, click on "Apply Clocks & Voltage", and finally save your settings by right-clicking on the "Create Short Cuts" button, and create a Clock Startup Task for Win7 (the "Create Clocks Shortcut" is for WinXP, and you then move that shortcut from the desktop to the startup folder). Occasionally I find a card where one or the other of these parameters can't be controlled by software and I have to modify the BIOS, but that is another subject that hopefully you can avoid. | |

| ID: 34885 | Rating: 0 | rate:

| |

|