Message boards : News : WU: NOELIA_INS1P


Hi all,

ID: 32663

These slow a GTX 460/768 MB GPU to a crawl. Santis and Nathans run fine.

ID: 32798

I run these on both the machine with the Titans and the one with the 2 GB GTX 650 Ti.

ID: 32809

Running at 95% GPU load on a GTX 660 Ti in Windows 7! Really nice!

ID: 32866

> Running at 95% GPU load on a GTX 660 Ti in Windows 7! Really nice!

Yes, I finally got one too. Running a steady 92% on my 770 at 66 °C.

Greetings from TJ

ID: 32869

It is running at 96% on my GTX 650 Ti (63 °C with a side fan). At 60% complete, it looks like it will take 18 hours 15 minutes to complete. And no problems with the memory (774 MB used).

ID: 32872

Just got 80-NOELIA_INS1P-5-15-RND4120_0. It really is putting my 650 Ti through its paces!

`vagelis@vgserver:~$ gpuinfo`

- Fan Speed: 54 %
- GPU: 67 C
- Memory Usage: Total 1023 MB, Used 938 MB, Free 85 MB

The WU is only at the start (1.76%) and is estimated to take 22:44. I expect this to drop significantly.

ID: 32928

> memory (774 MB used)

That's undoubtedly why they won't run on the GTX 460/768 MB cards. They work fine on my 1 GB GPUs, but I have to abort them on the 460 in favor of Santi and Nathan WUs.

ID: 32929

Finally I got another Noelia WU. It ran steadily on my 660 at a GPU load of 97-98%, whereas Nathan's run at only 88-89%. And it ran in one go, which means no termination and restart because of the simulation becoming unstable.

ID: 32952

> memory (774 MB used)

I noticed a lot of failures on the 400-series cards and almost posted about it, but wasn't sure why. I think you have explained it.

ID: 32953

> Just got 80-NOELIA_INS1P-5-15-RND4120_0. It really is putting my 650 Ti through its paces!

Finished successfully in 81,572.91 s (22.7 h) on the 650 Ti (running on Linux). So it didn't take 18-19 hours like previous NOELIAs; it must be a more complex WU. 180k is sweet! :)

ID: 32956

> These slow a GTX 460/768 MB GPU to a crawl. Santis and Nathans run fine.

Sounds like the minimum GPU memory requirement is set too low for these; otherwise BOINC would refuse to run them on such cards.

MrS

ID: 32966

> These slow a GTX 460/768 MB GPU to a crawl. Santis and Nathans run fine.

MrS, can the minimum memory requirement be set for specific WUs or only for the app in general?

ID: 32969

I don't know; I've never set up a BOINC server or created WUs myself. But if I had programmed BOINC, this would be a setting tagged to each WU, because that's the only way it makes sense. The entire credit system was based on the idea that different WUs can have different contents.

ID: 32971

> These slow a GTX 460/768 MB GPU to a crawl. Santis and Nathans run fine.

I believe it might need to be set at the plan_class level, which sits between the app and the WU. So we might need to enable something like cuda55_himem and create _INS1P WUs for that class only.

ID: 32972
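The plan-class idea above amounts to a memory gate applied before work is sent to a host. Below is a minimal Python sketch of that gate, assuming a hypothetical `PlanClass` with a `min_gpu_ram_mb` floor; it is not BOINC's scheduler code, and both thresholds are illustrative assumptions. Only the card sizes (768 MB, and a 1 GB card reporting 1023 MB) and the `cuda55_himem` name come from this thread.

```python
from dataclasses import dataclass


@dataclass
class PlanClass:
    # Hypothetical plan class: a name plus the minimum GPU memory a host must report.
    name: str
    min_gpu_ram_mb: int


@dataclass
class Host:
    gpu_name: str
    gpu_ram_mb: int  # total memory as reported by the driver


# Hypothetical thresholds. The high-memory floor sits a little below 1024 MB because
# a 1 GB card can report slightly less (1023 MB in the gpuinfo output quoted in this thread).
CUDA55 = PlanClass("cuda55", min_gpu_ram_mb=512)
CUDA55_HIMEM = PlanClass("cuda55_himem", min_gpu_ram_mb=1000)


def can_send(host: Host, plan: PlanClass) -> bool:
    """True if the host's GPU meets the plan class memory floor."""
    return host.gpu_ram_mb >= plan.min_gpu_ram_mb


def eligible_plans(host: Host, plans: list[PlanClass]) -> list[str]:
    """Names of the plan classes whose work could be sent to this host."""
    return [p.name for p in plans if can_send(host, p)]


plans = [CUDA55, CUDA55_HIMEM]
print(eligible_plans(Host("GTX 460", 768), plans))      # ['cuda55']
print(eligible_plans(Host("GTX 650 Ti", 1023), plans))  # ['cuda55', 'cuda55_himem']
```

If the ~774 MB INS1P WUs were issued only under the high-memory class, the 768 MB GTX 460 would simply never receive them, while 1 GB cards still would.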

I just had a work unit fail (on an otherwise completely stable system):

- Name: pnitrox118-NOELIA_INS1P-7-12-RND7320_4
- Workunit: 4777876
- Created: 16 Sep 2013, 19:44:18 UTC
- Sent: 17 Sep 2013, 4:01:45 UTC
- Received: 17 Sep 2013, 10:08:54 UTC
- Server state: Over
- Outcome: Computation error
- Client state: Compute error
- Exit status: -97 (0xffffffffffffff9f) Unknown error number
- Computer ID: 153764
- Report deadline: 22 Sep 2013, 4:01:45 UTC
- Run time: 2.31
- CPU time: 2.13
- Validate state: Invalid
- Credit: 0.00
- Application version: Long runs (8-12 hours on fastest card) v8.14 (cuda42)

Stderr output:

    <core_client_version>7.2.11</core_client_version>
    <![CDATA[
    <message>
    (unknown error) - exit code -97 (0xffffff9f)
    </message>
    <stderr_txt>
    # GPU [GeForce GTX 660 Ti] Platform [Windows] Rev [3203] VERSION [42]
    # SWAN Device 0 :
    #   Name           : GeForce GTX 660 Ti
    #   ECC            : Disabled
    #   Global mem     : 3072MB
    #   Capability     : 3.0
    #   PCI ID         : 0000:09:00.0
    #   Device clock   : 1124MHz
    #   Memory clock   : 3004MHz
    #   Memory width   : 192bit
    #   Driver version : r325_00 : 32680
    # Simulation unstable. Flag 9 value 992
    # Simulation unstable. Flag 10 value 909
    # The simulation has become unstable. Terminating to avoid lock-up
    # The simulation has become unstable. Terminating to avoid lock-up (2)
    </stderr_txt>
    ]]>

ID: 33050
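To spot this failure mode across many results, the instability lines in a stderr dump like the one above can be extracted mechanically. This is a minimal Python sketch that assumes the exact message wording shown in that log; what the flag numbers mean is not explained in the thread, so the sketch only collects them.

```python
import re

# Matches lines such as "# Simulation unstable. Flag 9 value 992" (wording taken from the log).
UNSTABLE_RE = re.compile(r"Simulation unstable\. Flag (\d+) value (\d+)")
TERMINATED_MARKER = "The simulation has become unstable"


def summarize_stderr(text: str) -> dict:
    """Collect (flag, value) pairs and count termination messages in a stderr dump."""
    flags = [(int(flag), int(value)) for flag, value in UNSTABLE_RE.findall(text)]
    terminations = sum(1 for line in text.splitlines() if TERMINATED_MARKER in line)
    return {"flags": flags, "terminations": terminations}


sample = """\
# Simulation unstable. Flag 9 value 992
# Simulation unstable. Flag 10 value 909
# The simulation has become unstable. Terminating to avoid lock-up
# The simulation has become unstable. Terminating to avoid lock-up (2)
"""

print(summarize_stderr(sample))
# {'flags': [(9, 992), (10, 909)], 'terminations': 2}
```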

It's failed on every other host too, so it's a bad work unit.

ID: 33055

Just spotted a NOELIA_INS1P at 123 h into a run, and only at 43% complete!

ID: 33057

> Just spotted a NOELIA_INS1P at 123 h into a run, and only at 43% complete!

That is what still concerns me. I can take errors, but stalling a machine may be even worse than a crash.

ID: 33058

> Just spotted a NOELIA_INS1P at 123 h into a run, and only at 43% complete!

Oops, dskagcommunity has it now: http://www.gpugrid.net/workunit.php?wuid=4771472

ID: 33060

I wonder whether it will behave itself for dskagcommunity. He's running it with v8.14 (cuda42).

ID: 33064

Oh.

ID: 33067

> Oh.

While I was reading your words, this video just snapped into my mind. Sorry for being off topic.

ID: 33068

Yeah, I can see/hear why that popped into your head!

ID: 33070

The WU completed on both systems and both systems got partial credit,

ID: 33090

Just wanted to say Noelia's recent WUs are beating Nathan's by a long shot on the 780s. Average GPU usage and memory usage are 80%/20% for Nathan vs. 90%/30% for Noelia. Her tasks also get far fewer access violations.

ID: 33136

> Just wanted to say Noelia's recent WUs are beating Nathan's by a long shot on the 780s. Average GPU usage and memory usage are 80%/20% for Nathan vs. 90%/30% for Noelia. Her tasks also get far fewer access violations.

Yes, I see the same on my 770 as well. I even got a Noelia beta on my 660, and it performed better than the other beta (Santi's, I think they were).

Greetings from TJ

ID: 33145

> Just wanted to say Noelia's recent WUs are beating Nathan's by a long shot on the 780s. Average GPU usage and memory usage are 80%/20% for Nathan vs. 90%/30% for Noelia.

The other side of the coin is that Noelia WUs cause CPU processes to slow somewhat and can bring WUs on the AMD GPUs to their knees (my systems all have 1 NV and 1 AMD each). These problems are not seen with either Nathan or Santi WUs.

ID: 33169

Personally, I have no issue with GPUs stealing CPU resources if needed. I'd rather feed the roaring lion than the grasshopper.

ID: 33170

Arrgh... potx21-NOELIA_INS1P-1-14-RND1061_1. Another one with this in the stderr output:

ID: 33594

Let's see how the next guy does on it. Just fine, I see... Never mind. Move along. Nothing to see here.

ID: 33600

> Arrgh... potx21-NOELIA_INS1P-1-14-RND1061_1. Another one with this in the stderr output:

A large part could be due to the fact that these WUs consume a GB of VRAM and OC

ID: 33612

My failure rate on these units is getting quite bad: three of them in the last couple of days, and the wingmen are completing them fine. No problems with any other type of WU. Any advice?

ID: 33628

4 errors from 67 WUs isn't very bad, but they are all NOELIA WUs, so there is a trend. You are completing some, though.

ID: 33634

I have had a long run of success (61 straight valid GPUGrid tasks over the past 2 weeks!), including 4 successful NOELIA_INS1P tasks, on my multi-GPU Windows 8.1 x64 machine.

ID: 33637

> 4 errors from 67 WUs isn't very bad, but they are all NOELIA WUs, so there is a trend. You are completing some, though.

4 out of 67 is OK, I agree, but it's around 50% for these NOELIA_INS1P, so the trend is there, as you say. No other type has failed in the last few months, including other NOELIA types. The two units of the same type that followed the last failure completed fine... maybe it's the Moon's influence :)

ID: 33642
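Why 4 of 67 can still hide a trend: the overall rate mixes every WU type together, while a per-type rate isolates the problem batch. A short Python sketch follows; the per-type split is hypothetical, chosen only to be consistent with the 4-of-67 total, the "around 50%" figure and the "no other type has failed" remark in this exchange.

```python
from collections import Counter


def failure_rates(results):
    """results: iterable of (wu_type, ok) pairs -> {wu_type: (failed, total, rate)}."""
    totals, failures = Counter(), Counter()
    for wu_type, ok in results:
        totals[wu_type] += 1
        if not ok:
            failures[wu_type] += 1
    return {t: (failures[t], totals[t], failures[t] / totals[t]) for t in totals}


# Hypothetical split: 67 tasks, 4 failures, all among 8 NOELIA_INS1P tasks.
results = (
    [("NOELIA_INS1P", False)] * 4
    + [("NOELIA_INS1P", True)] * 4
    + [("other", True)] * 59
)

overall = sum(not ok for _, ok in results) / len(results)
print(f"overall: {overall:.1%}")  # overall: 6.0%
for wu_type, (failed, total, rate) in failure_rates(results).items():
    print(f"{wu_type}: {failed}/{total} = {rate:.0%}")
# NOELIA_INS1P: 4/8 = 50%
# other: 0/59 = 0%
```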

> Betting Slip wrote: A large part could be due to the fact that these WUs consume a GB of VRAM and OC

Yep, good call. I've got another one of these running. Afterburner shows memory usage at slightly more than 1.1 GB... I suppose that's stressing my GTX 570 (1280 MB), isn't it?

ID: 33649

I had one fail for becoming unstable on a GTX 560 Ti with the same amount of memory. They should be OK with that amount, but they're still failing. It's this sort of problem that scares away contributors and annoys the hell out of me.

ID: 33651

Apparently they have quite a small error rate (<10%), so there's nothing systematic to worry about.

ID: 33652

I've had only 12 failures this month (that are still in the database), but 3 of the last 4 were NOELIA_INS1P tasks. If I include 2 recent NOELIA_FXArep failures, that's 5 of the last 6 failures. Of course, I've been running more of Noelia's work recently, as there have been more tasks available.

ID: 33670

On my GTX 660s (Win7 64-bit with 331.58 drivers):

ID: 33677

If you want to avoid the reduced power efficiency that comes with the increased voltage, you could also scale the GPU clock back by 13 or 26 MHz. That should have the same stabilizing effect (but be a little slower and a little more power efficient).

ID: 33715

> If you want to avoid the reduced power efficiency that comes with the increased voltage, you could also scale the GPU clock back by 13 or 26 MHz. That should have the same stabilizing effect (but be a little slower and a little more power efficient).

Actually, I do set both cards back by 10 MHz, but for a different reason. I found that the problem card still had the slowdown on a subsequent Noelia work unit. Then I remembered another old trick that sometimes works to keep the clocks going: let MSI Afterburner control them. It doesn't seem to matter whether you increase or decrease the clock rate from the default, or by what amount. My guess is that it takes control away from the Nvidia software, or whatever they use. At least it has been working for six days now, which is encouraging, if not proof.

But such a small change in clock rate (it is very close to the Nvidia default of 980 MHz anyway) makes no discernible change in temperature or power consumption as measured by GPU-Z. I would have to make a much larger change than that, which I will do if necessary. I think the chip on that particular card was just weak; when they test them, I am sure they don't run them through anything as rigorous as what we do here.

I also had to bump up the voltage a little more: I started at 25 mV, but that wasn't quite enough, so now it is 37 mV. It has been error-free for a couple of days and three Noelias, but I need more Noelias to test it.

ID: 33721

The clock granularity of Keplers is 13 MHz, so you might want to keep to multiples of this. If you don't, the value is rounded. That's no problem, unless you change clocks a bit and it gets rounded to the same clock speed, so nothing actually changes.

ID: 33724
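The 13 MHz granularity can be illustrated with a tiny helper that snaps a requested offset to a multiple of the step. The thread doesn't say which rounding rule the driver uses, so this Python sketch assumes round-to-nearest; `snap_offset` is a hypothetical name, not part of any tuning tool.

```python
KEPLER_STEP_MHZ = 13  # clock granularity stated in the post above


def snap_offset(offset_mhz: int, step: int = KEPLER_STEP_MHZ) -> int:
    """Round a requested clock offset to the nearest multiple of `step` (assumed rounding rule).

    Integer offsets never land exactly halfway between multiples of 13,
    so Python's round-half-to-even behaviour never comes into play here.
    """
    return step * round(offset_mhz / step)


for requested in (-10, 0, 5, 20, 26):
    print(f"{requested:+d} MHz -> {snap_offset(requested):+d} MHz")
# -10 MHz -> -13 MHz
# +0 MHz -> +0 MHz
# +5 MHz -> +0 MHz
# +20 MHz -> +26 MHz
# +26 MHz -> +26 MHz
```

Under this assumption a +5 MHz tweak is indistinguishable from no change, which is the situation described above.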

> And you're right, +/-10 MHz has a negligible effect on power consumption. What I was referring to was the increased power consumption from the voltage increase. It's not dramatic either (larger than what the frequency change causes), but it's something you might not want.

There is a small effect from the voltage increase so far, but not much. The problem card (0) is in the top slot and runs a couple of degrees hotter than the bottom card (1) even without the boost, typically 68 and 66 °C, probably due to airflow from the side fans (I have one of the few motherboards that puts the top card in the very top slot, which then raises the lower card up as well). When I raise the voltage, it adds a degree (or less) on average. I probably should swap their slot positions, but it is not that important yet. The bottom card has done quite well, with no errors in over a week; only the top card has had errors: http://www.gpugrid.net/results.php?hostid=159002&offset=0&show_names=1&state=0&appid=

I would normally buy Asus cards for better cooling (the non-overclocked versions), but needed the space savings of these Zotac cards at the time. Now they are in a larger case, and I can replace them with anything if need be. The main point for me is that the big problems of a few months ago are past, for the moment.

ID: 33729

The project's tasks have been quite stable of late, the only recent exception being a small batch of WUs that failed quickly. So it's a good time to find out whether you have a stable system or not.

ID: 33743

> If you have exhaust-cooling GPUs, then the side fans would be better blowing into the case. If not, these fans might be better blowing out (but it depends on the case and other fans).

I have two 120 mm side fans blowing in, a 120 mm rear fan blowing out, and a top 140 mm fan blowing out (the power supply is bottom-mounted). I think that establishes the airflow over the GPUs pretty well, but you never know until you try it another way. As you point out, it can do strange things. However, the top temperature for the top card is about 70 °C, which is reasonable enough. The real limitation on temperature now is probably just the heatsinks/fans on the GPUs themselves.

But my theory of why that card had errors has more to do with the power limit than with temperature per se. It would bump up against the power limit (as shown by GPU-Z), so the voltage (and/or current) to the GPU core could not increase any further when the Noelias needed it. By increasing the power limit to 105% and raising the base voltage, it can supply the current when it needs it. That particular chip just fell on the wrong end of the speed/power yield curve for number-crunching use, though it would be fine for other purposes. And I can repurpose it if need be; it just needs to last until the Maxwells come out.

ID: 33744

The claim is that errors can be caused by "not having enough voltage" or by "having too high of a temperature".

ID: 33746

> The claim is that errors can be caused by "not having enough voltage" or by "having too high of a temperature".

All semiconductor manufacturers create yield curves for their production lots. They show how much voltage/current it takes to achieve a given speed. In general, the more power you supply to the chip, the faster it can be clocked. Of course, it also gets hotter, which can eventually destroy the chip. That is why a power limit is also specified (e.g., 95 watts for some Intel CPUs). But the chips vary: some can run fast at lower power, while others require more power to reach the same speeds. You can get errors for a variety of reasons, with temperature being just one. I have seen errors even below 70 °C, so some other limitation may get you first.

ID: 33748

'New' (old?) 94x4-NOELIA_1MG_RUN4, very long running (over 24 hrs).

ID: 33786

> 'New' (old?) 94x4-NOELIA_1MG_RUN4, very long running (over 24 hrs).

You will struggle with this type of WU because one of your cards has only 1 GB of memory, and this Noelia unit uses 1.3 GB but not much CPU. It will probably make any computer with a 1 GB card unresponsive. I agree that the project is shooting itself in the foot by just dumping these WUs on machines that can't chew them: http://www.gpugrid.net/forum_thread.php?id=3523

ID: 33787

> 'New' (old?) 94x4-NOELIA_1MG_RUN4, very long running (over 24 hrs).

Same here, with a GTX 680 or GTX 580. Very long crunch!!!

ID: 33795

Oh, so it's not just me again... 32 hours on a 560 Ti 448-core, 1.28 GB -_-

ID: 33796

My 35x5-NOELIA_1MG_RUN4-2-4-RND8673_0 running on a GTX 660 Ti is at 34% after 4 h 22 min, so it should complete in about 13 h (Win7 x64).

ID: 33797
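The "about 13 h" figure is a straight-line extrapolation from elapsed time and percent complete. A minimal Python sketch of that arithmetic, assuming progress advances at a constant rate (which is why very early estimates, like the 22:44 at 1.76% mentioned earlier, can be far off):

```python
def estimate_total_hours(elapsed_min: float, fraction_done: float) -> float:
    """Linearly extrapolate total run time, assuming progress advances at a constant rate."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_min / fraction_done / 60


# Figures from the post above: 34% done after 4 h 22 min.
total_h = estimate_total_hours(4 * 60 + 22, 0.34)
print(f"estimated total: {total_h:.1f} h")  # estimated total: 12.8 h

# Converting a reported run time in seconds, e.g. the 81,572.91 s result earlier in the thread.
print(f"{81_572.91 / 3600:.1f} h")  # 22.7 h
```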

These NOELIA_1MG are about

ID: 33799

> My 35x5-NOELIA_1MG_RUN4-2-4-RND8673_0 running on a GTX 660 Ti is at 34% after 4 h 22 min, so it should complete in about 13 h (Win7 x64).

On a GTX 660 Ti with 2 GB of memory there's NO PROBLEM, but this post is all about cards with less than 2 GB.

ID: 33801

The NOELIA_1MG WU I'm currently running is using 1.2 GB of GDDR5, so it wouldn't do well on a 1 GB card.

ID: 33806

> Would be interesting to know how much GDDR was being used on the different operating systems (XP, Linux, Vista, W7, W8).

I'm not sure if we're talking about the same error here, but I had potx234-NOELIA_INS1P-12-14-RND6963_0 fail on my 660 Ti with 3 GB of memory on Linux, driver 331.17 (more details here). That task also failed on this host (1 GB, Linux, 560 Ti, driver unknown) but succeeded on this host (2 GB, Win7, two 680s). I've had 4 other Noelias run successfully on my 660 Ti on Linux.

ID: 33812

> The claim is that errors can be caused by "not having enough voltage" or by "having too high of a temperature".

Hi Jacob, I suppose you wouldn't mind going a bit deeper?

To make a transistor switch (at a very high level), you apply a voltage, which in turn pulls electrons through the channel (or "missing electrons", aka holes, in the other direction). This physical movement of charge carriers is needed to make it switch. And it takes some time, which ultimately limits the clock speeds a chip can reach.

This is where temperature and voltage must be considered. The voltage is a measure of how hard the electrons are pulled, or how quickly they're accelerated. That's why the maximum achievable (error-free) frequency scales approximately linearly with voltage.

Temperature is a measure of the vibrations of the atomic lattice. Without any vibrations the electrons wouldn't "see" the lattice at all. The atoms (in a single crystal) form a perfectly periodic potential landscape, through which the electrons move as waves. If this periodic structure is disturbed (e.g. by random fluctuations caused by a temperature > 0 K), the electrons scatter off these perturbations. This slows their movement down and heats the lattice up (like in a regular resistor).

In a real chip there are chains of transistors, which all have to switch within each clock cycle. In CPUs, each stage of the pipeline is such a domain. If individual transistors switch too slowly, the computation result will not yet have reached the output stage of that domain when the next clock cycle is triggered. The old result (or something in between, depending on how the result is composed) will be used as the input for the next stage, and a computation error will have occurred. That's why timing analysis is so important when designing a chip: the slowest path limits the overall clock speed the chip can achieve.

Putting it all together, it should now be clearer how increased temperature and too low a voltage can lead to errors. And to get a bit closer to reality: the real switching speed of each transistor is affected by many more factors, including fabrication tolerances, non-fatal defects (which also scatter electrons and hence slow them down) and defects that develop from operating the chip under prolonged load (at high temperature and voltage).

At this point I can hand over to Jim: the manufacturer profiles their chips and determines proper working points (clock speed and voltage at the maximum allowed temperature). Depending on how carefully they do this (e.g. Intel usually allows plenty of headroom, whereas factory-OC'ed GPUs have occasionally been set too aggressively), things work out normally... or the end user could see calculation errors. Mostly these will only appear under unusual workloads (which weren't tested for) or after significant chip degradation. Or just due to bad luck that wasn't caught by the initial IC error testing (which is seldom, luckily).

Hope this helps :)

MrS

ID: 33821
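The "slowest path limits the clock" rule from the explanation above can be shown with a toy static timing check. This Python sketch uses made-up gate delays and a hypothetical 10% derating factor standing in for "hotter or undervolted"; real chips have vast numbers of paths and far more detailed delay models.

```python
def critical_path_ns(paths):
    """Largest total delay (ns) over all register-to-register paths."""
    return max(sum(gate_delays) for gate_delays in paths)


def max_clock_mhz(paths):
    """The slowest path sets the minimum clock period: f_max = 1 / t_critical."""
    return 1e3 / critical_path_ns(paths)  # a 1 ns period is 1000 MHz


def meets_timing(paths, clock_mhz):
    """True if every path settles within one clock period."""
    return critical_path_ns(paths) <= 1e3 / clock_mhz


def derate(paths, factor):
    """Scale every gate delay by `factor` (hypothetical stand-in for heat or low voltage)."""
    return [[d * factor for d in path] for path in paths]


# Hypothetical gate delays (ns) along three paths between pipeline registers.
paths = [
    [0.10, 0.12, 0.15, 0.20],  # 0.57 ns
    [0.25, 0.30, 0.25],        # 0.80 ns, the critical path
    [0.40, 0.20],              # 0.60 ns
]

print(f"f_max = {max_clock_mhz(paths):.0f} MHz")          # f_max = 1250 MHz
print(meets_timing(paths, 1200), meets_timing(paths, 1300))  # True False
print(meets_timing(derate(paths, 1.10), 1200))            # False
```

The last line is the point of the example: the same 1200 MHz clock that works on the nominal chip fails once every gate is 10% slower, which is how heat or insufficient voltage turns into calculation errors.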

It does help, thank you very much for the detailed explanation. I've read through it once, and I'll have to read through it a few more times for it to sink in. I actually studied Computer Engineering for a few years before switching over to a Bachelor's degree in Computer Information Systems.

ID: 33823

Windows can't catch these calculation errors because, frankly, it doesn't see them. The GPU-Grid app sends some commands to the GPU, the GPU processes something and returns results to the app. Unless the GPU behaves in some different way (stops responding, etc.), there's no way for the OS to tell whether the data returned is correct or garbage. In fact, not even GPU-Grid can know this unless they already know the result... but they can check their results for sanity, and, luckily for us, errors often have either no effect (on the long-term simulation result) or catastrophic effects.

ID: 33847
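A sanity check of the kind mentioned above only needs the output to look physically implausible, not a known-correct answer. The Python sketch below is purely hypothetical: it is not GPU-Grid's actual check, and the energy series and `max_jump` threshold are assumptions. It flags a trajectory whose energy becomes non-finite or jumps abruptly between steps, which is the "catastrophic" class of error that is easy to catch.

```python
import math


def sanity_check(energies, max_jump):
    """Return 'ok', or a message for the first non-finite value or step-to-step jump above max_jump.

    Hypothetical check: the energy series and threshold are illustrative assumptions.
    """
    for step, energy in enumerate(energies):
        if not math.isfinite(energy):
            return f"step {step}: non-finite value"
        if step > 0:
            jump = abs(energy - energies[step - 1])
            if jump > max_jump:
                return f"step {step}: jump of {jump:g}"
    return "ok"


print(sanity_check([-1000.0, -1001.5, -999.8], max_jump=50))  # ok
print(sanity_check([-1000.0, -1001.5, 2.0e6], max_jump=50))   # step 2: jump of 2.001e+06
print(sanity_check([-1000.0, float("nan")], max_jump=50))     # step 1: non-finite value
```

A small error that leaves the numbers plausible would pass such a check, which matches the point that some errors simply have no visible effect.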

> The claim is that errors can be caused by "not having enough voltage" or by "having too high of a temperature".

Something I've read that seems relevant to this explanation: today's CPU chips are approaching the lower limit of the voltages at which the transistors work properly. Therefore, the power used by each CPU core can't get much lower. Instead, the companies are increasing the total speed by putting more CPU cores in each CPU package.

Intel is also using a different method: Hyper-Threading. This gives each CPU core two sets of registers, so that while the core is waiting on memory operations for the program running with one set, it can use the other set to run another program. This makes the CPU act as if it had twice as many cores as it actually does.

If a programmer wants to use more than one of these CPU cores at the same time for the same program, that programmer must first study parallel programming in order to handle the communication between the different CPU cores properly.

I used to be an electronics engineer, specializing in logic simulation, often including timing analysis.

ID: 33975

Just crunched my first one on a GTX 770 at 76 °C in 31,145.32 s. Nice: 153,150.00 points ;-)

ID: 36876

> Just crunched my first one on a GTX 770 at 76 °C in 31,145.32 s. Nice: 153,150.00 points ;-)

You did indeed finish a Noelia WU, but not this type; it's the new one, NOELIA_BI. More importantly, your 770 can do better: mine finishes these new Noelias in about 27,000 seconds, and the temperature is only 66-67 °C. The cooler a GPU runs, the faster (and more error-free) it runs. So perhaps you can experiment with some settings to get the temperature a few degrees lower.

Greetings from TJ

ID: 36877

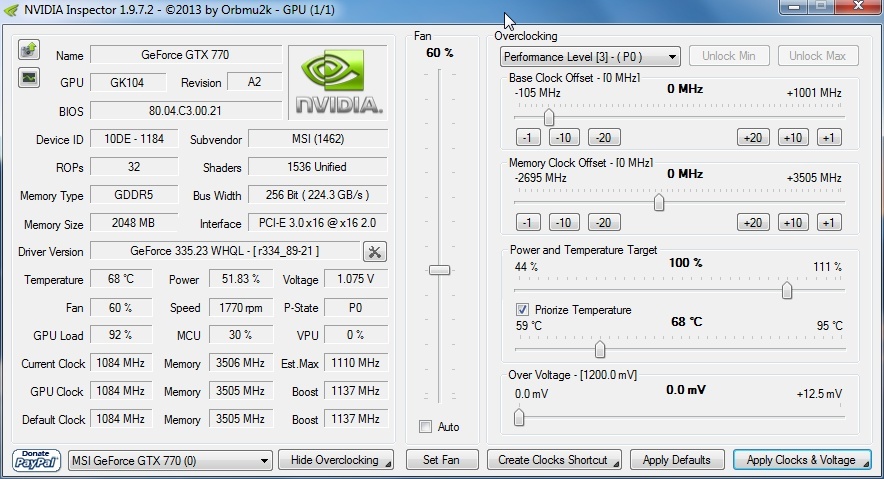

> your 770 can do better: mine finishes these new Noelias in about 27,000 seconds, and the temperature is only 66-67 °C.

Thanks for your advice. To lower the temperature, I'm using NVIDIA Inspector with the following settings: I unchecked Auto-Fan and set the fan to 60%, which raises its speed from 1300 to 1770 rpm; that is still ear-friendly. But it reduces the temperature by only 3 degrees. So I have to check the Prioritize Temperature box and set the slider to 68 °C, which slows down the GPU clock a little. Is there a better approach?

Regards, Josef

ID: 36880

> More importantly, your 770 can do better: mine finishes these new Noelias in about 27,000 seconds, and the temperature is only 66-67 °C. The cooler a GPU runs, the faster (and more error-free) it runs. So perhaps you can experiment with some settings to get the temperature a few degrees lower.

You might have accidentally been looking at your 780 Ti. Here are your 3 Noelia results from the 770 so far:

    # GPU [GeForce GTX 770] Platform [Windows] Rev [3301M] VERSION [42]
    # Approximate elapsed time for entire WU: 29643.715 s
    # GPU [GeForce GTX 770] Platform [Windows] Rev [3301M] VERSION [42]
    # Approximate elapsed time for entire WU: 29572.861 s
    # GPU [GeForce GTX 770] Platform [Windows] Rev [3301M] VERSION [42]
    # Approximate elapsed time for entire WU: 29676.489 s

ID: 36883
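Averaging the three elapsed times quoted above gives a quick comparison against TJ's "about 27,000 seconds". This Python sketch assumes the log lines keep the exact "Approximate elapsed time for entire WU: ... s" wording shown; the 27,000 s reference is TJ's rough figure, not a measured mean.

```python
import re
from statistics import mean

# Wording copied from the stderr lines quoted above.
ELAPSED_RE = re.compile(r"Approximate elapsed time for entire WU: ([\d.]+) s")

log = """\
# Approximate elapsed time for entire WU: 29643.715 s
# Approximate elapsed time for entire WU: 29572.861 s
# Approximate elapsed time for entire WU: 29676.489 s
"""

times = [float(t) for t in ELAPSED_RE.findall(log)]
avg = mean(times)
print(f"{len(times)} runs, mean {avg:.0f} s ({avg / 3600:.2f} h)")  # 3 runs, mean 29631 s (8.23 h)
print(f"vs. ~27000 s: {avg / 27000 - 1:+.1%}")                      # vs. ~27000 s: +9.7%
```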

You are absolutely correct, Beyond; my mistake.

ID: 36886

Now I have tested MSI Afterburner. There you can set a custom fan curve. However, I have a problem with it: to lower the temperature by 3-4 °C, the fan speed has to increase to 3300 rpm. This is unpleasant. With my GTX 680 I was able to reduce the temperature by 8 degrees by dismounting the cooler and renewing the thermal paste ;-) Unfortunately, the same procedure on the GTX 770 achieved nothing, since its thermal paste had not dried out. Too new ;-) So I will reduce the GPU clock a little to stay below 70 degrees. Reducing it from 1150 MHz to 1080-1100 MHz lowers the temperature by 5 degrees.

ID: 36888

Hi,

ID: 37368

Hi, I had the same error in 4 units so far. Here is an example of one:

- Name: potx1x492-NOELIA_INSP-3-13-RND4560_6
- Workunit: 9908013
- Created: 22 Jul 2014, 19:40:28 UTC
- Sent: 22 Jul 2014, 21:46:12 UTC
- Received: 22 Jul 2014, 23:03:18 UTC
- Server state: Over
- Outcome: Computation error
- Client state: Compute error
- Exit status: -98 (0xffffffffffffff9e) Unknown error number
- Computer ID: 127986
- Report deadline: 27 Jul 2014, 21:46:12 UTC
- Run time: 4.05
- CPU time: 2.06
- Validate state: Invalid
- Credit: 0.00
- Application version: Long runs (8-12 hours on fastest card) v8.41 (cuda60)

Stderr output:

    <core_client_version>7.2.42</core_client_version>
    <![CDATA[
    <message>
    (unknown error) - exit code -98 (0xffffff9e)
    </message>
    <stderr_txt>
    # GPU [GeForce GTX 690] Platform [Windows] Rev [3301M] VERSION [60]
    # SWAN Device 1 :
    #   Name           : GeForce GTX 690
    #   ECC            : Disabled
    #   Global mem     : 2048MB
    #   Capability     : 3.0
    #   PCI ID         : 0000:04:00.0
    #   Device clock   : 1019MHz
    #   Memory clock   : 3004MHz
    #   Memory width   : 256bit
    #   Driver version : r337_00 : 33788
    ERROR: file mdioload.cpp line 81: Unable to read bincoordfile
    19:03:38 (5576): called boinc_finish
    </stderr_txt>
    ]]>

http://www.gpugrid.net/result.php?resultid=12864314

ID: 37369
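The same failure shows up as -98, 0xffffff9e and 0xffffffffffffff9e (and -97 likewise in the earlier report) because a negative exit code is printed in two's complement at 32-bit and 64-bit widths. A small Python sketch of that conversion; what -97 and -98 mean inside the application is not explained in this thread.

```python
def exit_code_hex(code: int, bits: int) -> str:
    """Two's-complement hex form of a (possibly negative) exit code at the given bit width."""
    mask = (1 << bits) - 1
    return f"0x{code & mask:0{bits // 4}x}"


for code in (-97, -98):
    print(code, exit_code_hex(code, 32), exit_code_hex(code, 64))
# -97 0xffffff9f 0xffffffffffffff9f
# -98 0xffffff9e 0xffffffffffffff9e
```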

Same error here: `ERROR: file mdioload.cpp line 81: Unable to read bincoordfile`

- potx1x284-NOELIA_INSP-2-13-RND0923: WU 9908067
- potx1x225-NOELIA_INSP-5-13-RND8250: WU 9907982

Bye, Grubix.

ID: 37370

This error does not affect NOELIAs only; I had a SANTI_p53final fail on me the other day with the exact same error: `ERROR: file mdioload.cpp line 81: Unable to read bincoordfile`

ID: 37372
