Message boards : News : acemdlong application 8.14 - discussion

| Author | Message |

|---|---|

|

We decided to go live with the new application on ACEMD-Long. | |

| ID: 32322 | Rating: 0 | rate:

| |

|

Saying none available :( | |

| ID: 32324 | Rating: 0 | rate:

| |

|

Unfortunately I do not get new work on my GTX 670 (2048MB) driver: 311.6, although you mention that the application will assign the Cuda version correspondingly. | |

| ID: 32325 | Rating: 0 | rate:

| |

|

I think it's probably that there are no WUs right now and the web page doesn't update the count very often. | |

| ID: 32326 | Rating: 0 | rate:

| |

|

Are you producing new WUs at this very moment? I am asking because there were quite a lot (around 2000) a few days ago, but as somebody else noted, they disappeared very fast. | |

| ID: 32328 | Rating: 0 | rate:

| |

|

Noelia is checking a small batch and will then put 1000 on. | |

| ID: 32330 | Rating: 0 | rate:

| |

|

I am beyond impressed | |

| ID: 32342 | Rating: 0 | rate:

| |

|

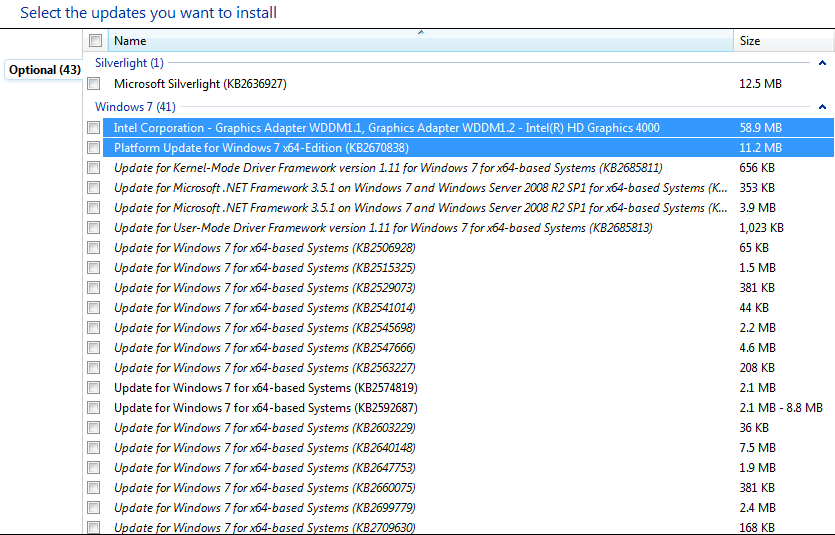

Not getting any work units, installed latest drivers: | |

| ID: 32343 | Rating: 0 | rate:

| |

Not getting any work units, installed latest drivers: Try the latest from here: https://developer.nvidia.com/opengl-driver Currently 326.84 Operator ____________ | |

| ID: 32344 | Rating: 0 | rate:

| |

|

Operator, please post your completed time on the long run for your Titan when finished. | |

| ID: 32345 | Rating: 0 | rate:

| |

|

Just updated to 320.49, the latest Nvidia driver for my 680. Boinc is telling me I still need to update my drivers in order to receive work. | |

| ID: 32347 | Rating: 0 | rate:

| |

Operator, please post your completed time on the long run for your Titan when finished. Nathan Kid on Titan 19k seconds 3.9ms per step | |

| ID: 32352 | Rating: 0 | rate:

| |

Just updated to 320.49, the latest Nvidia driver for my 680. Boinc is telling me I still need to update my drivers in order to receive work. Hi Matt, You can install those drivers safely, but you can find the latest drivers on nVidia's official site as well. Look here for the GTX680. If you then click on "start search" you get the latest for your card, 326.80. Hope this helps. Edit: it is a beta driver, but I have been using it for a week now on two rigs without any issues. ____________ Greetings from TJ | |

| ID: 32353 | Rating: 0 | rate:

| |

Just updated to 320.49, the latest Nvidia driver for my 680. Boinc is telling me I still need to update my drivers in order to receive work. 326.80 http://www.geforce.com/drivers @+ *_* ____________ | |

| ID: 32354 | Rating: 0 | rate:

| |

|

First long unit GPUGRID completed by my Titan. | |

| ID: 32358 | Rating: 0 | rate:

| |

We decided to go live with the new application on ACEMD-Long. Does the difference between 4.2 and 5.5 matter for Fermi cards, or can "we" stay on the current drivers performance-wise? ____________ DSKAG Austria Research Team: http://www.research.dskag.at | |

| ID: 32361 | Rating: 0 | rate:

| |

|

There seems to be a small improvement in the new application but not in the Cuda version. | |

| ID: 32363 | Rating: 0 | rate:

| |

|

Hello guys and gals ! | |

| ID: 32365 | Rating: 0 | rate:

| |

There seems to be a small improvement in the new application but not in the Cuda version. Well if you have a Titan or a 780 the apps now work! I think that was the point, at least it was for me (since March). Now... I wish the server would give me more than just one WU at a time since I have TWO GPUs. Operator ____________ | |

| ID: 32370 | Rating: 0 | rate:

| |

Now... I wish the server would give me more than just one WU at a time since I have TWO GPUs. I wish it would give me ANY WUs. Updated the 670 to 326.80 and STILL NO WORK :-( | |

| ID: 32371 | Rating: 0 | rate:

| |

|

Odd error on a long run for my 780: | |

| ID: 32373 | Rating: 0 | rate:

| |

|

Running dry of WUs on all machines. No long or short runs have been split for some time. As others say, it's not a driver issue, despite the new message from the server. | |

| ID: 32384 | Rating: 0 | rate:

| |

|

They are flowing now, thank you. | |

| ID: 32396 | Rating: 0 | rate:

| |

|

Ok, I'm getting wu's again, BUT I give up on what new app names to use in the app_config.xml file. Can anyone help me? Thanks in advance, Rick | |
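For readers with the same question, here is a minimal sketch of how an app_config.xml could be generated. It assumes the application short names are `acemdlong`, `acemdshort` and `acemdbeta`, which are the names that appear in event-log lines and posts elsewhere in this thread; the `gpu_usage` and `cpu_usage` values are placeholders, not recommendations, and the helper name `build_app_config` is made up for illustration.

```python
import xml.etree.ElementTree as ET

# Assumption: short names as they appear elsewhere in this thread.
APP_NAMES = ["acemdlong", "acemdshort", "acemdbeta"]


def build_app_config(app_names, gpu_usage=1.0, cpu_usage=1.0):
    """Build an <app_config> tree with one <app> entry per name."""
    root = ET.Element("app_config")
    for name in app_names:
        app = ET.SubElement(root, "app")
        ET.SubElement(app, "name").text = name
        gpu_versions = ET.SubElement(app, "gpu_versions")
        # Placeholder values: one task per GPU, one CPU core per task.
        ET.SubElement(gpu_versions, "gpu_usage").text = str(gpu_usage)
        ET.SubElement(gpu_versions, "cpu_usage").text = str(cpu_usage)
    return root


if __name__ == "__main__":
    # Prints the XML on one line; save it as app_config.xml in the
    # project's directory and re-read the config files in the client.
    print(ET.tostring(build_app_config(APP_NAMES), encoding="unicode"))
```

If a name does not match what the project actually uses, the BOINC client should complain about an unknown application in its event log, which is a quick way to check the assumption.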

| ID: 32406 | Rating: 0 | rate:

| |

Operator, please post your completed time on the long run for your Titan when finished. 5pot; The first 'non-beta' long WU for me was I79R10-NATHAN_KIDKIXc22_6-3-50-RND1517_0 at 19,232.48 seconds (5.34 hrs). http://www.gpugrid.net/workunit.php?wuid=4727415 The second long WU for me was I46R3-NATHAN_KIDKIXc22_6-2-50-RND7378_1 at 19,145.93 seconds (5.32 hrs). http://www.gpugrid.net/workunit.php?wuid=4723649 W7x64 Dell T3500 12GB Titan x2 (both EVGA factory OC'd, and on air - limited to 80C) 1100 MHz (ballpark freq, not fixed) running 326.84 drivers from the developer site. No crashes or funny business so far. Operator ____________ | |

| ID: 32414 | Rating: 0 | rate:

| |

|

So about another 1k seconds shaved off. | |

| ID: 32415 | Rating: 0 | rate:

| |

Hello Operator, you are the one I need to ask something. What is the PSU in your Dell and how many GPU power plugs do you have? I have a T7400 with a 1000W PSU but only two 6-pin and one unusual 8-pin GPU power plug, so I am very limited with this big box. Thanks, your answer is highly appreciated. ____________ Greetings from TJ | |

| ID: 32418 | Rating: 0 | rate:

| |

TJ; I've sent you a PM so as not to crosspost. Operator ____________ | |

| ID: 32428 | Rating: 0 | rate:

| |

There seems to be a small improvement in the new application but not in the Cuda version. Well that might have been the point of the new app but the question and answer that you have quoted was about performance increase for Fermi cards. So nothing at all to do with Titan or 780. | |

| ID: 32441 | Rating: 0 | rate:

| |

|

Getting the unknown error number crashes on kid WUs | |

| ID: 32512 | Rating: 0 | rate:

| |

|

More have crashed, with the exact same unknown error number. I don't know if this is from the 8.02 or whatever app that has been pushed out, but it only recently began doing this. | |

| ID: 32515 | Rating: 0 | rate:

| |

|

Please can we go back to 8.00 (or maybe 8.01)? | |

| ID: 32519 | Rating: 0 | rate:

| |

|

Operator, 5pot, | |

| ID: 32523 | Rating: 0 | rate:

| |

|

8.02 is, in general, doing better than 8.00 but it looks like there's some regression that's affecting a few machines. For now I've reverted acemdlong to 8.00 (which will appear as 8.03 because of a bug in the server). 8.02 will stay on acemdshort for continued testing. | |

| ID: 32524 | Rating: 0 | rate:

| |

Getting the unknown error number crashes on kid WUs It isn't the error number which is unknown, it's the plain-English description for it. Yours got a 0xffffffffc0000005: mine has just died with a 0xffffffffffffff9f, description equally unknown. Task 7221543. | |

| ID: 32527 | Rating: 0 | rate:

| |

|

I've not had any issues with 8.0, 8.1 or 8.2 on my 500 & 600 cards, either Linux or Win. The only problem now is getting fresh wu's from the server. | |

| ID: 32528 | Rating: 0 | rate:

| |

I've not had any issues with 8.0, 8.1 or 8.2 on my 500 & 600 cards, either Linux or Win. The only problem now is getting fresh wu's from the server. Me too for linux and 680 and Titan. | |

| ID: 32529 | Rating: 0 | rate:

| |

|

Short runs (2-3 hours on fastest card) 0 801 1.95 (0.10 - 9.83) 501 | |

| ID: 32530 | Rating: 0 | rate:

| |

|

Noelia has over 1000 WU to submit but we cannot as there are still these problems. | |

| ID: 32532 | Rating: 0 | rate:

| |

Getting the unknown error number crashes on kid WUs Ty for the clarification. | |

| ID: 32536 | Rating: 0 | rate:

| |

Getting the unknown error number crashes on kid WUs No problem. Looking a little further down, my 0xffffffffffffff9f (more legibly described as 'exit code -97') also says # Simulation has crashed. Since then, I've had another with the same failure: Task 7224143. | |
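The relationship between the two hexadecimal codes quoted above and their more readable forms can be sketched as follows. This assumes the codes are 64-bit two's-complement values as printed in the task output; `to_signed` and `describe` are illustrative helper names. The low 32 bits of the first code, 0xC0000005, are the standard Windows status code for an access violation.

```python
def to_signed(value, bits):
    """Interpret an unsigned integer as a two's-complement signed value."""
    value &= (1 << bits) - 1
    if value & (1 << (bits - 1)):
        value -= 1 << bits
    return value


def describe(code_hex):
    """Return (signed 64-bit value, low 32 bits) for a hex exit code."""
    raw = int(code_hex, 16)
    return to_signed(raw, 64), raw & 0xFFFFFFFF


if __name__ == "__main__":
    for code in ("0xffffffffffffff9f", "0xffffffffc0000005"):
        signed64, low32 = describe(code)
        print(f"{code}: signed = {signed64}, low 32 bits = 0x{low32:08X}")
```

Read this way, 0xffffffffffffff9f is the application's own exit code -97, while 0xffffffffc0000005 is an operating-system status rather than a code chosen by the application.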

| ID: 32538 | Rating: 0 | rate:

| |

Noelia has over 1000 WU to submit but we cannot as there are still these problems. Well, put 500 of them in the beta queue then please, so our rigs can run overnight while we sleep. ____________ Greetings from TJ | |

| ID: 32542 | Rating: 0 | rate:

| |

|

Using the 803-55 application now (on Titans) I received two NATHAN KIDKIX WUs | |

| ID: 32554 | Rating: 0 | rate:

| |

|

803 and 800 are the exact same binary. | |

| ID: 32555 | Rating: 0 | rate:

| |

803 and 800 are the exact same binary. Okay. I think I found the issue. My Precision X was off. Thanks, Back in the fast lane now. Operator. ____________ | |

| ID: 32556 | Rating: 0 | rate:

| |

|

...and 8.04 is what? | |

| ID: 32563 | Rating: 0 | rate:

| |

|

8.04 is a new beta app. Includes a bit more debugging to help me find the cause of the remaining common failure modes. | |

| ID: 32565 | Rating: 0 | rate:

| |

8.04 is a new beta app. Includes a bit more debugging to help me find the cause of the remaining common failure modes. Hello MJH, Do we need to post the error messages or links to them, or can you find them yourself on the server side? I have 6 errors with 8.04 for Harvey test and Noelia_Klebebeata. 8.00 and 8.02 did okay on my 660 and 770. I have the most errors on the 660; both cards have 2GB. ____________ Greetings from TJ | |

| ID: 32569 | Rating: 0 | rate:

| |

|

Hi, | |

| ID: 32570 | Rating: 0 | rate:

| |

|

As Stderr output reports: | |

| ID: 32613 | Rating: 0 | rate:

| |

|

EDIT to my previous post: Just so as not to make false accusations: the task "I83R9-NATHAN_baxbimx-4-6-RND5261_0" did not run until today because I forgot to end the suspension in BOINC Manager. It is running fine now. | |

| ID: 32635 | Rating: 0 | rate:

| |

|

The version 8.14 application that's been live on short is now out on acemdlong. | |

| ID: 32864 | Rating: 0 | rate:

| |

|

Hi! | |

| ID: 32883 | Rating: 0 | rate:

| |

|

While I wasn't getting them with 803, I haven't had a crash yet on my 780s. | |

| ID: 32885 | Rating: 0 | rate:

| |

|

Hi, MJH: | |

| ID: 32888 | Rating: 0 | rate:

| |

|

Hi Matt, For the last 3 days I sometimes see this in the output file: # BOINC suspending at user request (exit) However, I have done nothing; I was asleep, so the rig was unattended. It is there when a WU failed, but also with a success, as in the last Nathan LR. On the 770 everything works perfectly. Perhaps the latest drivers are optimized for the 7xx series by nVidia and not so good for the 6xx series of cards. ____________ Greetings from TJ | |

| ID: 32889 | Rating: 0 | rate:

| |

For the last 3 days I sometimes see this in the output file: Sometimes benchmarks automatically run, and GPU work gets temporarily suspended. Also, sometimes high-priority CPU jobs step in, and GPU work gets temporarily suspended. You can always look in Event Viewer, or the stdoutdae.txt file, for more information. | |

| ID: 32890 | Rating: 0 | rate:

| |

Hi Matt, Add this line to your cc_config file to stop the CPU benchmarks from running: <skip_cpu_benchmarks>1</skip_cpu_benchmarks> If you're using a version of BOINC above 7.0.55, you can go to Advanced > Read config files and the change will take effect. If you're using a version lower than 7.0.55, you'll have to shut down and restart BOINC for the change to take effect. | |
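As a sketch of where that line goes: the option sits inside the `<options>` element of cc_config.xml. The helper below (the name `set_skip_benchmarks` is made up for illustration) only transforms XML text and does not touch any file on disk, since the location of cc_config.xml depends on the installation.

```python
import xml.etree.ElementTree as ET


def set_skip_benchmarks(xml_text=None):
    """Return cc_config XML text with skip_cpu_benchmarks set to 1.

    Existing options are kept; a new <cc_config> is created when
    no text is supplied.
    """
    root = ET.fromstring(xml_text) if xml_text else ET.Element("cc_config")
    options = root.find("options")
    if options is None:
        options = ET.SubElement(root, "options")
    flag = options.find("skip_cpu_benchmarks")
    if flag is None:
        flag = ET.SubElement(options, "skip_cpu_benchmarks")
    flag.text = "1"
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(set_skip_benchmarks())
```

Starting from an existing file's contents keeps whatever other options were already configured.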

| ID: 32891 | Rating: 0 | rate:

| |

For the last 3 days I sometimes see this in the output file: I just did a brief test, where I ran CPU benchmarks manually using: Advanced -> Run CPU benchmarks. The result in the slot's stderr.txt file was: # BOINC suspending at user request (thread suspend) # BOINC resuming at user request (thread suspend) I then ran a test where I selected Activity -> Suspend GPU, then Activity -> Use GPU based on preferences. The result in the slot's stderr.txt file was: # BOINC suspending at user request (exit) So... I no longer believe your issue was caused by benchmarks. But it still could have been caused by high-priority CPU jobs. | |

| ID: 32892 | Rating: 0 | rate:

| |

Hi, MJH: John, I know you were asking MJH....I have a GTX 650TI (2GB) running the 326.84 drivers without any issues at all. http://www.gpugrid.net/show_host_detail.php?hostid=155526 Running the older drivers will result in your system getting tasks for the older CUDA version which is less efficient. The project is leaning forward in trying to eventually bring the codebase to the latest CUDA (5.5) version to keep up with the technology and newer GPUs. You would be wise to try and run newer drivers to allow your system to run as efficiently as possible. Again, I can verify that my GTX 650Ti runs fine on 326.84. Operator ____________ | |

| ID: 32893 | Rating: 0 | rate:

| |

|

Many thanks, Operator!! | |

| ID: 32896 | Rating: 0 | rate:

| |

|

John, | |

| ID: 32897 | Rating: 0 | rate:

| |

|

I don't know what happened here but none of my tasks are running. They start, run for a second or two, get a fraction of a percent done, then just stop utilizing the video card. The timer in BOINC is running but no progress is being made and I can see they are not hitting the cards for any work. | |

| ID: 32898 | Rating: 0 | rate:

| |

|

Hi, Jim: | |

| ID: 32899 | Rating: 0 | rate:

| |

|

TJ - that message probably doesn't indicate that the client is running benchmarks unless you have a version or configuration I've not seen. For benchmarking the message is along the lines of '(thread suspended)' not '(exit)'. | |

| ID: 32900 | Rating: 0 | rate:

| |

|

Ascholten - which of your machines is the problematic one? | |

| ID: 32901 | Rating: 0 | rate:

| |

Same code base, compiled with two different compiler versions. 4.2 is very old now and we need to move to 5.5 to stay current and be ready for future hardware. (We had to skip 5.0 because of unresolved performance problems) Matt | |

| ID: 32902 | Rating: 0 | rate:

| |

Is 5.5 supposed to be any faster? I didn't notice any difference in crunching speed. | |

| ID: 32905 | Rating: 0 | rate:

| |

Nope. M | |

| ID: 32906 | Rating: 0 | rate:

| |

|

Then I will stay with driver: 311.6 and CUDA 4.2. Works great for me! | |

| ID: 32909 | Rating: 0 | rate:

| |

FWIW 5.5 was about 5 minutes faster on the betas than the 4.2 for me. http://www.gpugrid.net/result.php?resultid=7264729 <--4.2 http://www.gpugrid.net/result.php?resultid=7265118 <--5.5 Long runs seem to be a little faster also. 18-20 minutes http://www.gpugrid.net/result.php?resultid=7226082 <--4.2 http://www.gpugrid.net/result.php?resultid=7232613<--5.5 | |

| ID: 32910 | Rating: 0 | rate:

| |

TJ - that message probably doesn't indicate that the client is running benchmarks unless you have a version or configuration I've not seen. For benchmarking the message is along the lines of '(thread suspended)' not '(exit)'. Aha, suspended is indeed something different from exit. Thanks for letting me know. Only 5 Rosetta tasks are running on this computer with two 4-core Xeons, so 3 real cores are free to run GPUGRID and other things like antivirus. Indeed my AV runs at night a few times a week, but when I watched I have never seen it suspend any BOINC work. And we don't have a cat or dog :) Well, as long as the WU resumes and does not result in an error, it is okay. ____________ Greetings from TJ | |

| ID: 32915 | Rating: 0 | rate:

| |

|

Tools, Computing Preferences, Processor usage tab, | |

| ID: 32917 | Rating: 0 | rate:

| |

Tools, Computing Preferences, Processor usage tab, I will try that immediately. ____________ Greetings from TJ | |

| ID: 32918 | Rating: 0 | rate:

| |

|

MJH; | |

| ID: 32919 | Rating: 0 | rate:

| |

|

Operator,

Those log messages are printed in response to suspend/resume signals received from the client. Quite why that's happening so frequently here isn't clear, but it looks very much like a client error. If it helps you to diagnose it, I think thread suspending should only happen when the client runs its benchmarks, with other suspend events causing the app to exit ("user request (exit)"). MJH | |

| ID: 32920 | Rating: 0 | rate:

| |

|

Event Log may also give more insight into why the client is suspending/resuming. I don't think it's a client error, but rather user configuration that is making it do so. | |

| ID: 32921 | Rating: 0 | rate:

| |

|

MJH; | |

| ID: 32922 | Rating: 0 | rate:

| |

|

Operator, | |

| ID: 32923 | Rating: 0 | rate:

| |

Operator, As in task state "2"? I may need a little direction here. Oh, and the Titan machine keeps switching to waiting WUs and then back again. Meaning it will work on two of them for 10 or 20% and then stop and start on the other two in the queue, and then go back to the first two. Also I get the "waiting access violation" thing now and again. And as I said before the temps are running 64-66 degrees C, which is really strange. Nowhere near the 81-82 limits they ran at with 8.03. I have already reset the project a couple of times thinking that would fix this. It hasn't. So I'm at a bit of a loss right now. It doesn't seem to matter if my Precision X utility is on or not. Operator. ____________ | |

| ID: 32924 | Rating: 0 | rate:

| |

|

Operator, what setting do you have in place for, Switch Between Applications Every (time)? | |

| ID: 32925 | Rating: 0 | rate:

| |

And that's in addition to the "thread suspend"ing? Can you say how frequently that is happening (watch the output to the stderr file as the app is running)? Does the task state that the client reports (running, suspended, etc.) match, or is it always showing the task as running, even when you can see that it has suspended? Could you try running just a single task, and see whether that behaves itself? Use the app_config setting Beyond advises, here: http://www.gpugrid.net/forum_thread.php?id=3473&nowrap=true#32913 Matt | |

| ID: 32926 | Rating: 0 | rate:

| |

I've just changed the computing prefs from 60.0 minutes to 990.0. Don't know why this Titan box would start hopping around now when it never has before, and the GTX590 box has the exact same settings and doesn't do it with the 4 WUs it has waiting in the queue... but... And as for the stderr: check this out, this is just a portion.
9/12/2013 8:09:37 PM | GPUGRID | Restarting task e7s7_e6s12f416-SDOERR_VillinAdaptN2-0-1-RND2117_0 using acemdlong version 814 (cuda55) in slot 1
9/12/2013 8:09:37 PM | GPUGRID | Restarting task e7s15_e4s11f480-SDOERR_VillinAdaptN4-0-1-RND1175_0 using acemdlong version 814 (cuda55) in slot 3
9/12/2013 8:14:11 PM | GPUGRID | Restarting task I93R10-NATHAN_KIDc22_glu-1-10-RND9319_0 using acemdlong version 814 (cuda55) in slot 0
9/12/2013 8:15:26 PM | GPUGRID | Restarting task e7s7_e6s12f416-SDOERR_VillinAdaptN2-0-1-RND2117_0 using acemdlong version 814 (cuda55) in slot 1
9/12/2013 8:17:38 PM | GPUGRID | Restarting task e7s15_e4s11f480-SDOERR_VillinAdaptN4-0-1-RND1175_0 using acemdlong version 814 (cuda55) in slot 3
9/12/2013 8:19:38 PM | GPUGRID | Restarting task I93R10-NATHAN_KIDc22_glu-1-10-RND9319_0 using acemdlong version 814 (cuda55) in slot 0
9/12/2013 8:22:10 PM | GPUGRID | Restarting task e7s7_e6s12f416-SDOERR_VillinAdaptN2-0-1-RND2117_0 using acemdlong version 814 (cuda55) in slot 1
9/12/2013 8:23:00 PM | GPUGRID | Restarting task e7s15_e4s11f480-SDOERR_VillinAdaptN4-0-1-RND1175_0 using acemdlong version 814 (cuda55) in slot 3
9/12/2013 8:27:13 PM | GPUGRID | Restarting task I93R10-NATHAN_KIDc22_glu-1-10-RND9319_0 using acemdlong version 814 (cuda55) in slot 0
9/12/2013 8:28:06 PM | GPUGRID | Restarting task e7s15_e4s11f480-SDOERR_VillinAdaptN4-0-1-RND1175_0 using acemdlong version 814 (cuda55) in slot 3
9/12/2013 8:30:32 PM | GPUGRID | Restarting task e7s7_e6s12f416-SDOERR_VillinAdaptN2-0-1-RND2117_0 using acemdlong version 814 (cuda55) in slot 1
9/12/2013 8:36:27 PM | GPUGRID | Restarting task I93R10-NATHAN_KIDc22_glu-1-10-RND9319_0 using acemdlong version 814 (cuda55) in slot 0
9/12/2013 8:39:18 PM | GPUGRID | Restarting task e7s7_e6s12f416-SDOERR_VillinAdaptN2-0-1-RND2117_0 using acemdlong version 814 (cuda55) in slot 1
9/12/2013 8:48:54 PM | GPUGRID | Restarting task e7s15_e4s11f480-SDOERR_VillinAdaptN4-0-1-RND1175_0 using acemdlong version 814 (cuda55) in slot 3
9/12/2013 8:51:12 PM | GPUGRID | Restarting task e7s7_e6s12f416-SDOERR_VillinAdaptN2-0-1-RND2117_0 using acemdlong version 814 (cuda55) in slot 1
9/12/2013 8:55:34 PM | GPUGRID | Restarting task I93R10-NATHAN_KIDc22_glu-1-10-RND9319_0 using acemdlong version 814 (cuda55) in slot 0
9/12/2013 8:56:47 PM | GPUGRID | Restarting task e7s7_e6s12f416-SDOERR_VillinAdaptN2-0-1-RND2117_0 using acemdlong version 814 (cuda55) in slot 1
9/12/2013 9:01:11 PM | GPUGRID | Restarting task e7s15_e4s11f480-SDOERR_VillinAdaptN4-0-1-RND1175_0 using acemdlong version 814 (cuda55) in slot 3
I will try running just one WU (suspending the others) and see what happens. Operator ____________ | |
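A log excerpt like the one above is easier to judge once the restarts are counted per task. The following is a minimal sketch that assumes the event-log entry format shown in this post (month/day/year timestamps with AM/PM, then "| GPUGRID | Restarting task ..."); the function names and the shortened `SAMPLE` text are illustrative only.

```python
import re
from collections import defaultdict
from datetime import datetime

# Matches one "Restarting task" entry, even when entries run together.
ENTRY = re.compile(
    r"(\d{1,2}/\d{1,2}/\d{4} \d{1,2}:\d{2}:\d{2} [AP]M) \| GPUGRID \| "
    r"Restarting task\s+(\S+) using (\S+) version (\d+)"
)


def restart_times(log_text):
    """Map each task name to the list of its restart timestamps."""
    times = defaultdict(list)
    for stamp, task, _app, _version in ENTRY.findall(log_text):
        times[task].append(datetime.strptime(stamp, "%m/%d/%Y %I:%M:%S %p"))
    return times


def summarize(log_text):
    """Return {task: (restart count, mean seconds between restarts)}."""
    summary = {}
    for task, stamps in restart_times(log_text).items():
        stamps.sort()
        gaps = [(b - a).total_seconds() for a, b in zip(stamps, stamps[1:])]
        summary[task] = (len(stamps), sum(gaps) / len(gaps) if gaps else None)
    return summary


# Three entries copied from the excerpt above, joined as in the post.
SAMPLE = (
    "9/12/2013 8:14:11 PM | GPUGRID | Restarting task "
    "I93R10-NATHAN_KIDc22_glu-1-10-RND9319_0 using acemdlong version 814 "
    "(cuda55) in slot 0 9/12/2013 8:15:26 PM | GPUGRID | Restarting task "
    "e7s7_e6s12f416-SDOERR_VillinAdaptN2-0-1-RND2117_0 using acemdlong "
    "version 814 (cuda55) in slot 1 9/12/2013 8:19:38 PM | GPUGRID | "
    "Restarting task I93R10-NATHAN_KIDc22_glu-1-10-RND9319_0 using "
    "acemdlong version 814 (cuda55) in slot 0"
)

if __name__ == "__main__":
    for task, (count, mean_gap) in summarize(SAMPLE).items():
        print(task, count, mean_gap)
```

Because the pattern is applied with `findall`, it works whether the log is pasted one entry per line or as a single run of text.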

| ID: 32927 | Rating: 0 | rate:

| |

Operator, what setting do you have in place for, Switch Between Applications Every (time)? I use a higher setting too but this should only apply when running more than 1 project. If it's switching between WUs of the same project it's a BOINC bug (heaven forbid) and should be reported. | |

| ID: 32930 | Rating: 0 | rate:

| |

|

My 780 completes them fine. But has constant access violations. | |

| ID: 32931 | Rating: 0 | rate:

| |

Operator, what setting do you have in place for, Switch Between Applications Every (time)? That is one side-effect of the "BOINC temporary exit" procedure, which I think is what Matt Harvey is using for his new crash-recovery application. When an internal problem occurs, the new v8.14 application exits completely, and as far as BOINC knows, the GPU is free and available to schedule another task. If you have another task - from this or any other project - ready to start, BOINC will start it. On my system, with (still) a stupidly high DCF, the sequence is: (1) Task 1 errors and exits; (2) Task 2 starts on the vacant GPU; (3) Task 1 becomes ready ('waiting to run') again; (4) BOINC notices a deadline miss looming; (5) BOINC pre-empts Task 2, and restarts Task 1, marking it 'high priority'. That results in Task 2 showing 'Waiting to run', with a minute or two of runtime completed, before Task 1 finally completes. With more normal estimates and no EDF, Task 1 and Task 2 would swap places every time a fault and temporary exit occurred. Even if you run minimal cache and don't normally fetch the next task until shortly before it is needed, you may find that a work fetch is triggered by the first error and temporary exit, and the swapping behaviour starts after that. If the tasks swap places more than a few times each run, your GPU is marginal and you should investigate the cause. | |

| ID: 32932 | Rating: 0 | rate:

| |

I'm not sure what your last statement means "your GPU is marginal". I have reviewed some completed WUs for another Titan host to see if we were having similar issues. http://www.gpugrid.net/results.php?hostid=156948 And this host (Anonymous) is posting completion times I used to get while running the 8.03 long app. The major thing that jumped out at me was this box is running driver 326.41. Still showing lots of "Access violations" though. I believe I'm running 326.84. I just can't figure out why this just started seemingly out of the blue. I don't remember making any changes to the system and this box does nothing but GPUGrid. Operator ____________ | |

| ID: 32933 | Rating: 0 | rate:

| |

I'm not sure what your last statement means "your GPU is marginal". For me, a marginal GPU is one which throws a lot of errors, and a GPU which throws a lot of errors is marginal, even if it recovers from them. Marginal being faulty, badly installed, badly maintained, or just in need of some TLC. Things like overclocking, overheating, under-ventilating, under-powering (small PSU), badly seating (in PCIe slot) - anything which makes it unhappy. | |

| ID: 32934 | Rating: 0 | rate:

| |

|

Error -97s are a strong indication that the GPU is misbehaving. When I get around to it, I'll sort out a memory testing program for you all to test for this. The access violations are indicative of GPU hardware problems. There are a few hosts that are disproportionately affected by these (Operator, 5pot) and it's not clear why. I suspect there's a relationship with some third-party software, but don't yet have a handle on it. | |

| ID: 32935 | Rating: 0 | rate:

| |

Error -97s are a strong indication that the GPU is misbehaving. When I get around to it, I'll sort out a memory testing program for you all to test for this. The access violations are indicative of GPU hardware problems. There are a few hosts that are disproportionately affected by these (Operator, 5pot) and it's not clear why. I suspect there's a relationship with some third-party software, but don't yet have a handle on it. Well, my GTX660 has a lot of -97 errors, especially with the CRASH (Santi) tests. LRs and the Noelias are handled error free on it most of the time. Einstein, Albert and Milkyway run almost error free on it too. So if it were something in the PC or other software (I don't have much installed), wouldn't it also result in 50% errors with the other 3 GPU projects? ____________ Greetings from TJ | |

| ID: 32936 | Rating: 0 | rate:

| |

Well, my GTX660 has a lot of -97 errors, especially with the CRASH (Santi) tests. LRs and the Noelias are handled error free on it most of the time. Einstein, Albert and Milkyway run almost error free on it too. So if it were something in the PC or other software (I don't have much installed), wouldn't it also result in 50% errors with the other 3 GPU projects? No. Different projects have different apps, which use different parts of the GPU (causing different GPU usage and/or power draw). I have two Asus GTX 670 DC2OG 2GD5 (factory overclocked) cards in one of my hosts, and they were unreliable with some batches of workunits. I upgraded the MB/CPU/RAM in this host, but its reliability stayed low with those batches. Even the WLAN connection was lost when the GPU-related failures happened. Then I found a voltage tweaking utility for the Kepler-based cards, and with the help of this utility I raised the GPU's boost voltage by 25mV, and its power limit by 25W. Since then this host hasn't had any GPU-related errors. Maybe you should give it a try too. It's quite a simple tool; if you put nvflash beside its files, it can directly flash the modified BIOS. | |

| ID: 32943 | Rating: 0 | rate:

| |

|

The *only* other thing running besides GPUgrid, was WCG. And I mean no anti-virus, nothing at all. Still got the access violations. So I suspended the WCG app. Still the access violations occurred. | |

| ID: 32944 | Rating: 0 | rate:

| |

|

Thanks Zoltan, I will install them and try tomorrow (later today after sleep). | |

| ID: 32945 | Rating: 0 | rate:

| |

Error -97s are a strong indication that the GPU is misbehaving. When I get around to it, I'll sort out a memory testing program for you all to test for this. The access violations are indicative of GPU hardware problems. There are a few hosts that are disproportionately affected by these (Operator, 5pot) and it's not clear why. I suspect there's a relationship with some third-party software, but don't yet have a handle on it. Yesterday evening in response to Mr. Haselgrove's post I decided to do a thorough review of my Titan system. I removed the 326.84 drivers and did a clean install of the 326.41 drivers. Removed the Precision X utility completely. Removed, inspected and reinstalled both Titan GPUs. No dust or other foreign contaminants were found inside the case or GPU enclosures. All cabling checks out. Confirmed all bios settings were as originally set and correct. No other programs are starting with Windows except Teamviewer (which is on all my systems and was long before these problems started). I reinstalled BOINC from scratch with no settings saved from the old installation. I setup GPUGrid all over again (new machine number now). No appconfig or any other XML tweak files are present. Both GPUs are running with factory settings (EVGA GTX Titan SC). I downloaded two long 8.14 WUs and they started. Then the second two downloaded and went from "Ready to Start" to "Waiting to Run" as the first two paused and the system started processing the newly arrived ones. And then after a few minutes back again to the first two that were downloaded. Temps the whole time were nominal - that is to say in the 70's C. I monitored the task manager and occasionally would see one of the 814 apps drop off, leaving only one running, and then after a minute or so there would be two of them running again. 
The results show multiple access violations: http://www.gpugrid.net/result.php?resultid=7277767 http://www.gpugrid.net/result.php?resultid=7267751 It is also taking longer to complete these WUs using 8.14 (than with the 8.03 app). Again, this started for me with the 8.14 app. None of this happened on the 8.03 app; even with a bit of overclocking it was dead stable. After what I went through last evening, I am confident that this issue is not with my system. I have now set this box to do betas only. Update: the betas (8.14) are doing the same swapping that the normal long WUs were doing. I have to manually suspend two of them to keep this from happening. On the other hand, my dual GTX 590 system is happy as a clam. Two steps forward, one step back. Operator ____________ | |

| ID: 32954 | Rating: 0 | rate:

| |

|

Yours appear to be happening much more frequently than mine. Even after I increased the voltage, still get access violations. | |

| ID: 32955 | Rating: 0 | rate:

| |

And now...
9/14/2013 12:44:56 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 12:48:12 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 12:51:13 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 12:54:26 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 12:57:43 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:00:45 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:03:48 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:07:02 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:10:19 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:13:22 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:16:24 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:19:27 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:22:29 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:25:32 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:28:34 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:31:38 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:34:40 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:37:33 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:40:50 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:43:52 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:46:56 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:49:59 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:53:02 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:56:04 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
9/14/2013 1:58:56 PM | GPUGRID | Restarting task 152-MJHARVEY_CRASH2-24-25-RND4251_0 using acemdbeta version 814 (cuda55) in slot 0
So even with the two waiting WUs manually suspended, the ones that are supposed to be running keep starting and stopping. I really have no idea what could be causing this. Operator ____________ | |

| ID: 32957 | Rating: 0 | rate:

| |

|

Hello Operator, | |

| ID: 32961 | Rating: 0 | rate:

| |

Hello Operator, TJ I thought of taking one card out to see what happens and will probably try that tomorrow. I observed today running beta apps (8.14) that there were times when both WUs were "Waiting to Run" Scheduler: Access Violation. Meaning with only two WUs, one for each GPU, nothing was getting done because the app(s) had temporarily shutdown. So I would expect that with just one card the same thing would happen, just one WU and sometimes it would just stop and then restart. I will try that tomorrow anyway. Operator ____________ | |

| ID: 32963 | Rating: 0 | rate:

| |

|

Operator: this sounds like your card(s ?) may experience computation errors which were not detected by the 8.03 app. I don't know for sure, but it could well be that the new recovery mode also added enhanced detection of faults. What I'd try: | |

| ID: 32970 | Rating: 0 | rate:

| |

|

This morning I saw that my GTX660 was downclocked to 50% again (would be nice Matt if we can see that in the stderr output file), so I rebooted the system. # GPU 0 : 64C SWAN : FATAL : Cuda driver error 999 in file 'swanlibnv2.cpp' in line 1963. # SWAN swan_assert 0 # GPU [GeForce GTX 660] Platform [Windows] Rev [3203] VERSION [42] # SWAN Device 0 : # Name : GeForce GTX 660 I have never seen this before. Perhaps "strange" things happened in the past as well, but now we can look for them. ____________ Greetings from TJ | |

| ID: 32977 | Rating: 0 | rate:

| |

|

I think Richard's explanation sums up what's going on well. <cc_config> <options> <use_all_gpus>1</use_all_gpus> <exclude_gpu> <url>http://www.gpugrid.net/</url> <device_num>0</device_num> </exclude_gpu> </options> </cc_config>

| |

| ID: 32978 | Rating: 0 | rate:

| |

|

Currently when the app does a temporary exit it tells the client to wait 30secs before attempting a restart. I'll probably change this to an immediate restart; this should minimise the opportunity for the client to chop and change tasks. | |

| ID: 32980 | Rating: 0 | rate:

| |

|

http://www.gpugrid.net/result.php?resultid=7280096 | |

| ID: 32982 | Rating: 0 | rate:

| |

|

I don't think the cards are too hot. I have had a GTX550Ti running at 79°C 24/7 with this project and almost no errors. | |

| ID: 32983 | Rating: 0 | rate:

| |

|

I've dropped the clock, and still get the violations. | |

| ID: 32985 | Rating: 0 | rate:

| |

I don't think the cards are too hot. I have had a GTX550Ti running at 79°C 24/7 with this project and almost no errors. I don't think you can generalize from one card to the next even if they are in the same series, much less from a GTX550Ti to a GTX 660. If it fails when it hits a certain temperature, that looks like a smoking gun to me. | |

| ID: 32993 | Rating: 0 | rate:

| |

|

Perhaps most of the access violations were already being recovered by the card while running the earlier apps; there are errors that the card can recover without intervention (recoverable errors). | |

| ID: 32995 | Rating: 0 | rate:

| |

|

Okay so let's take a look at somebody else. | |

| ID: 32999 | Rating: 0 | rate:

| |

|

MJH | |

| ID: 33001 | Rating: 0 | rate:

| |

|

We only run Linux in-house. | |

| ID: 33002 | Rating: 0 | rate:

| |

|

The access violations appear to come from deep inside NVIDIA code. Maybe I'll have to get a Titan machine bumped to Windows for testing, since there's a limit to the information I can get remotely.

That's just because the client is using a 32bit integer to hold the memory size. 4GB is largest value representable in that datatype. MJH | |

| ID: 33003 | Rating: 0 | rate:

| |
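The 4GB ceiling MJH describes falls straight out of the arithmetic. The sketch below is only an illustration: it assumes the client stores the byte count in an unsigned 32-bit field and saturates at the maximum rather than wrapping (the thread does not say which), and `reported_memory` is a made-up helper name. The 6 GB figure is the memory size of a GTX Titan.

```python
# Largest value an unsigned 32-bit integer can hold, in bytes.
UINT32_MAX = 2**32 - 1


def reported_memory(actual_bytes: int) -> int:
    """Hypothetical helper: byte count after squeezing it into a uint32.

    Assumes the value saturates at the maximum instead of wrapping around.
    """
    return min(actual_bytes, UINT32_MAX)


titan_bytes = 6 * 2**30                    # 6 GiB card
shown = reported_memory(titan_bytes)
print(shown, "bytes, about", shown // 2**20, "MiB")

small_card = 2 * 2**30                     # a 2 GiB card fits, so it is unchanged
print(reported_memory(small_card) == small_card)
```

Anything above 4 GiB would therefore show up as just under 4096 MiB, while smaller cards are reported correctly.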

|

Well, that's one step forward. Best of luck. Deep inside NVIDIA code doesn't sound good, but for me, while it does slow the tasks down, I'm just happy they're running and validating. | |

| ID: 33007 | Rating: 0 | rate:

| |

The access violations appear to come from deep inside NVIDIA code. Maybe I'll have to get a Titan machine bumped to Windows for testing, since there's a limit to the information I can get remotely. Thank you sir for the 32 bit reference on the memory size, makes perfect sense. While we're on that topic, I don't suppose there is any hope of compiling a 64 bit version of the app and sending that out for testing? It's my understanding that either a 32 bit or 64 bit app can be compiled by the toolkit. Is this correct? What's the downside of doing this? Thanks, Operator ____________ | |

| ID: 33008 | Rating: 0 | rate:

| |

No, the Windows application will stay 32bit for the near future, since that will work on all hosts. Importantly, there's no performance advantage in a 64bit version. It may happen in the future, but not until 1) after the transition to Cuda 5.5 is complete, and 2) 32bit hosts contribute an insignificant fraction of our compute capability. Matt | |

| ID: 33009 | Rating: 0 | rate:

| |

The app will attempt recovery if it ran long enough to make a new checkpoint file. If it starts and crashes before that point, it will just abort the task, to avoid getting stuck in a loop.

My experience is that you get best performance out of a Titan if the temperature is below 78C. By 80 it is throttling. Over 80 and the thermal environment is too challenging for it to maintain its target (the card will be spending most of its time in the lowest performance state), and you should really try and improve the cooling. Just turning up the fanspeed can have counter-intuitive effects. When we tried this on our chassis, the increased airflow through the GPUs hindered airflow around the cards, actually making the top parts of the card away from the thermal sensors hotter. MJH | |

| ID: 33011 | Rating: 0 | rate:

| |

|

For tests you could just grab an old spare HDD and install some Windows on it - it doesn't even need to be activated. | |

| ID: 33014 | Rating: 0 | rate:

| |

MrS; Easy enough to find out. Here's the results of the last 4 WUs crunched on the Titan system using 8.03 before 8.14 got downloaded automatically: http://www.gpugrid.net/result.php?resultid=7264399 http://www.gpugrid.net/result.php?resultid=7263637 http://www.gpugrid.net/result.php?resultid=7262985 http://www.gpugrid.net/result.php?resultid=7262745 I don't see any evidence of either errors or Access violations. And here is the very first WU running on the 8.14 app: http://www.gpugrid.net/result.php?resultid=7265074 So I don't think it's about heat issues, third party software, tribbles in the vent shafts, moon phases, any of that. I've been through this system thoroughly. It clearly started with 8.14. From the very first 8.14 WU I got. Operator ____________ | |

| ID: 33017 | Rating: 0 | rate:

| |

|

What you're forgetting is that it most likely wasn't reporting the error since that time point. He added more debug information as time went on. | |

| ID: 33022 | Rating: 0 | rate:

| |

|

Operator, you and I don't think it is a heat issue, but the temperature readings were absent in 8.03 and were introduced between 8.04 and 8.14. | |

| ID: 33024 | Rating: 0 | rate:

| |

|

For these access violation problems, it seems that I'm going to have to set up a Windows system with a Titan in the lab and try to reproduce it. Unfortunately I'll not be back to do that until mid October at the earliest. I hope you can tolerate the current state of affairs until then? | |

| ID: 33026 | Rating: 0 | rate:

| |

|

In the afternoon I looked at my PC and just by coincidence I saw a WU stop (CRASH) and then another one start, but the GPU clock dropped to half when that happened. Nothing I tried (suspending, resuming, the EVGA software) got the clock up again other than rebooting the system, 1 day and 11 hours after its last reboot for the same issue. # BOINC suspending at user request (exit) I did nothing, and the PC was only doing GPUGRID and 5 Rosetta WUs on the CPU. The virus scanner was not in use; it only runs at night. And I used the line from Operator in cc_config to never run a benchmark. I think Matt has made a good diagnostic program, and we now get to see things we never saw before but that could have been happening all along. It would be nice, though, to see somewhere what all these messages mean (and what we could or could not do about them). But only when you have time, Matt; we know you are busy with programming and you need to get your PhD as well. I am now 3 days error free even on my 660, so things have improved, for me at least. Thanks for that. ____________ Greetings from TJ | |

| ID: 33028 | Rating: 0 | rate:

| |

For these access violation problems, it seems that I'm going to have to set up a Windows system with a Titan in the lab and try to reproduce it. Unfortunately I'll not be back to do that until mid October at the earliest. I hope you can tolerate the current state of affairs until then? Like I said, they're running and validating. Fine with me | |

| ID: 33030 | Rating: 0 | rate:

| |

I hope you can tolerate the current state of affairs until then? Matt; Will have to do. Thanks for looking into it though. That's encouraging. As I indicated I would, I removed one GPU and booted up to run long WUs. Got one NATHAN_KIDc downloaded and running and the second, a NOELIA_INS "Ready to Start". After one hour I came back to check and sure enough the first one had stopped and was now "Waiting to run" and the second one was running. I'm sure they'll swap back and forth again several times before completion. Operator ____________ | |

| ID: 33032 | Rating: 0 | rate:

| |

|

Operator: | |

| ID: 33033 | Rating: 0 | rate:

| |

|

Here's an anecdotal story, based on a random sample of one. YMMV - in fact, your system will certainly be different - but this may be of interest. | |

| ID: 33035 | Rating: 0 | rate:

| |

|

Jacob; | |

| ID: 33038 | Rating: 0 | rate:

| |

Richard; Thanks. My system board (Dell) has no integrated Intel HD video, discrete only. I do have the platform updates already installed, and in fact have most if not all of the other updates you show there installed as well. I actually did have Nvidia driver version 326.84 installed and reverted back to a clean install of 326.41 to determine if that had anything to do with the problem, but apparently it didn't. I think it's the way the 8.14 app runs on 780/Titan GPUs that is the issue. I don't see any of these problems with apps running on my 590 box. Matt (MJH) says he's going to have a go at investigating when he gets a chance. I'm considering doing a Linux build to see if that makes any difference because it seems that the development branches may be different for Windows vs Linux GPUGrid apps. But I have very little experience with Linux in general so this would be time consuming for me to get spun up on. Operator ____________ | |

| ID: 33040 | Rating: 0 | rate:

| |

|

Hi, Folks | |

| ID: 33041 | Rating: 0 | rate:

| |

|

Operator, | |

| ID: 33043 | Rating: 0 | rate:

| |

Hi, Folks I wouldn't pay much attention to the forecast. See what the actual run time is; it should be about 18 hours. | |

| ID: 33044 | Rating: 0 | rate:

| |

Hi, Folks Many thanks, Jim. Regards, John | |

| ID: 33045 | Rating: 0 | rate:

| |

What could be interesting is whether these access violations already happened in 8.03 but had no visible effect, or if they're caused by some change made to the app since then. If I remember correctly Matt only introduced the error handling with 8.11. And may have also improved the error detection. So I still think it's possible that whatever triggers the error detection now was happening before, but did not actually harm the WUs. It's just one possibility, though, which I don't think we can answer. Matt, would it be sufficient if you got remote access to a Titan on Win? I don't have any, but others might want to help. That would certainly be quicker than to set the system up yourself.. although you might want to have some Windows system to hunt nasty bugs anyway. MrS ____________ Scanning for our furry friends since Jan 2002 | |

| ID: 33048 | Rating: 0 | rate:

| |

What could be interesting is whether these access violations already happened in 8.03 but had no visible effect, or if they're caused by some change made to the app since then. MrS; Looking back at the last 10 or so SANTI_RAP, NOELIA-INSP, and NATHAN_KIDKIX WUs that were run on the 8.03 app just before the switch to 8.14... http://www.gpugrid.net/results.php?hostid=158641&offset=20&show_names=1&state=0&appid= you can see that average completion times were about 20k. After 8.14? Sometimes double that due to the constant restarts. So even if error checking was introduced with version 8.11, and there may have been hidden errors created when running the 8.03 app (I'm not sure how that follows logically though), the near doubling of the work unit completion times immediately upon initial usage of the 8.14 app is enough of a smoking gun that there is something amiss. And that is the real problem here I think, the amount of time it takes a WU to complete due to all the starts and stops. That directly impacts the number of WUs that this system (and other Titan/780 equipped systems like it) can get returned. If you like, look at it from the perspective of the "return on the Kilowatts consumed". Now, I am perfectly happy to wait till Matt has a chance to do some testing, and see where that takes us. I'll put the second Titan GPU back in the case and continue as before until...whatever. Operator ____________ | |

| ID: 33049 | Rating: 0 | rate:

| |

|

A CRASHNPT was suspended, still 3% to finish, and another was running. I suppose this happened due to the "termination by the app to avoid hangup". So I suspended the other WU, and the one that was almost finished started again but failed immediately. So is manual suspending not working properly anymore, or is it because the app stopped it itself? | |

| ID: 33054 | Rating: 0 | rate:

| |

As an example of what I was referring to above: With one Titan GPU installed and only one WU downloaded and crunching, the amount of time 'wasted' by the "Scheduler: Access violation, Waiting to Run" issue for I59R6-NATHAN_KIDc22_glu-6-10-RND3767_1 was 2 hours 47 minutes and 31 seconds of nothing happening. This data came from the stdoutdae.txt file and was imported into Excel where the 'gaps' between restarts for this WU were totalled up. So this WU could have finished in 'real time' (not GPU time) almost three hours earlier than it did and would have allowed another WU to have been mostly completed if not for all the restarts. Let me know if anybody sees this a different way. Operator ____________ | |

| ID: 33056 | Rating: 0 | rate:

| |
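For anyone who wants to repeat Operator's gap calculation without Excel, here is a rough Python sketch. It assumes log lines in the `date time | project | message` layout shown in the event log excerpts earlier in this thread (stdoutdae.txt may use a different timestamp layout, so the format string is an assumption), and the function names are made up for illustration. Note that it measures the interval between consecutive "Restarting task" entries, which is only an upper bound on idle time because it also includes whatever computing happened in between.

```python
from datetime import datetime, timedelta


def parse_restart_times(log_lines, task_name):
    """Collect timestamps of 'Restarting task <task_name>' entries."""
    times = []
    for line in log_lines:
        parts = [p.strip() for p in line.split("|")]
        if len(parts) < 3:
            continue  # not a 'time | project | message' line
        stamp, message = parts[0], parts[2]
        if message.startswith("Restarting task " + task_name):
            # Assumed timestamp layout, e.g. '9/14/2013 12:44:56 PM'
            times.append(datetime.strptime(stamp, "%m/%d/%Y %I:%M:%S %p"))
    return times


def restart_gaps(times):
    """Intervals between consecutive restarts, in log order."""
    return [later - earlier for earlier, later in zip(times, times[1:])]


task = "152-MJHARVEY_CRASH2-24-25-RND4251_0"
suffix = " | GPUGRID | Restarting task " + task + " using acemdbeta version 814 (cuda55) in slot 0"
sample = [
    "9/14/2013 12:44:56 PM" + suffix,
    "9/14/2013 12:48:12 PM" + suffix,
    "9/14/2013 12:51:13 PM" + suffix,
]

gaps = restart_gaps(parse_restart_times(sample, task))
total = sum(gaps, timedelta())
print([int(g.total_seconds()) for g in gaps], "total:", total)
```

To get true idle time you would pair each "stopped" entry with the following restart instead, but the exact wording of those entries is not shown in this thread.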

|

I agree that it's possible that the loading and clearing of the app could use up a substantial amount of time. This again suggests that recoverable errors are now triggering the app suspension and recovery mechanism. Maybe the app just needs to be refined so that it doesn't get triggered so often. | |

| ID: 33061 | Rating: 0 | rate:

| |

Operator, It would be nice to have a 64bit Linux image with BOINC, NVidia and ATI drivers installed if that's even possible. No need for anything else. All my boxes are AMD with both NVidia and AMD GPUs. Haven't had a lot of success getting Linux running so that BOINC will work for both GPU types. | |

| ID: 33062 | Rating: 0 | rate:

| |

Yours is doing something completely different from mine. Why, I don't know. But since mine suspend and start another task, very little is lost. In fact, my times are pretty much unchanged. Your issue is odd and unique. | |

| ID: 33065 | Rating: 0 | rate:

| |

Unsurprising. It's difficult to do, and fragile when it's done. The trick is to do the installation in this order:

* Operating System's X, mesa packages
* Nvidia driver
* Force a re-install of the X, mesa packages
* Catalyst
* Configure X server for the AMD card
* Start X

MJH | |

| ID: 33066 | Rating: 0 | rate:

| |

To be clear I'm referring to the difference between the WU runtime showing in the results (20+k seconds) and the actual 'real' time the computer took to complete the WU from start to finish. As an example, if you start a WU and you only have that one running, and it repeatedly starts and stops until its finished, there will be a difference in the 'GPU runtime' versus the actual clock time the WU took to complete. Unless I'm way off base the GPU time is logged only when the WU is being actively worked. If it's "Waiting to run" I don't think that time counts. So that's why I said that there was 2 hours 47 minutes and 31 seconds of nothing happening that was essentially lost. Now, if I completely have this wrong about GPU time vs. 'real time' please jump in here and straighten me out! Operator ____________ | |

| ID: 33069 | Rating: 0 | rate:

| |

Now, if I completely have this wrong about GPU time vs. 'real time' please jump in here and straighten me out! You're certainly not wrong here.. but looking at your tasks I actually see 2 different issues. With 8.03 you needed ~20ks for the long runs. Now you get some tasks which take ~23ks and have many access violations and subsequent restarts. Not good, for sure. These use as much CPU as GPU time. Then there are the tasks taking 40 - 50ks with lots of "# BOINC suspending at user request (thread suspend)" and the occasional access violation thrown in for fun. Here the GPU time is twice as high as CPU time. These ones really hurt, I think. Maybe a stupid question, but just to make sure: do you have BOINC set to use 100% CPU time? And "only run if CPU load is lower than 0%"? Do you run TThrottle? It's curious.. which user is requesting this suspension? So even if error checking was introduced with version 8.11, and there may have been hidden errors created when running the 8.03 app (I'm not sure how that follows logically though), the near doubling of the work unit completion times immediately upon initial usage of the 8.14 app is enough of a smoking gun that there is something amiss. No doubt about something being wrong. The error I was speculating about is this: I don't know how exactly Matt's error detection works, but he certainly has to look for unusual / unwanted behaviour of the simulation. Now it could be that something fulfils his criteria, something which has been happening all along and which is not an actual error in the sense that the simulation can simply continue despite this whatever happening. It's just me speculating about a possibility, though, so don't spend too much time wondering about it. We can't do anything to research this. Another wild guess: if some functionality added between 8.03 and 8.14 triggers the error.. why not deactivate half of them (as far as possible) and try a bisection search with a new beta?
This could work to identify at least the offending functionality in a few days. If it's as simple as the temperature reporting, it could easily be removed for GK110 until nVidia fixes it. MrS ____________ Scanning for our furry friends since Jan 2002 | |

| ID: 33073 | Rating: 0 | rate:

| |
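The bisection idea MrS floats can be sketched in a few lines of Python. This is only an illustration under strong assumptions: exactly one added feature is responsible, the failure reproduces reliably, and features can be switched on and off independently. The feature names and the `build_fails` callback (standing in for "ship a beta with only these features enabled and see whether access violations appear") are hypothetical.

```python
def find_offending_feature(features, build_fails):
    """Bisect a list of features to find the single one that triggers a failure.

    build_fails(enabled) must return True if a build with only `enabled`
    switched on shows the problem. Assumes exactly one culprit.
    """
    candidates = list(features)
    if not candidates:
        raise ValueError("need at least one feature to test")
    while len(candidates) > 1:
        half = candidates[: len(candidates) // 2]
        if build_fails(half):
            candidates = half                      # culprit is in the tested half
        else:
            candidates = candidates[len(half):]    # culprit is in the other half
    return candidates[0]


# Hypothetical feature list; none of these names come from the app itself.
added_since_803 = [
    "temperature_reporting",
    "crash_recovery",
    "extra_diagnostics",
    "new_checkpointing",
]

trials = []

def fake_beta(enabled):
    """Stand-in for a real beta test: pretend temperature reporting is the culprit."""
    trials.append(list(enabled))
    return "temperature_reporting" in enabled

culprit = find_offending_feature(added_since_803, fake_beta)
print(culprit, "found after", len(trials), "test builds")
```

With N candidate features this needs roughly log2(N) beta rounds, which is where the "few days" estimate would come from if each round takes about a day.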

|

Correct, if you only have 1 WU, and none downloaded. I'm saying, if you have one you're working on, and one that's next in line, the time lost switching between tasks won't be nearly that large. Will it still affect real time computation? Yes. But maybe by a couple minutes. | |

| ID: 33078 | Rating: 0 | rate:

| |

Operator, Matt; Yes it would! Operator ____________ | |

| ID: 33092 | Rating: 0 | rate:

| |

Correct, if you only have 1 WU, and none downloaded. I'm saying, if you have one you're working on, and one that's next in line, the time lost switching between tasks won't be nearly that large. Will it still affect real time computation? Yes. But maybe by a couple minutes. I agree. In part. Most of the time when one WU goes into the "Waiting to run" state the next one in the queue resumes computation. But not always! There have actually been times when all WUs were showing "Waiting to run" and absolutely nothing was happening. Doesn't happen often I'll admit. So I do have the system set to have an additional 0.2 days worth of work in the queue and that does provide another WU for the system to start crunching when one is stopped for some reason. But I was referring to a specific set of circumstances where I had only one Titan installed and only one WU downloaded (nothing waiting to start). That's the worst case scenario. When I did the calculations of the 'gaps' between the stops and restarts (previous post about the 2:47:31) I was struck by the fact that most of the time spans in which the app was not actively working (stopped and waiting to restart) were 2 minutes and 20 seconds. Not every gap but most of them were precisely that long. Curious. Operator ____________ | |

| ID: 33093 | Rating: 0 | rate:

| |

|

My troublesome GTX660 has now done a Noelia LR and a Santi LR, both without any interruption! So no "Terminating to avoid lock-up" and no "BOINC suspending at user request (exit)" (whatever that may be). | |

| ID: 33290 | Rating: 0 | rate:

| |

|

I don't think a single successful completion with a Noelia long proves much. My GTX 660s successfully completed three NOELIA_INS1P until erroring out on the fourth. But a mere error is not a big deal; it was the slow run on a NATHAN_KIDKIXc22 that caused me the real problem. | |

| ID: 33291 | Rating: 0 | rate:

| |

|

Could be lower temperatures helping you, TJ (in this case it would be hardware-related). | |

| ID: 33314 | Rating: 0 | rate:

| |

Could be lower temperatures helping you, TJ (in this case it would be hardware-related). Or throttling the GPU clock a little :) However it was a bit of joy too early, had a beta (Santi SR) again with The simulation has become unstable. Terminating to avoid lock-up and a downclocking again overnight. Another beta ran without any interruption. So a bit random. I know now for sure that I don't like 660's. ____________ Greetings from TJ | |

| ID: 33316 | Rating: 0 | rate:

| |
