Message boards : Graphics cards (GPUs) : nVidia GTX GeForce 770 & 780

| Author | Message |

|---|---|

|

GeForce 770 & 780, the same appearance as the TITAN. | |

| ID: 29752 | Rating: 0 | rate:

| |

|

More information here: | |

| ID: 29766 | Rating: 0 | rate:

| |

|

Going by that, and similar speculation, NVidia will drop a trimmed down version of the Titan into the GeForce 700 series (presumably still CC3.5) and call it a GTX780, re-brand their GTX680 as a GTX770 (probably still CC3.0), and their GTX670 as a GTX760. | |

| ID: 29773 | Rating: 0 | rate:

| |

|

GeForce 780, After the card, the box, | |

| ID: 30044 | Rating: 0 | rate:

| |

|

Just bought another 650 Ti at the egg yesterday for $85. How much will these cost and how much faster will they be? Oh, forgot, they probably won't even run GPUGrid until...??? | |

| ID: 30051 | Rating: 0 | rate:

| |

|

NVIDIA Publicly Announces the GeForce GTX 780 At GeForce E-Sports | |

| ID: 30095 | Rating: 0 | rate:

| |

|

As soon as the Microcenter by my house gets one in stock, I'll be purchasing the 780. Been itching to do a new build for a while. Plan on using a Haswell processor, but may end up trying out IB-E. Depends on how much money I feel like spending. | |

| ID: 30435 | Rating: 0 | rate:

| |

|

The Gainward GTX 770 Phantom 2GB has a base clock of 1150MHz and a boost of 1202MHz. | |

| ID: 30564 | Rating: 0 | rate:

| |

|

Competitive price and open to manufacturer designs - excellent! | |

| ID: 30566 | Rating: 0 | rate:

| |

|

I can't believe EVGA has 10 GTX770's and 7 GTX780 cards not including their hydro models. I agree that the GTX770 is going to be a great series for GPUGRID, it's going to be interesting to see how well they do. The 770 will work right out of the box, unlike the Titan or 780, I think it's using an updated GK104 chip maybe? | |

| ID: 30569 | Rating: 0 | rate:

| |

> I think it's using an updated GK104 chip maybe?

No, it's just using the same GK104. Maybe a newer revision, but this would apply to others as well. MrS ____________ Scanning for our furry friends since Jan 2002 | |

| ID: 30575 | Rating: 0 | rate:

| |

> I can't believe EVGA has 10 GTX770's and 7 GTX780 cards not including their hydro models. I agree that the GTX770 is going to be a great series for GPUGRID, it's going to be interesting to see how well they do. The 770 will work right out of the box, unlike the Titan or 780, I think it's using an updated GK104 chip maybe?

Seriously. Any idea why the 770 will work and not the 780/Titan? I wasn't impressed with the price/performance ratio of the Titan or even the 780 but the 770 looks like a winner. | |

| ID: 30605 | Rating: 0 | rate:

| |

|

I was just assuming that it would work because it uses the GK104 chip, the same as the 600s, whereas the Titan and 780 are GK110. I wonder if anyone has bought a GTX770 and tried it; is there any way to find out? | |

| ID: 30612 | Rating: 0 | rate:

| |

|

Going only by the first extremely short Betas to run successfully on a Titan, it doesn't look any faster than a GTX680. It might take some time to get the most from it: architecturally it's a very different beast from a GK104, whereas a GTX770 is basically a souped-up GTX680. I can't think of any reason why a GTX770 wouldn't work straight out of the box, but we cannot say for sure until someone tests one. The Titans on the other hand - I'm not keen on them, so far. | |

| ID: 30621 | Rating: 0 | rate:

| |

|

Just bought a 780 (non-reference) today. Will be buying a Haswell tomorrow, and building everything between Monday and Tuesday. Ugh, this stuff gets expensive lol. Fun to build though. | |

| ID: 30622 | Rating: 0 | rate:

| |

> Going only by the first extremely short Betas to run successfully on a Titan, it doesn't look any faster than a GTX680. It might take some time to get the most from it: architecturally it's a very different beast from a GK104, whereas a GTX770 is basically a souped-up GTX680. I can't think of any reason why a GTX770 wouldn't work straight out of the box, but we cannot say for sure until someone tests one. The Titans on the other hand - I'm not keen on them, so far.

So it might turn out that a GTX 770 is faster than a GTX 780 or a Titan at GPUGrid, at least for a while. Maybe longer. Interesting though. | |

| ID: 30625 | Rating: 0 | rate:

| |

|

The GPUGrid devs might need to change the app to better accommodate the Titans. | |

| ID: 30626 | Rating: 0 | rate:

| |

|

If and when the GPUG devs start supporting the new top-end cards, I hope they do it in a backwards-compatible manner. I'd hate to see the performance of today's mid-range cards drop (5xx and 6xx, maybe even some 4xx), just for the sake of a few contributors that may have one of the new beasts. We're not all ready (yet) to dish out e.g. 500 euro for a 680 or 400 for a 770. Heck, I'm not even ready to give 200 euro to buy a 660! | |

| ID: 30630 | Rating: 0 | rate:

| |

|

They wouldn't drop support for older series JUST for those cards, so no worries there. They do offer a nice performance bump however. First step is to get them crunching, then they just need to optimize. | |

| ID: 30634 | Rating: 0 | rate:

| |

|

Guys, relax! Titan is not that different. It's got the same basic SMXs, just with a few more registers per thread and some new functionality, which will surely not be used by GPU-Grid because that would break backwards compatibility. | |

| ID: 30656 | Rating: 0 | rate:

| |

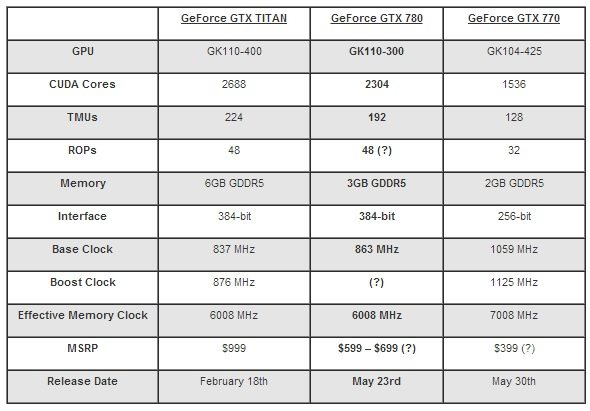

> Anyone know the theoretical performance increase for the GPUgrid app?

Not for the app, but for SP the GTX780 is theoretically 3977/3213≈23.8% faster than the GTX770. However, it's ~60% more expensive! How it actually pans out for the apps here is still unknown, and there are some obvious differences besides the GK104 vs GK110 architecture - the 780 has a 50% wider bus, and the 770 has 16.6% faster GDDR (hence the 230W TDP compared to the GTX680's 195W). In theory the Titan is 40% faster than the GTX770, but costs 2.5 times as much. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 30675 | Rating: 0 | rate:

| |
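The price/performance comparison in the post above can be worked through in a few lines. This is only a rough sketch: it uses nothing but the figures quoted in that post (the two single-precision numbers, "~60% more expensive", "40% faster" and "2.5 times as much"), normalises everything to the GTX770, and the names `cards`, `value_for_money` and `summarise` are made up for illustration. It says nothing about how the GPUGrid app actually performs.

```python
# Relative single-precision speed and price, normalised to the GTX770.
# Every number comes from the post above; none of it is measured here.
GTX770_SP = 3213.0
GTX780_SP = 3977.0

cards = {
    "GTX770": {"speed": 1.0, "price": 1.0},
    "GTX780": {"speed": GTX780_SP / GTX770_SP, "price": 1.6},  # ~60% more expensive
    "Titan": {"speed": 1.4, "price": 2.5},                     # 40% faster, 2.5x the price
}


def value_for_money(card):
    """Theoretical speed per unit of price, relative to the GTX770 (= 1.0)."""
    return card["speed"] / card["price"]


def summarise(cards):
    """Return (name, percent faster than GTX770, value for money) per card."""
    rows = []
    for name, card in cards.items():
        faster = round((card["speed"] - 1.0) * 100, 1)
        rows.append((name, faster, round(value_for_money(card), 2)))
    return rows


if __name__ == "__main__":
    for name, faster, value in summarise(cards):
        print(f"{name}: {faster}% faster, value for money {value}")
```

On these theoretical numbers the GTX780 delivers roughly three quarters, and the Titan a little over half, of the GTX770's speed per unit of price.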

|

Price, while important overall, wasn't really on my list when making the decision. I just really wanted a gk110 :). They also clock REALLY well. My EVGA SC, acx? The twin fans one. Came at a default boost rate of 1100. I was quite impressed. | |

| ID: 30678 | Rating: 0 | rate:

| |

|

Someone wanted to know if anybody had a GTX 770 yet. | |

| ID: 30958 | Rating: 0 | rate:

| |

I bought a 770 SuperClocked w/ACX cooler a few weeks ago. Nice card! How long, on average, does a Nathan take on it? ____________ Greetings from TJ | |

| ID: 30961 | Rating: 0 | rate:

| |

|

Hi TJ, | |

| ID: 30966 | Rating: 0 | rate:

| |

|

Hi again TJ, | |

| ID: 30967 | Rating: 0 | rate:

| |

|

Hi Midimaniac, | |

| ID: 30971 | Rating: 0 | rate:

| |

|

Nice to hear your card is working well! About the clock speed: nVidia states the typical expected turbo clock speed, not the maximum one. What is reached in reality depends mostly on the load itself and GPU temperature.

> The EVGA Precision software allows me to slow the card down if I have to

If you do so, lower the power target to make the card boost less. This way clock speed and voltage are reduced, so power efficiency is improved. Actually 1.187 V is a bit much for 1.19 GHz. For comparison, my GTX660Ti (same chip, just a bit older and with one shader cluster deactivated) reaches 1.23 GHz at 1.175 V. That's close, but it shows you'll probably have some headroom left in that card (which could be used either for a higher offset clock or for a slightly lower voltage at stock clock). MrS | |

| ID: 30972 | Rating: 0 | rate:

| |

|

Interesting crash report.

> 8 CPU cores at 100%... GPU Load is mostly 80%, and GPU Power runs 58-62% TDP

Your system appears to be better optimized for CPU usage than GPU usage. I'm using 3 GPUs (not running CPU tasks). The GTX660Ti (PCIE2 @X2) has 88% GPU usage and 85% power (running a NATHAN_KIDc22_SODcharge or a NATHAN_KIDc22_noPhos) and the other two are at ~93% GPU usage and 92 to 94% power (NATHAN_KIDc22_full and NATHAN_KIDc22_noPhos). | |

| ID: 30973 | Rating: 0 | rate:

| |

|

I don't think he understood completely, the longest NATHAN's give 167,550 points and it looks as though he's only done 1, it took him 10.55 hours. That's a little slower than my 770 by about 1/2 hour. | |

| ID: 30975 | Rating: 0 | rate:

| |

> Interesting crash report.

Probably not crashing; the taskbar icon is just disappearing. I've also seen the icon disappear while Afterburner is still operating. According to Unwinder the icon disappears because of a notification bug in Windows. He's planning workarounds for the next Afterburner version. | |

| ID: 30978 | Rating: 0 | rate:

| |

|

Definitely an app crash in my case (though I think I've also experienced what you describe where the icon just vanishes):

Faulting module name: nvapi.dll, version: 9.18.13.2014, time stamp: 0x518965f4 Exception code: 0xc0000094 Fault offset: 0x001ab9a4 Faulting process id: 0x11c8 Faulting application start time: 0x01ce6dd888a0b914 Faulting application path: C:\Program Files (x86)\MSI Afterburner\MSIAfterburner.exe Faulting module path: C:\Windows\system32\nvapi.dll Report Id: b6db0dcf-d9e9-11e2-a7c5-d43d7e2bd120

| |

| ID: 30980 | Rating: 0 | rate:

| |

|

So many replies all at once!

> Regarding your crash: 2 utilities constantly polling the GPU's sensors can cause problems. I actually leave none of them on constantly. I minimize GPU-Z and set it not to continue refreshing when in the background. If I want a current reading I pop it up again. For continuous monitoring I'd use only one utility and set the refresh rate not too high, maybe every 10 s.

Two? How about four? In addition to the 2 EVGA utilities I also had TThrottle and RealTemp running! Your advice is well taken: I will close everything but TThrottle. Uh-oh, now that I think about it, EVGA Precision needs to be running for the custom software fan curve to be enabled. When the software is not running, the hardware on the card takes over and the card runs hotter. This morning when I woke, the GTX770 was running at 61 C, which is only 4 degrees hotter than my software fan curve would have allowed, so no big deal. Maybe I can just minimize EVGA Precision? I will cut the polling back in all my software to about 10 s as you suggested.

> ...you'll probably have some headroom left in that card (which could either be used for a higher offset clock, or a tad bit lower voltage at stock clock).

Apparently I can't lower just the voltage in EVGA Precision. All I can do is raise it! That seems kinda dumb! But I can lower the Power Target, which would lower the voltage as you suggested. And I can also adjust the GPU & Mem clock offsets up or down. I have a feeling that polling the hardware from 4 different programs caused the problem last night! It is interesting that while the two EVGA applications report a voltage of 1187 mV when crunching hard, CPUID Hardware Monitor reports only 900 mV. It's interesting because at idle EVGA reports 861 mV and CPUID reports 862 mV, almost exactly the same voltage.

Flashawk- Thanks for the insight into the Nathans. I see what you mean.

The lower GPU load I have is apparently because I run Rosetta@home together with GPUG, maxing out my CPU at 100% on all cores. I just now suspended Rosetta so that only GPUG is running and my GPU stats immediately changed. Apparently having Rosetta running starves the GPU of the CPU time it requires to run GPUG at full capacity. Interesting.

With Rosetta suspended, GPU load goes up: GPU Load 92%, GPU Power 66-67% TDP, temp up 1 degree to 58C.

With Rosetta running, GPU load goes down: GPU Load 84-86%, GPU Power 64.1% TDP, temp back down to 57C.

So apparently running GPUG by itself would yield the quickest completion times for GPUG tasks. Interesting. I suppose I could time a few GPUG tasks to see how fast the card turns them over when it has the CPU to itself, but I probably won't. It's not something that really matters a lot, right? | |

| ID: 30984 | Rating: 0 | rate:

| |

> So apparently running GPUG by itself would yield the quickest times for completion of GPUG tasks. Interesting. I suppose I could time a few GPUG tasks to see how fast the card turns them over when it has the CPU to itself, but I probably won't. It's not something that really matters a lot, right?

Depends what you want - do you want to do more work for GPUGrid or Rosetta? It's entirely up to you, but for reference: your GPU is presently 14% faster than my GTX660Ti. If you did nothing other than stop crunching CPU tasks it would be 23% faster than my GPU, just going by GPU usage. As this isn't necessarily accurate, you would need to run a few work units without the CPU being used to get an accurate measurement; your GPU would probably be more than 23% faster than mine, more so if you tweaked everything else towards GPU crunching. Many people go for a reasonably happy medium of, say, 6 or 7 CPU tasks and 1 GPU task. I have 3 GPUs in the one system (one on heels) so I want to give them every opportunity of success. | |

| ID: 30985 | Rating: 0 | rate:

| |
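One way to arrive at a figure close to the 23% mentioned above is to scale the current 14% advantage by the change in reported GPU load. The sketch below assumes, purely for illustration, that throughput scales linearly with the GPU load shown by the monitoring tools (the post itself warns this "isn't necessarily accurate"), and takes the loads reported earlier in the thread: about 85% (the middle of 84-86%) with Rosetta running and 92% with it suspended. The function name is hypothetical and this may not be the exact calculation the poster used.

```python
def projected_advantage(current_advantage, load_now, load_free):
    """Scale a relative speed by the ratio of GPU loads.

    Assumes throughput is proportional to reported GPU load, which is
    only a rough approximation.
    """
    return current_advantage * (load_free / load_now)


# 1.14 = "14% faster than my GTX660Ti"; loads are in percent.
projected = projected_advantage(1.14, load_now=85.0, load_free=92.0)
percent_faster = round((projected - 1.0) * 100)

if __name__ == "__main__":
    print(f"Projected advantage with the CPU left free: about {percent_faster}%")
```

Timing a few complete work units with and without CPU tasks running would be the more reliable measurement, as the post says.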

|

I have some remarks as well for you Midimaniac. | |

| ID: 30988 | Rating: 0 | rate:

| |

> I have stopped TThrottle, it is a great program, but if you throttle anything, CPU and/or GPU then the GPU load will flip from heavy to low load, this will result in a GPU WU to take longer to finish.

TThrottle is best used as a safeguard against overheating for incidents such as fan failure or extremely hot environmental conditions in which you cannot access the machine. It is not a good alternative to a fan control program such as Afterburner. Think of it as a safeguard in catastrophic conditions. It's also perfect for transmitting CPU & GPU temps to BoincTasks so you can monitor them from one client. | |

| ID: 30989 | Rating: 0 | rate:

| |

> Result is that my CPU is around 71°C

That is really high for water or even air cooling. I have water cooling on my CPUs and GPUs, and my FX-8350s run from 38° to 42°, and they run pretty hot. Has anybody tried disabling Hyperthreading and running all their GPUs together with CPU tasks to see if that lowers the GPU utilization? I don't experience any issues when running all eight cores flat out with Rosetta or CPDN, though I have noticed that what BOINC says and what other applications say differ greatly. When running GPUGRID, my CPU utilization is from 99.42% to 99.56% for each core that feeds a GPU. I would try it myself, but the last Intel CPU I bought and actually used was a socket 370 Pentium III 800EB (I have a PIII 1.1GHz but never used it, Intel wanted it back). | |

| ID: 30990 | Rating: 0 | rate:

| |

|

That is getting kind of toasty. | |

| ID: 30991 | Rating: 0 | rate:

| |

> Has anybody tried disabling Hyperthreading and running all their GPUs together with CPU tasks to see if that lowers the GPU utilization? I don't experience any issues when running all eight cores flat out with Rosetta or CPDN, though I have noticed that what BOINC says and what other applications say differ greatly. When running GPUGRID, my CPU utilization is from 99.42% to 99.56% for each core that feeds a GPU. I would try it myself, but the last Intel CPU I bought and actually used was a socket 370 Pentium III 800EB (I have a PIII 1.1GHz but never used it, Intel wanted it back).

Yes, I have: I bought a refurbished rig with 2 Xeons without HT (not possible on them), and when running a GPU task and no CPU tasks, the GPU gets a steady load. When adding one core at a time for CPU crunching, the GPU load drops: to below 35% with 7 cores and to zero with 8 cores crunching CPU tasks. Then a core only very occasionally gives some time to the GPU WU. ____________ Greetings from TJ | |

| ID: 30992 | Rating: 0 | rate:

| |

|

TJ & skgiven,

> Secondly in EVGA Precision you have the option "GPU clock offset" this can be plus or minus. You can also click on "voltage" on the left side of the program under "test" and "monitoring". A new window will pop-up and you can lower the voltage. EVGA has neat software, that is one reason I like that brand.

I don't get it. Are you sure about lowering the voltage?? When I click on Voltage a new window pops up but lowering the voltage is not possible. All you can do is click overvoltage and then drag the arrow up to raise the voltage. My software revision is the new one: 4.2.0. I see from my stats that I already have 2.1 million credits at GPUG and I have only had the one crash last night that I was not directly responsible for, so I guess I'm currently doing pretty well. | |

| ID: 30993 | Rating: 0 | rate:

| |

> Yes, I have: I bought a refurbished rig with 2 Xeons without HT (not possible on them), and when running a GPU task and no CPU tasks, the GPU gets a steady load. When adding one core at a time for CPU crunching, the GPU load drops: to below 35% with 7 cores and to zero with 8 cores crunching CPU tasks. Then a core only very occasionally gives some time to the GPU WU.

Okay, I understand now; I didn't realize that the new rig you bought didn't have Hyperthreading. I think it's strange that only the Intel processors experience this throttling and the AMD processors don't (at least in my case). I have removed 2 cores from BOINC so they can only be used by the video cards and nothing else in BOINC, and I'm assuming everyone else has done this too. | |

| ID: 30994 | Rating: 0 | rate:

| |

|

Intel i3/5/7 CPUs can reach VERY high temps (~90C) quite easily with their stock Intel coolers! I don't understand why 70C under continuous crunching load is considered too hot or toasty! Isn't a difference of 20C from the consciously Intel-selected upper operating temperature, a safe distance? | |

| ID: 30997 | Rating: 0 | rate:

| |

> Intel i3/5/7 CPUs can reach VERY high temps (~90C) quite easily with their stock Intel coolers! I don't understand why 70C under continuous crunching load is considered too hot or toasty! Isn't a difference of 20C from the consciously Intel-selected upper operating temperature, a safe distance?

You are quite right about the temperatures, but with liquid cooling 71°C is indeed toasty. Secondly, running at such high temperatures will significantly reduce the lifespan of a CPU and, moreover, of circuit boards. So good, efficient cooling is a must for crunchers, especially 24/7 ones. ____________ Greetings from TJ | |

| ID: 30999 | Rating: 0 | rate:

| |

> TJ & skgiven,

I have set Processor usage to 75% under Tools, Computing preferences. This results in one core doing nothing. In my case it results in slightly higher GPU load. This setting applies to all tasks that run on the CPU, not just Rosie. As far as I know you cannot set it per project; you can only give a project more time by setting its share higher, but this will not affect CPU usage. However, you have a cool case, and by cool I mean cool the way the Americans often use the word for something great :)

> > Secondly in EVGA Precision you have the option "GPU clock offset" this can be plus or minus. You can also click on "voltage" on the left side of the program under "test" and "monitoring". A new window will pop-up and you can lower the voltage. EVGA has neat software, that is one reason I like that brand.
>
> I don't get it. Are you sure about lowering the voltage?? When I click on Voltage a new window pops up but lowering the voltage is not possible. All you can do is click overvoltage and then drag the arrow up to raise the voltage. My software revision is the new one: 4.2.0.

Yes, same software here on two rigs. When I click on Voltage I get the same pop-up window and can drag the arrow up and down with the mouse between 1150 and 825 mV. I can set it to e.g. 900 mV, click on apply and then click on the red cross, and it works. But I have other cards, which could have something to do with it. Nothing higher than a GTX660. ____________ Greetings from TJ | |

| ID: 31000 | Rating: 0 | rate:

| |

|

TJ is correct, just because the CPUs can hit and run at 70, doesn't mean they should 24/7/365. It WILL shorten the lifespan. | |

| ID: 31004 | Rating: 0 | rate:

| |

|

TJ- | |

| ID: 31009 | Rating: 0 | rate:

| |

|

The 320.x driver is probably preventing this, going by the 60-odd page manual. | |

| ID: 31010 | Rating: 0 | rate:

| |

|

You need to remember that the GTX770 has a TDP of 230 watts while a GTX680 has a TDP of 195 watts, they upped the default max voltage from 1.175v to 1.187v. | |

| ID: 31011 | Rating: 0 | rate:

| |

|

Regarding the voltage: as you said, under crunching load 1.187 V is displayed. This is actually the voltage for about the highest turbo bin. If you set a voltage of 1.175 V in Precision, this should limit the maximum used. But as you say, your card runs pretty well by now, so I'd rather use the headroom left in the chip for some OC without voltage increases. A good 50 MHz should surely be left. Or use the power target to drive power consumption down and efficiency up, if needed. In this case the card will choose lower clocks & voltages itself. <app_config> Won't make much of a difference, though. MrS | |

| ID: 31014 | Rating: 0 | rate:

| |

> TJ is correct, just because the CPUs can hit and run at 70, doesn't mean they should 24/7/365. It WILL shorten the lifespan.

I ignore this most of the year and run at about 68 to 78 degrees, but we are pushing 25 degrees Celsius in Hammerfest (northernmost city in the world), so I've had to pause my CPU and GPU computing (normally I heat my house almost entirely by computing). This leaves room for possibly upgrading without it affecting my computing contribution. On that note, have you concluded anything profound in this thread? Or must we just wait for the first person to buy them all and pit them against each other? Off-topic: can AMD cards be used in GPUGrid yet? I have not stayed updated on the matter, except for one thing: I have noticed some very cheap AMD cards trounce my 560Ti in various OpenCL applications. | |

| ID: 31017 | Rating: 0 | rate:

| |

|

780 doesn't work yet. 770 is slightly faster than a 680 (but the jury is out on the performance/watt side). | |

| ID: 31020 | Rating: 0 | rate:

| |

|

Hm, thanks. Then I'll have to wait a bit until 780 works well before I decide. | |

| ID: 31022 | Rating: 0 | rate:

| |

|

Hi MrS-

> Note that on Kepler GPUs (pretty much all we're talking about now) GPU-Grid always uses a full CPU thread, despite that unfortunate "0.7xxx" value being shown by BOINC.

But I do not understand this, and why it needs to be fixed:

> This means by default BOINC will launch 8 CPU threads along GPU-Grid (on an 8-core machine) and overcommit the CPU.

I typically run Rosetta along with GPUG. I have noticed that if I suspend Rosetta and let GPUG run all by itself the GPU load goes up from 82% to 89-90%. A substantial amount. So I thought OK, I'll fix this and get GPUG going even faster. To begin with, I don't understand why Rosie is having an effect on GPUG, because Rosie does not touch the GPU at all. I have verified this (if I suspend GPUG and let Rosie continue, the GPU load goes to zero). Apparently, as you were saying, there is a certain load that GPUG requires from the CPU, typically 1 core. So I went to Rosetta's page and adjusted my compute preferences, making sure to update Rosie in BOINC so that BOINC knows what to do, but this has had no effect. Right now I have Rosetta's preferences set to use 1 processor for 25% of the time, but BOINC is still ramping up to 100% CPU usage on all cores when running Rosetta and GPUG together. My BOINC preferences are set to use 100% of the processors for 100% of the time, but I'm sure the way to turn down Rosie is to do it on Rosie's page, right? Does anyone have any suggestions?

Ronny- Run your computers at 70 or 80 degrees and heat your house with the heat. That is amazing. Sounds to me like a study in efficiency. Well done! | |

| ID: 31030 | Rating: 0 | rate:

| |

|

Rosetta doesn't use the GPU, but GPUGrid does use the CPU. | |

| ID: 31032 | Rating: 0 | rate:

| |

> Right now I have Rosetta's preferences set to use 1 processor for 25% of the time, but BOINC is still ramping up to 100% CPU usage on all cores when running Rosetta and GPUG together. My BOINC preferences are set to use 100% of the processors for 100% of the time, but I'm sure the way to turn down Rose is to do it on Rosie's page, right?

You do not have to configure each project's participation on the project preferences page; that only influences the priority of how much work is sent from each project. In my case I have a priority project for CPU and GPU respectively: top priority climateprediction.net at 100%, secondary priority malariacontrol.net at 1%, so my CPU only gets secondary work if either climateprediction.net does not have any or the computer has to fulfil the ratio of 100/1. What matters is "My BOINC preferences" in the BOINC Manager. I set it "to use 99% of the processors for 100% of the time" because I have one GPU in the system, so one of my cores is left free to feed my GPU and all the others are used by my CPU project. In your case you have to free 3 cores or threads to feed your 3 GPUs. This will make the GPUGRID project run faster and your system in general more stable. | |

| ID: 31033 | Rating: 0 | rate:

| |
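The effect of the "use at most N% of the processors" setting described above can be sketched numerically. The snippet assumes that BOINC rounds the resulting thread count down (with a minimum of one); that rounding rule is an assumption, chosen because it matches the report in this thread that 99% on an 8-thread machine left 7 CPU tasks running. The function names are hypothetical, not part of BOINC.

```python
def usable_cpus(total_threads, percent):
    """CPU threads BOINC would schedule, ASSUMING it rounds down (min. 1)."""
    return max(1, (total_threads * percent) // 100)


def max_percent_to_free(total_threads, threads_to_free):
    """Largest whole percentage that still leaves `threads_to_free` threads idle."""
    target = total_threads - threads_to_free
    for percent in range(100, 0, -1):
        if usable_cpus(total_threads, percent) <= target:
            return percent
    return 1  # cannot free that many threads; fall back to the minimum


if __name__ == "__main__":
    print(usable_cpus(8, 99))          # one thread left for a single GPU
    print(max_percent_to_free(8, 3))   # setting that leaves three threads for three GPUs
```

Under that assumption, any value from 88% to 99% leaves exactly one of eight threads free, which is why both 90% and 99% are reported as working for a single GPU.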

|

Glad I asked about that! I reset my local preferences to compute on 99% of the processors and it reduced Rosetta to only 7 tasks running and one GPUG task running. | |

| ID: 31041 | Rating: 0 | rate:

| |

|

Hello: Do the GTX 780 and Titan work on GPUGRID already, or is there any forecast for when they will? Greetings. | |

| ID: 31066 | Rating: 0 | rate:

| |

|

Look over there for updates [there haven't been any, as the developer is away]. | |

| ID: 31076 | Rating: 0 | rate:

| |

> To fix this one can set it to "use at most 99% of CPUs", but then the CPU will not be fully used if GPU-Grid is down. Or one can place a file called "app_config.xml" into the GPU-Grid project folder with the following contents:

I have tried this on my "big system" (a new PSU is on its way so I can fit two AMD GPUs) with Einstein@home, and what I saw was that the GPU load went to 0 (zero) and only occasionally increased to 24%. The WU in the BOINC task list also didn't make progress. So I set CPU use back to 90% again to leave one core free. ____________ Greetings from TJ | |

| ID: 31086 | Rating: 0 | rate:

| |

|

Mhh, something obviously went wrong there, but I don't know your configuration well enough to make any educated guesses. In my post I assumed a standard setup as starting point, that is 100% CPU use and 1 GPU. Maybe that doesn't apply to your system? | |

| ID: 31113 | Rating: 0 | rate:

| |

> Mhh, something obviously went wrong there, but I don't know your configuration well enough to make any educated guesses. In my post I assumed a standard setup as starting point, that is 100% CPU use and 1 GPU. Maybe that doesn't apply to your system?

Yes, if I use 90% CPU (via Computing preferences, etc.) on an 8-core machine (2 x 4, no HT), then one core is free and the GPU runs fine. But as soon as I set it higher than 90%, GPU load drops to zero. But that is on Einstein. I will not try this here, as I don't want to risk losing a WU that has already run for a long time. But thanks for the tip; if it works for other people, then that is great. I am a happy cruncher and have learned a lot here. ____________ Greetings from TJ | |

| ID: 31118 | Rating: 0 | rate:

| |

|

Hello: The first task completed with my new Gainward GTX770. http://www.gpugrid.net/results.php?userid=68764 | |

| ID: 31440 | Rating: 0 | rate:

| |

> Hello: The first task completed with my new Gainward GTX770. http://www.gpugrid.net/results.php?userid=68764

We can't follow your link; it is not working (no access). However, looking at your computers I see that the 770 is doing short runs. Let's see if it does long runs nicely as well. That would be interesting. ____________ Greetings from TJ | |

| ID: 31443 | Rating: 0 | rate:

| |

|

Yeah, especially the new NOELIAs!! | |

| ID: 31459 | Rating: 0 | rate:

| |

|

Hello: Finished the first short tasks with the GTX 770. | |

| ID: 31466 | Rating: 0 | rate:

| |

|

Hello: Finished two simultaneous short tasks on the GTX 770, and the result is an improvement of approximately 5% over executing a single task; not much, but it's something. | |

| ID: 31480 | Rating: 0 | rate:

| |

> Possibly try with three tasks at once.

Don't bother, you won't get any improvement and 5% isn't worth the extra failure rate.

> Unfortunately there are problems with Linux Noelias I hope is fixed soon. Greetings.

The problem is not related to Linux or Windows, it's with the WU's. | |

| ID: 31482 | Rating: 0 | rate:

| |

> Unfortunately there are problems with Linux Noelias I hope is fixed soon. Greetings.

Hello: Thanks for your comments. If the problem is with the WUs, wouldn't it be better to suspend sending out the Noelias...? | |

| ID: 31484 | Rating: 0 | rate:

| |

|

Short queue has NATHANIEL's WU's. | |

| ID: 31488 | Rating: 0 | rate:

| |

|

My GTX770 runs the exact same times as my GTX680s, so I guess the faster memory has no impact here. With a TDP of 230 watts for the 770 compared to the 680's 195 watts, I think the GTX680 is the better deal. With TSMC's 28 nm process maturing very nicely, the new GTX680s are going straight to 1201MHz - 1215MHz right out of the box at 1.175 volts. I just really like those 680s, you can't go wrong with them. | |

| ID: 31490 | Rating: 0 | rate:

| |
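The "better deal" argument above can be put into numbers under one loud assumption: that real power draw while crunching is proportional to TDP. TDP is only a design limit, not a measurement (actual GTX770 power figures are asked for later in the thread), so the result is an upper-bound style estimate rather than a finding. The function name is hypothetical.

```python
def work_per_watt_ratio(runtime_a, tdp_a, runtime_b, tdp_b):
    """How much more work per TDP watt card A does compared with card B.

    Work rate is taken as 1 / runtime; power is ASSUMED proportional to TDP.
    """
    return (1.0 / (runtime_a * tdp_a)) / (1.0 / (runtime_b * tdp_b))


# Equal runtimes as reported above (the unit cancels out); TDPs of 195 W and 230 W.
gtx680_vs_gtx770 = work_per_watt_ratio(1.0, 195.0, 1.0, 230.0)

if __name__ == "__main__":
    print(f"GTX680 work per TDP watt relative to GTX770: {gtx680_vs_gtx770:.2f}x")
```

If the two cards really finish tasks in the same time, the GTX680 would do about 18% more work per TDP watt; measured wall-socket power could narrow or widen that gap.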

|

It might not be so obvious if the difference was only 5% and there was some task runtime variation. | |

| ID: 31498 | Rating: 0 | rate:

| |

> This TDP difference makes me think that the best cards around now might be the GTX670's.

For now, as you say, and that is a good point. But how will these cards fare in the future? The WUs evolve fast, so a top card now can become "outdated" very quickly. So perhaps investing in the best cards available (Titan?) is a safer option than buying a "cheap" one now that is slow again in a few months. A year ago my GTX550Ti did not do badly here; now it's taking 18-30 hours. On the other hand, science projects need computing power from the public; that's the idea of BOINC. They should then keep in mind that a lot of people have a tight budget for expensive computer hardware. So lower-end and mid-range cards must be usable as well; they are in the majority after all. I know that is what the SR queue is for, but these tasks are gradually taking more time and more resources as well. ____________ Greetings from TJ | |

| ID: 31500 | Rating: 0 | rate:

| |

> So perhaps investing in the best cards available (Titan?) is a safer option than buying a "cheap" one now that is slow again in a few months.

I don't ever think it's a good decision to buy new and/or top-end stuff at premium prices. Something like 2/3rds of the way to the top seems like the most cost-efficient choice to me. Being realistic, I don't think that hardware for crunching should even reach that 2/3 point. I mean, come on, we're giving real money, buying real hardware, consuming real electricity, working 24/7, putting in big chunks of our real time for a close-to-zero probability that something really useful will come out of all of this... Nice and romantic and all, but why invest big money?

> On the other hand, science projects need computing power from the public; that's the idea of BOINC. They should then keep in mind that a lot of people have a tight budget for expensive computer hardware. So lower-end and mid-range cards must be usable as well; they are in the majority after all.

I agree with you 100%! The majority is what every science project out there should optimize for. Doing that will give them the biggest gain. The short-run queue is TOO LAME in the credit it gives at present. Boosting credit gain will bring more people with low-to-mid-range cards to GPUGRID. On the other hand, SR WUs are almost always much fewer than LR, so they may just not care. | |

| ID: 31502 | Rating: 0 | rate:

| |

|

There isn't much difference between a GTX670 and a GTX770 architecturally so it's unlikely that the 670 will be outdated before the 770 or 760. | |

| ID: 31503 | Rating: 0 | rate:

| |

|

Indeed we invest heavily in hardware and electricity, but we are not forced to do so. We choose to run BOINC of our own free will. And everyone can contribute according to her or his own means. | |

| ID: 31504 | Rating: 0 | rate:

| |

|

I know what you mean TJ and I also want to help the fight against cancer and other diseases in any way I can. That's why I have my machine running 24/7 and keep calming down my wife all the time, who gets upset regularly by the (pretty low) noise and (substantial) heat it generates, lol! | |

| ID: 31536 | Rating: 0 | rate:

| |

|

If anyone has actual power usage info for the GTX770 let us know. | |

| ID: 31662 | Rating: 0 | rate:

| |

|

just a note. | |

| ID: 31680 | Rating: 0 | rate:

| |

TJ wrote at 14 Jul 2013 | 13:35:36 UTC: That is also why I am contributing to GPUGRID. I've lost some family members to cancer, including my own father at a very young age. My mother also lost two very good friends of hers, who had breast cancer. It's less close than your experience with the disease, but close enough for me to set folding as a goal.

Vagelis Giannadakis wrote at 15 Jul 2013 | 9:18:25 UTC: LOL! :P But I can understand it. Sometimes it really is a sacrifice, because your room isn't silent at all (noise while watching movies on the television), your room overheats (very nice when the ambient temperature is already 28 degrees Celsius :P) and sometimes you can't do heavy (graphics) work or gaming on the computer while a large GPU WU is running. Not to mention the electricity bills... you get the point. :P But we all know why we are here: for the credit or for a higher goal. ;-)

Maybe it's also because you do not know exactly what you are doing. In "Folding@Home", and I thought also in "FightAIDS@Home", you can see a simulation of what you're doing in a special application. It's all based on trust that GPUGRID makes good use of your data from folded WUs. For all published papers you have to pay money, something like 35 euros. I think that is a lot of money for a few pages of text of which I can't understand more than 50%, because it is written in scientific language. Why is this not freely available to people who have worked there as volunteers? Is contributing (read: rendering) a lot of WUs to the project not enough for GPUGRID to let you read, for free, the results you've been rendering for? Very strange, in my honest opinion...

I also agree with this. I don't have the money to buy a high-end card every year for raw performance, plus good (water/air) cooling per card. It's frustrating to see the WUs becoming larger and larger. For example, a GTX560(Ti), or any other GPU with less than 1.5GB of VRAM, isn't enough anymore. The instability of the WUs is frustrating as hell when you offer your free computing time and kWh of electricity and get only errors. I asked before why GPUGRID has so many errors and such strange loads on the GPUs. The answer was that GPUGRID changes the WUs (I guess they meant the instructions in the WU) for optimization. But wait, optimization? Where do I see that? All I see is larger WUs and a lot of instability, crashes, errors and strange loads that differ per project/WU. A little while ago I quit GPUGRID for those reasons... it is very frustrating when you have those problems again and again.

At PrimeGrid, for example, I always get WUs that give me 99% load. With GFN and PPS Sieve there is no difference in load per subproject (like the Nathan or NOELIA tasks over here), nor between WUs within one subproject. At the moment I'm running 2 NOELIAs (on 2 GPUs, 1 WU per card). Last week they gave me loads up to 85%; now the maximum is suddenly just 65% and the expected runtime has increased to 16.5 hours! Last week that was 14.5 hours on the same cards with LOWER core speeds. Very dramatic... in the Netherlands we would say: "Je kan er geen touw aan vast binden", which means something like: it's all unpredictable and you can't rely on anything staying the same. :P

Better support for older cards could lead to a happier and maybe a larger community. Maybe an option (read: a checkbox in the preferences) for selecting WUs for <1.5GB cards would be a good idea, along with a longer report deadline for large WUs on older cards and a different credit system.
Recent high-end cards can do the normal long runs with CUDA4.2; older cards could, with that option, fold smaller long runs, or the same long runs with a longer report deadline. Then, as a folder at home, you have a choice of which one you want to do and which one fits your system best. | |

| ID: 31682 | Rating: 0 | rate:

| |

"It's all based on trust, that GPUGRID makes good use of your data from folded WU's. For all published papers you've to pay money, something like 35 euros. I think that is a lot of money for a few pages of text, where I can't understand more than 50% of the text because it is written in scientific language."

That is not completely true. Most papers can be read in full, as PDF or HTML. It depends on the publisher. For some you do indeed need an account or have to pay. When GPUGRID submits a paper to a publisher, the publisher becomes the "owner" of it and decides whether it is free to read or not. You cannot blame GPUGRID for that, as they don't hold the rights. You can click on any publication name in your own account, or in someone else's, and you will see that most can be read in full. And I think you could read about 95% of it, but I guess you wouldn't understand half of it. I don't mean this in a bad way. These papers are about chemistry, biochemistry, molecular modelling and medicine, and you need to be familiar with the methods used in these disciplines to understand them fully. So think twice before you pay 35 euros for just one article :) ____________ Greetings from TJ | |

| ID: 31688 | Rating: 0 | rate:

| |

|

Most, if not all, of the articles are available as PDFs from our webpage. | |

| ID: 31689 | Rating: 0 | rate:

| |

just a note. GTX770's work - several crunchers are already using them: Firehawk, Carlesa25, TJ. My concern is the GTX770's 230W TDP, actual power usage and performance/Watt here. It's likely that the additional power consumption is mostly down to the faster GDDR5 (7008MHz compared to 6008MHz for the GTX680, TDP 195W). While this facilitated a bandwidth increase from 192GB/s to 224GB/s, I don't think the GTX680 was particularly bandwidth constrained. So does 224GB/s actually result in a speed bump here, or does it come without any performance gain? If performance is the same as a GTX680 (or within a few percent), people on Windows might be able to drop the GDDR5 clock slightly to make the card more economical to run. For Linux crunchers the GTX680 might be the better card, as changing clocks on Linux isn't easy. Of course I'm speculating, and we won't know any of this until someone posts some power and runtime info and drops the GDDR5 clocks to see if it makes any difference. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 31692 | Rating: 0 | rate:

| |
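The 192GB/s and 224GB/s figures in the post above follow directly from the memory clocks. A minimal sketch of the arithmetic, assuming a 256-bit memory bus on both cards (the bus width is not stated in the post, and the function name is only illustrative):

```python
def gddr5_bandwidth_gb_s(effective_clock_mhz: float, bus_width_bits: int = 256) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 1e9 bytes).

    effective_clock_mhz is the quoted effective GDDR5 data rate
    (e.g. 6008 or 7008); bus_width_bits is an assumption, see above.
    """
    bytes_per_transfer = bus_width_bits / 8
    return effective_clock_mhz * 1e6 * bytes_per_transfer / 1e9


gtx680_bw = gddr5_bandwidth_gb_s(6008)
gtx770_bw = gddr5_bandwidth_gb_s(7008)
increase_pct = (gtx770_bw / gtx680_bw - 1) * 100

print(f"GTX680: {gtx680_bw:.1f} GB/s")
print(f"GTX770: {gtx770_bw:.1f} GB/s")
print(f"Increase: {increase_pct:.1f}%")
```

Under that assumption the faster memory gives roughly one sixth more peak bandwidth. Whether GPUGRID tasks benefit from it is exactly the open question raised in the post.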

|

The GTX770 won't draw 40 W more. The increased power limit is there to let the chip turbo up more often and higher. But since at GPU-Grid cards should be able to reach the top boost bin anyway (unless it's really hot), there won't be much difference: on the GTX770 the voltage for the highest bin is one step higher than on the GTX680, and the memory clock is higher, which will contribute a few W at most. | |

| ID: 31694 | Rating: 0 | rate:

| |

"I'm only saying that the researchers should optimize their WUs for the hardware the majority of their crunching supporters have, that's all. That would give them the biggest gain and their supporters crunching satisfaction! Mega-crunchers would continue getting their mega-credit with the sheer amount of crunching work produced. That's pretty obvious methinks."

It is an obvious solution. However, it is hard to do; otherwise it would have been done a long time ago. | |

| ID: 31695 | Rating: 0 | rate:

| |

"And I don't think the '1.5 GB is not enough' will be the norm now."

1.5GB is enough to crunch the NOELIA tasks we're talking about. My old GTX480@701MHz can handle them in 45000~48800 sec (12h30m~13h34m). | |

| ID: 31696 | Rating: 0 | rate:

| |

|

I have removed my 770 from the system: it ran fine but slowly, taking 58540 seconds for a Nathan, while other 770s were around 32000 seconds for these WUs. It must be my mobo, as the 660 had problems in the same system. But you know all that. | |

| ID: 31700 | Rating: 0 | rate:

| |

"If anyone has actual power usage info for the GTX770 let us know."

With my Asus GTX770, I see a 168 watt difference between idle and full load, measured through my UPS. The WU is a long Nathan_Kid. | |

| ID: 31744 | Rating: 0 | rate:

| |

|

A system with a GTX770 will use around 20W more when idle than without the GPU. | |

| ID: 31748 | Rating: 0 | rate:

| |

"A system with a GTX770 will use around 20W more when idle than without the GPU."

Yes, I have some information. I have built my AMD rig and it has run overnight.

Asus Sabertooth 990FX R2.0 (great board)
AMD FX8350 Black Edition
Asus DRW 24B5ST
Asus GTX770-DC2-OC
SanDisk SSD 128GB
Seagate Barracuda 1TB
Kingston VRAM 1066MHz 1.5V
4 case fans
1 CPU cooler, stock from AMD (lot of noise, good cooling)

Power when idle (PC only): 190W
Power when running Nathan_kid LR: 320W
Power when running Santi SR and 4 fightmalaria on CPU: 384W

The 770 runs smoothly (but only 2 SR and 1 LR so far) with driver 320.49 and BOINC 7.0.64:
SR 87000sec
LR 29700sec
[the SR are almost 4 times as fast as on my GTX550Ti in a quad core]

We still have the heatwave and heavy thunder at the moment, so when the last SR has finished I will power it down. ____________ Greetings from TJ | |

| ID: 31757 | Rating: 0 | rate:

| |
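The wall-power readings and the runtime reported above can be turned into energy per work unit. A rough sketch using only the numbers from that post (190W idle, 320W while running a Nathan_kid long run, 29700 seconds per long run). It assumes the power draw is constant for the whole run, which a UPS or wall reading only approximates, and the helper name is illustrative:

```python
def energy_kwh(power_w: float, seconds: float) -> float:
    """Energy in kWh for a constant power draw over a duration."""
    return power_w * seconds / 3.6e6  # 1 kWh = 3.6e6 joules


IDLE_W = 190          # whole PC, idle (from the post)
LR_LOAD_W = 320       # whole PC, running a Nathan_kid long run (from the post)
LR_RUNTIME_S = 29700  # reported long-run runtime on the GTX770

# Extra draw attributable to crunching the long run on the GPU.
crunch_delta_w = LR_LOAD_W - IDLE_W

# Energy for one long run: whole system, and the part above idle.
total_kwh_per_lr = energy_kwh(LR_LOAD_W, LR_RUNTIME_S)
extra_kwh_per_lr = energy_kwh(crunch_delta_w, LR_RUNTIME_S)

# Long runs that fit into 24 hours at this runtime.
lr_per_day = 86400 / LR_RUNTIME_S

print(f"Load minus idle: {crunch_delta_w} W")
print(f"Whole system per long run: {total_kwh_per_lr:.2f} kWh")
print(f"Above idle per long run:   {extra_kwh_per_lr:.2f} kWh")
print(f"Long runs per day:         {lr_per_day:.2f}")
```

The 130W load-minus-idle figure is a whole-system difference, so it is not directly comparable with the 168W measured on a different machine earlier in the thread.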

|

190 W at idle?! Did you deactivate all power management? Some people regularly recommend this, but I think it's really rubbish. Run with the standard configuration, and if things run smoothly leave it at that. Even while crunching for BOINC your system won't be under load all the time (boot, project down, partly free cores etc.). No power saving just means wasted money under such circumstances. You should be looking at <70 W in idle mode. | |

| ID: 31762 | Rating: 0 | rate:

| |
